One-liner summary: An AI chatbot app that lets you chat with multiple documents using an LLM and RAG (retrieval-augmented generation).

Note: This is a prototype, not the code used in production. Please reach out to me ([email protected]) if you need a full-stack, production-ready implementation that follows real-world best practices.

AI ChatBotApp is a Python-based Generative AI application that lets users interact with the content of multiple PDF files by asking questions in natural language. It uses LangChain, an OpenAI LLM, and a vector store such as Pinecone to deliver context-aware answers grounded in your uploaded documents.

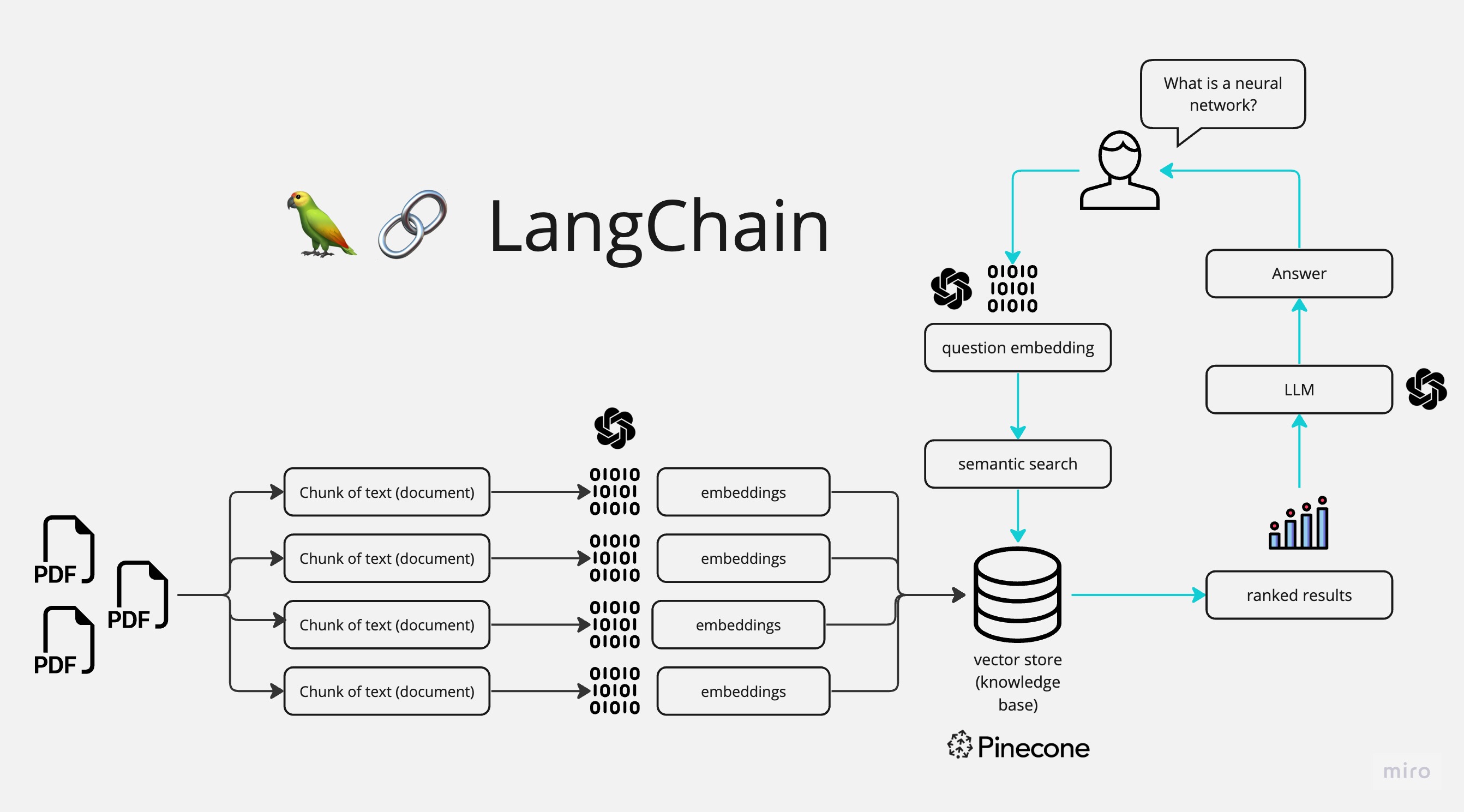

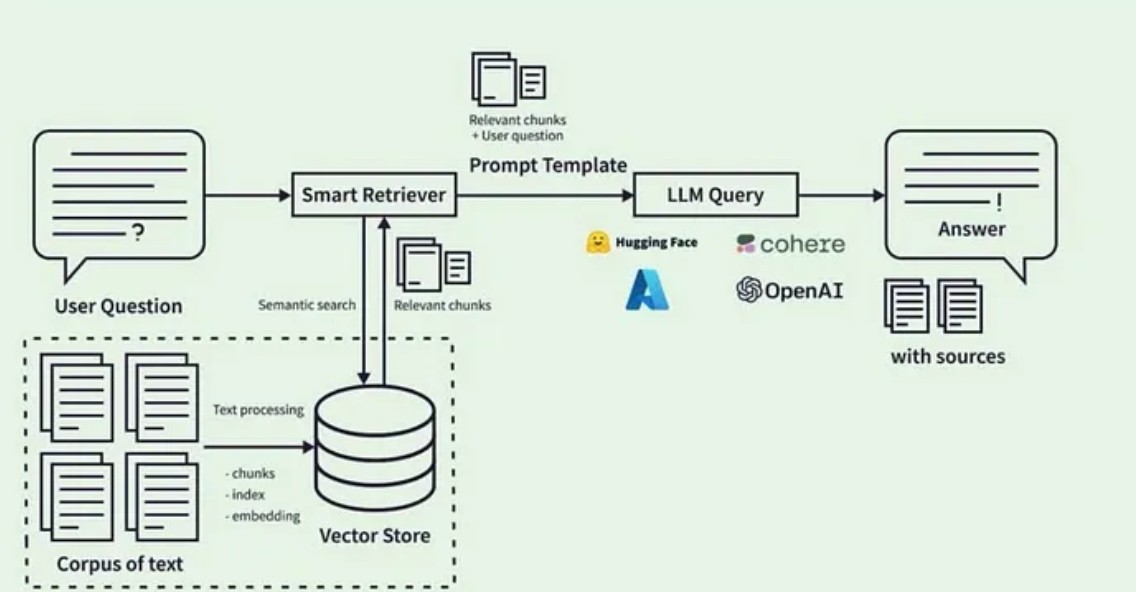

Here’s how the app processes your PDFs and generates responses, following a LangChain + RAG pipeline:


PDF Loading

- Multiple PDF files are loaded, and their text is extracted.
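A minimal sketch of this step, assuming the `pypdf` library for text extraction (the app's actual loader may differ). The hypothetical `load_pages` helper keeps each page's source file name and page number next to its text, which is what would let later steps refer back to where an answer came from. The extractor is passed in as a parameter so the logic can be exercised without a real PDF.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple


@dataclass
class Page:
    source: str       # file name of the uploaded PDF
    page_number: int  # 1-based page index within that file
    text: str


def pypdf_extract_pages(handle) -> List[str]:
    """Extract text from every page of a PDF (path or binary file object).

    Assumes the `pypdf` package is installed; it is imported lazily so the
    rest of this sketch runs without it.
    """
    from pypdf import PdfReader

    return [page.extract_text() or "" for page in PdfReader(handle).pages]


def load_pages(
    files: Iterable[Tuple[str, object]],
    extract_pages: Callable[[object], List[str]] = pypdf_extract_pages,
) -> List[Page]:
    """Load several PDFs and return one Page record per page, in order."""
    pages: List[Page] = []
    for name, handle in files:
        for number, text in enumerate(extract_pages(handle), start=1):
            pages.append(Page(source=name, page_number=number, text=text))
    return pages
```

In a Streamlit app, the objects returned by `st.file_uploader(..., accept_multiple_files=True)` could be passed as `handle`, with each upload's `.name` used as the source.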


Text Chunking

- Long texts are split into smaller chunks so that each piece can be embedded and retrieved on its own.
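The idea can be sketched with fixed-size character windows that overlap, so a sentence cut at one boundary still appears whole in the neighboring chunk. This is a simplified stand-in: LangChain's text splitters (for example `CharacterTextSplitter`) also prefer to break on separators such as newlines, and the `chunk_size=1000` / `chunk_overlap=200` defaults here are illustrative assumptions, not the app's settings.

```python
from typing import List


def split_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> List[str]:
    """Split text into fixed-size character chunks that overlap by chunk_overlap."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap  # how far each window advances
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks
```

For example, `split_text("abcdefghijklmnopqrstuvwxy", chunk_size=10, chunk_overlap=3)` yields four chunks, each starting 7 characters after the previous one.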


Text Embedding

- Each chunk is converted into a vector embedding using an OpenAI embedding model.
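To show what "converted into a vector" means without calling the OpenAI API, here is a toy stand-in that hashes words into a fixed-length, unit-normalized vector. It only captures word overlap, not meaning, so it is purely illustrative; a real setup would use an OpenAI embedding model, for example through LangChain's `OpenAIEmbeddings` and its `embed_documents` method. The function name and the 64-dimension size are arbitrary assumptions.

```python
import hashlib
import math
import re
from typing import List


def embed(text: str, dim: int = 64) -> List[float]:
    """Toy embedding: hash each lowercase word into one of `dim` buckets,
    count occurrences, then scale the vector to unit length."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # md5 gives the same bucket on every run (unlike Python's salted hash())
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec
```

Because every chunk goes through the same function, texts that share words end up with similar vectors; a learned embedding model extends this so that paraphrases also land close together.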


Question Embedding & Semantic Search

- Your question is embedded the same way and compared with the stored chunk embeddings in the vector store (such as Pinecone) to find the most semantically similar chunks.
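Semantic search comes down to ranking stored chunk vectors by their similarity to the question vector. The brute-force sketch below uses cosine similarity and returns the indices of the top `k` chunks; a vector store like Pinecone does the same job with an approximate nearest-neighbor index so it scales to many vectors. The function names and the `k=3` default are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_chunks(
    query_vec: Sequence[float],
    chunk_vecs: Sequence[Sequence[float]],
    k: int = 3,
) -> List[Tuple[int, float]]:
    """Return (chunk_index, score) pairs for the k most similar chunks, best first."""
    scored = [(i, cosine_similarity(query_vec, v)) for i, v in enumerate(chunk_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```

The returned indices map back to the chunk texts, which are what get handed to the LLM in the next step.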


Answer Generation

- The most relevant chunks are passed to the LLM as context, and the LLM generates the final answer from them.
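A common way to pass retrieved chunks to the LLM is to "stuff" them into a single prompt alongside the question (LangChain calls this the `stuff` chain type). The hypothetical `build_prompt` helper below sketches that step; the instruction wording and the `max_chars` budget are assumptions, and the resulting string would then be sent to the OpenAI chat model.

```python
from typing import List


def build_prompt(question: str, chunks: List[str], max_chars: int = 4000) -> str:
    """Stuff retrieved chunks (most relevant first) into one prompt string.

    Chunks are added in order until the approximate character budget is hit;
    the budget ignores the separators between chunks.
    """
    context_parts: List[str] = []
    used = 0
    for number, chunk in enumerate(chunks, start=1):
        part = f"[{number}] {chunk.strip()}"
        if used + len(part) > max_chars:
            break  # drop the remaining, less relevant chunks
        context_parts.append(part)
        used += len(part)

    context = "\n\n".join(context_parts)
    return (
        "Answer the question using only the context below. "
        "If the answer is not in the context, say that you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

Telling the model to answer only from the context is what keeps responses grounded in the uploaded PDFs rather than in the model's general knowledge.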

- Clone the repository:

git clone https://github.com/seankim0/llm_rag_gen_ai_chatbot.git
cd llm_rag_gen_ai_chatbot

- Install the required packages:

pip install -r requirements.txt

- Set your OpenAI API key:

Create a .env file in the project root directory and add:

OPENAI_API_KEY=your_secret_api_key
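To see how the key gets picked up, the sketch below mimics what the `python-dotenv` package's `load_dotenv()` does for simple `KEY=value` files: it reads `.env` and copies the values into `os.environ`, where the OpenAI Python client looks for `OPENAI_API_KEY`. Whether `app.py` uses `python-dotenv` is an assumption; `parse_env` and `load_env_file` are hypothetical names, and this parser ignores advanced syntax such as `export` prefixes and multi-line values.

```python
import os
from pathlib import Path
from typing import Dict


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=value lines, skipping blanks and # comments."""
    values: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        # Remove one pair of matching surrounding quotes, if present
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def load_env_file(path: str = ".env", override: bool = False) -> Dict[str, str]:
    """Copy values from a .env file into os.environ.

    Like python-dotenv's default, existing environment variables win unless
    override=True. Returns the parsed values ({} if the file is missing).
    """
    env_path = Path(path)
    if not env_path.is_file():
        return {}
    values = parse_env(env_path.read_text(encoding="utf-8"))
    for key, value in values.items():
        if override or key not in os.environ:
            os.environ[key] = value
    return values
```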

- Make sure all dependencies are installed and your API key is set.

- Run the application using Streamlit:

streamlit run app.py

- The app interface will open in your web browser.

- Upload one or more PDF documents.

- Start chatting and ask questions about the content in natural language, for example:

  - "What is the main point of this document?"
  - "What does the third page say about neural networks?"
  - "Summarize the findings from the last section."