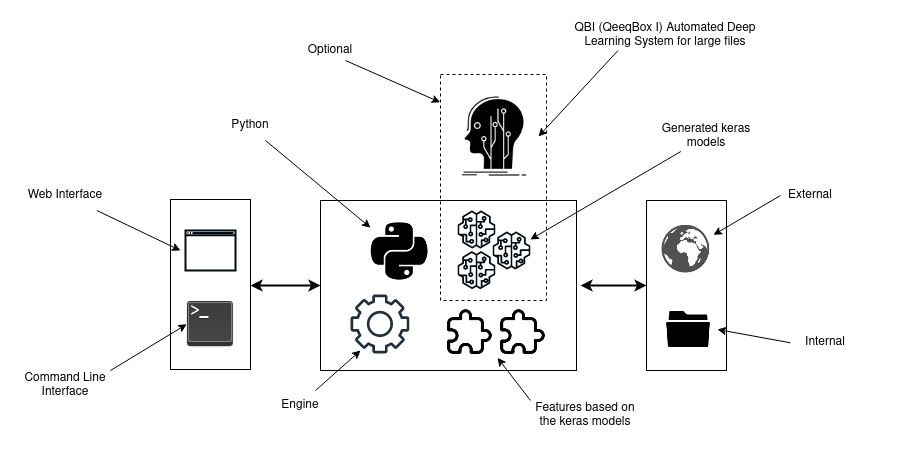

Image analyzer is an interface that simplifies interaction with image-related deep learning models. Its built-in features adjust to the models you load. Point it at a destination folder containing your models, named according to a specific pattern; the built-in features read the model details from those names.

The interface was initially part of an internal project that detects abusive content with the possibility of tracing the subjects.

Install:

    sudo apt-get install -y python3-opencv
    pip3 install image-analyzer

Run the server:

    from imageanalyzer import run_server

    run_server(settings={'input_shape': [224, 224], 'percentage': 0.90, 'options': [], 'weights': {'safe': 50, 'maybe': 75, 'unsafe': 100}, 'verbose': True}, port=8989)

Model file names are made up of the following parts:

- [Name] Name of the model

- [Info] Description

- [Categories] Your model categories (if any)

- [Number] Model position

- .h5 Model file extension

The following are examples of models generated automatically by QeeqBox Automated Deep Learning System for large files. The examples are included in this project.

- [Model 3.224x][Find safe, maybe and unsafe images 3.224][safe unsafe][1].h5

- [Model 2.224x][Find safe and unsafe images 2.224][safe maybe unsafe][2].h5
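
To illustrate the naming pattern, here is a minimal sketch of how such a file name could be split into its parts. It is not taken from the project's code: `parse_model_filename` and `ModelName` are hypothetical names, and the sketch assumes that categories are separated by spaces, as they are in the examples above.

```python
import re
from typing import List, NamedTuple


class ModelName(NamedTuple):
    """Parts of a model file name (hypothetical helper type)."""
    name: str
    info: str
    categories: List[str]
    number: int


# Four bracketed fields followed by the .h5 extension:
# [Name][Info][Categories][Number].h5
_PATTERN = re.compile(
    r"^\[(?P<name>[^\]]*)\]"
    r"\[(?P<info>[^\]]*)\]"
    r"\[(?P<categories>[^\]]*)\]"
    r"\[(?P<number>\d+)\]\.h5$"
)


def parse_model_filename(filename: str) -> ModelName:
    """Split a model file name into name, info, categories and number."""
    match = _PATTERN.match(filename)
    if match is None:
        raise ValueError(f"File name does not follow the pattern: {filename!r}")
    return ModelName(
        name=match["name"],
        info=match["info"],
        # Assumption: categories are space-separated; an empty field gives [].
        categories=match["categories"].split(),
        number=int(match["number"]),
    )


if __name__ == "__main__":
    example = "[Model 3.224x][Find safe, maybe and unsafe images 3.224][safe unsafe][1].h5"
    print(parse_model_filename(example))
```

A file name that does not match the four-field layout raises `ValueError`, which makes it easy to spot misnamed models in the destination folder before starting the server.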