Closed

Description

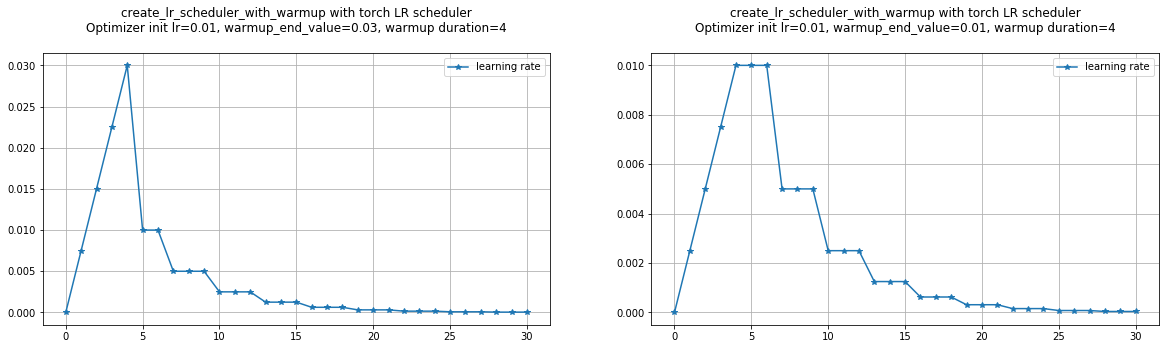

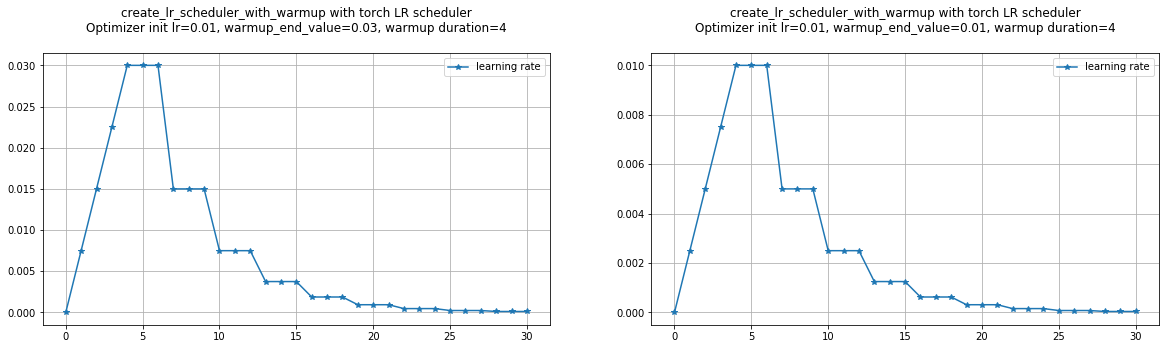

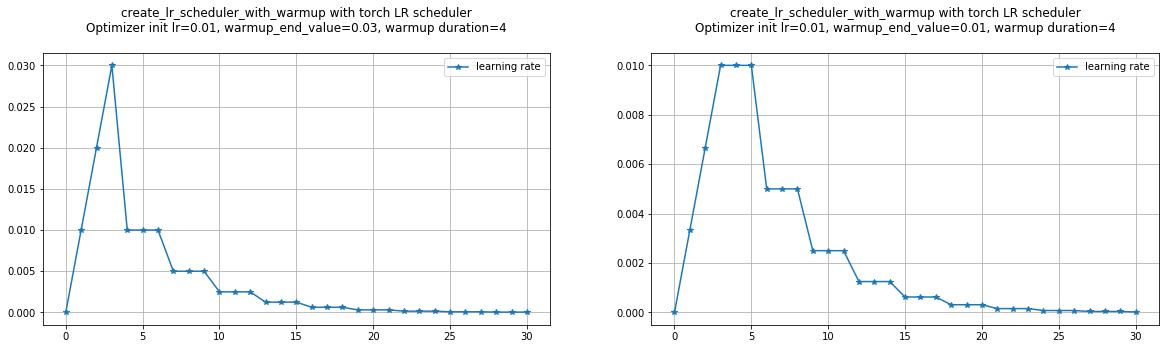

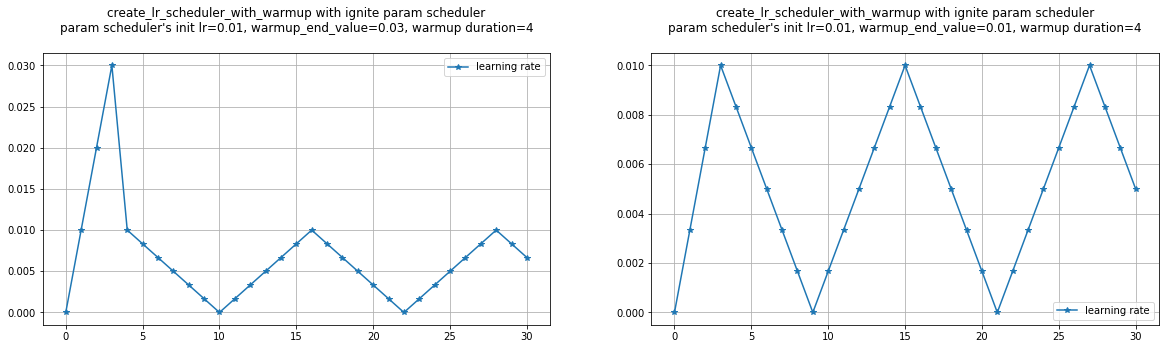

The current behavior of create_lr_scheduler_with_warmup is inconsistent and varies with the PyTorch version (1.3.0 vs nightly).

Current problems:

- with torch LR schedulers, the warmup lasts one event longer than expected

- with torch LR schedulers, the learning rate after warmup should start from the optimizer's initial lr

- in the discontinuous case (warmup_end_value differs from the scheduler's initial value), the first learning rate after warmup is skipped
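The first problem can be checked numerically from recorded `(iteration, lr)` pairs. The sketch below is only an illustration: `warmup_length` is a hypothetical helper, not part of ignite, and the two sample sequences are made-up values, not output from any PyTorch or ignite version.

```python
import math


def warmup_length(lr_values, warmup_end_value):
    """Count events until the lr first reaches warmup_end_value (inclusive).

    lr_values is a sequence of (iteration, lr) pairs, in order.
    Returns None if warmup_end_value is never reached.
    """
    for n_events, (_, lr) in enumerate(lr_values, start=1):
        if math.isclose(lr, warmup_end_value, rel_tol=1e-9, abs_tol=1e-12):
            return n_events
    return None


# Made-up sequences for warmup_duration=4, warmup 0.0 -> 0.03:
expected = [(0, 0.0), (1, 0.01), (2, 0.02), (3, 0.03), (4, 0.01)]
too_long = [(0, 0.0), (1, 0.0075), (2, 0.015), (3, 0.0225), (4, 0.03), (5, 0.01)]

print(warmup_length(expected, 0.03))  # 4
print(warmup_length(too_long, 0.03))  # 5
```

A result larger than the requested `warmup_duration` would indicate the off-by-one described in the first bullet.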

Solution

- same warmup duration for all cases

- add an additional event in discontinuous lr scheduling

- same behaviour for PyTorch v1.3.0 and nightly
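One possible reading of the proposal, written in plain Python so it does not depend on any PyTorch version: the warmup takes exactly `warmup_duration` events and ends at `warmup_end_value`, and the next event starts a StepLR-style decay from the optimizer's initial lr. `sketch_schedule` and its parameters are hypothetical names for this illustration, and the behavior shown is an assumption about the intended result, not ignite's implementation.

```python
def sketch_schedule(warmup_start, warmup_end, warmup_duration,
                    init_lr, step_size, gamma, n_events):
    """Hypothetical reference schedule: linear warmup, then StepLR-style decay."""
    if warmup_duration < 2:
        raise ValueError("this sketch needs warmup_duration >= 2")
    lrs = []
    for event in range(n_events):
        if event < warmup_duration:
            # Linear interpolation that hits warmup_end on the last warmup event.
            frac = event / (warmup_duration - 1)
            lrs.append(warmup_start + (warmup_end - warmup_start) * frac)
        else:
            # Decay restarts from init_lr, so its first value is not skipped.
            k = event - warmup_duration
            lrs.append(init_lr * gamma ** (k // step_size))
    return lrs


lrs = sketch_schedule(0.0, 0.03, 4, init_lr=0.01, step_size=3, gamma=0.5, n_events=10)
print([round(v, 6) for v in lrs])
# [0.0, 0.01, 0.02, 0.03, 0.01, 0.01, 0.01, 0.005, 0.005, 0.005]
```

Comparing recorded values against such a reference would make deviations easy to spot on any PyTorch version.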

Code to reproduce

import numpy as np
import matplotlib.pylab as plt
%matplotlib inline

import torch

import ignite
from ignite.engine import Engine, Events
from ignite.contrib.handlers import LinearCyclicalScheduler, create_lr_scheduler_with_warmup
from ignite.contrib.handlers.param_scheduler import ParamScheduler

# Two torch LR scheduler cases and two ignite param scheduler cases;
# in each pair, warmup_end_value first differs from, then matches, the scheduler's initial lr.
configs = [
    {
        "name": "create_lr_scheduler_with_warmup with torch LR scheduler",
        "description": "Optimizer init lr={}, warmup_end_value={}, warmup duration={}",
        "init_lr": 0.01,
        "warmup_end_value": 0.03,
        "lr_scheduler_cls": torch.optim.lr_scheduler.StepLR,
        "lr_scheduler_kwargs": {"step_size": 3, "gamma": 0.5},
    },
    {
        "name": "create_lr_scheduler_with_warmup with torch LR scheduler",
        "description": "Optimizer init lr={}, warmup_end_value={}, warmup duration={}",
        "init_lr": 0.01,
        "warmup_end_value": 0.01,
        "lr_scheduler_cls": torch.optim.lr_scheduler.StepLR,
        "lr_scheduler_kwargs": {"step_size": 3, "gamma": 0.5},
    },
    {
        "name": "create_lr_scheduler_with_warmup with ignite param scheduler",
        "description": "param scheduler's init lr={}, warmup_end_value={}, warmup duration={}",
        "init_lr": 0.0,
        "warmup_end_value": 0.03,
        "lr_scheduler_cls": LinearCyclicalScheduler,
        "lr_scheduler_kwargs": {"param_name": "lr", "start_value": 0.01, "end_value": 0.0, "cycle_size": 12},
    },
    {
        "name": "create_lr_scheduler_with_warmup with ignite param scheduler",
        "description": "param scheduler's init lr={}, warmup_end_value={}, warmup duration={}",
        "init_lr": 0.0,
        "warmup_end_value": 0.01,
        "lr_scheduler_cls": LinearCyclicalScheduler,
        "lr_scheduler_kwargs": {"param_name": "lr", "start_value": 0.01, "end_value": 0.0, "cycle_size": 12},
    },
]

# Dummy parameter so that an optimizer can be created
t = torch.tensor(0)


def display_create_lr_scheduler_with_warmup(configs):
    print("torch version:", torch.__version__)
    print("ignite master:", ignite.__version__)

    n = 2  # number of plots per figure row
    for i, config in enumerate(configs):
        warmup_duration = 4
        optimizer = torch.optim.SGD([t, ], lr=config['init_lr'])
        lr_scheduler = config['lr_scheduler_cls'](optimizer, **config['lr_scheduler_kwargs'])
        scheduler = create_lr_scheduler_with_warmup(
            lr_scheduler,
            warmup_start_value=0.0,
            warmup_end_value=config['warmup_end_value'],
            warmup_duration=warmup_duration,
        )

        lr_values = []

        def save_lr(engine):
            # Record (0-based iteration, current lr) after the scheduler has run
            lr_values.append((engine.state.iteration - 1, optimizer.param_groups[0]['lr']))

        trainer = Engine(lambda engine, batch: None)
        trainer.add_event_handler(Events.ITERATION_STARTED, scheduler)
        trainer.add_event_handler(Events.ITERATION_STARTED, save_lr)
        trainer.run(list(range(31)), max_epochs=1)

        lr_values = np.array(lr_values)

        # Start a new figure for every n-th config
        if i % n == 0:
            plt.figure(figsize=(10 * n, 5))

        init_lr = config['init_lr'] if not isinstance(lr_scheduler, LinearCyclicalScheduler) else config['lr_scheduler_kwargs']['start_value']

        plt.subplot(1, n, (i % n) + 1)
        plt.title("{}\n{}\n".format(config['name'],
                                    config['description'].format(
                                        init_lr, config['warmup_end_value'], warmup_duration)))
        plt.plot(lr_values[:, 0], lr_values[:, 1], '*-', label="learning rate")
        plt.legend()
        plt.grid(which='both')


# The snippet as posted only defines the function; call it to produce the plots
display_create_lr_scheduler_with_warmup(configs)
