
ARM64: Avoid jump stubs where possible #64148

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

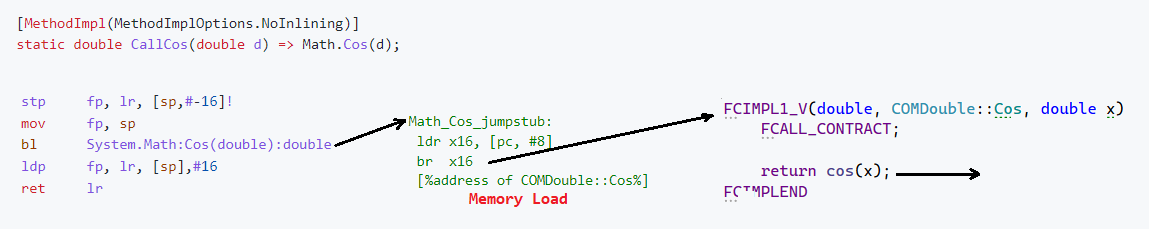

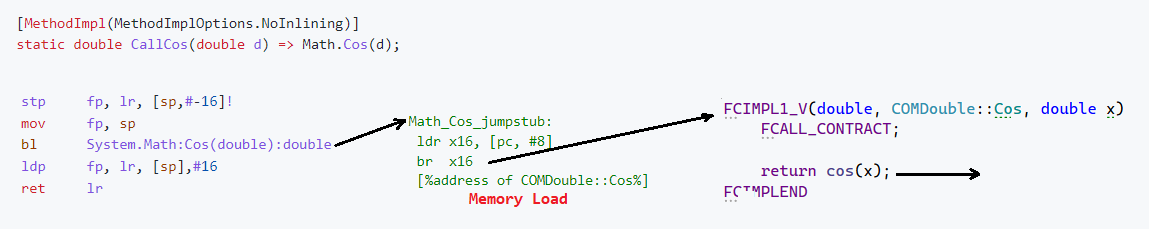

Tagging subscribers to this area: @JulieLeeMSFT

Issue Details

For context: #62302

The idea is to prefer

```asm
movz x0, #0x40c0
movk x0, #0x439, LSL #16
movk x0, #1, LSL #32
blr  x0
```

over

```asm
bl MyCall()
```

for cases where `bl MyCall()` actually points to a jump stub. This PR tries to do that for some of these cases, but not all of them.

TBD: Should I be careful around delegates and P/Invokes, and check for ReJIT/EnC (I guess it's not supported yet for arm)? Am I heading in the right direction, @jkotas? I'm trying to address your 3rd point in #62302 (comment).
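To make the preferred sequence concrete, here is a minimal C++ sketch that builds it for a given absolute address. `EmitIndirectCall` is a hypothetical helper, not the JIT's emitter; it assumes `x0` as the scratch register (as in the example above) and skips zero 16-bit chunks after the first, which reproduces the three-instruction materialization of `0x1043940c0` followed by `blr`.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <string>
#include <vector>

// Hypothetical helper: returns the arm64 instructions that load a 64-bit
// call target into x0 (movz + movk...) and then call through it (blr).
std::vector<std::string> EmitIndirectCall(uint64_t target)
{
    std::vector<std::string> seq;
    char buf[64];

    // movz writes bits 0-15 and zeroes the rest of the register.
    std::snprintf(buf, sizeof(buf), "movz x0, #0x%llx",
                  static_cast<unsigned long long>(target & 0xFFFF));
    seq.emplace_back(buf);

    // movk overwrites one 16-bit chunk and keeps all other bits.
    for (int shift = 16; shift < 64; shift += 16)
    {
        uint64_t chunk = (target >> shift) & 0xFFFF;
        if (chunk == 0)
            continue; // already zero after movz, no instruction needed
        std::snprintf(buf, sizeof(buf), "movk x0, #0x%llx, LSL #%d",
                      static_cast<unsigned long long>(chunk), shift);
        seq.emplace_back(buf);
    }

    seq.emplace_back("blr x0");
    return seq;
}
```

For a typical user-space address whose top 16 bits are zero, this yields at most four instructions (three moves plus `blr`), compared with a single `bl` when the target is within direct-branch reach.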


Diff example:

```csharp
static float Test(float x) => x % 1 + MathF.Cos(x);
```

Diff: https://www.diffchecker.com/mNfaCdFj


The benefits are smaller than those from #63842:

```csharp
[Benchmark]
[Arguments(3.14)]
public double Test(double d) => Math.Cos(d) * Math.Sin(d) * Math.Tan(d); // 3 InternalCalls
```

But the benefits from this PR could be higher if we allowed CSE/loop hoisting for method addresses (especially loop hoisting).

ReJIT is supported for arm64; EnC is not, but it is planned for .NET 7.


What is the arm64 machine that you are running this benchmark on? What does the code for

I am using an Apple M1 mini, so I assume


Addresses (example):

If I apply #63842, then the numbers are:

where

```csharp
float MyCos(float x) => MathF.Cos(x);
```

E.g. when the method above is compiled, the JIT calls

Review comment on `src/coreclr/vm/jitinterface.cpp` (outdated):

```cpp
// guess whether the native instruction (e.g. bl) will be able to reach this target or not
// within 128MB (1MB is subtracted because we don't know the exact code size at this point)
// NOTE: it's still just a hint
if (FitsInRel28(abs((INT64)m_CodeHeader->GetCodeStartAddress() - (INT64)target) + 1024 * 1024))
```


OK, it's not correct to use `m_CodeHeader->GetCodeStartAddress()` here.

What I need here is the approximate location in memory where the method currently being jitted will be allocated. Is that possible?

Or, at the very least, I need the location of coreclr in memory, so that I can return `IMAGE_REL_ARM64_BRANCH26` for everything except coreclr, and all calls to coreclr will use direct calls.


> What I need here is an approximate location where currently jitted method will be allocated in memory, is it possible?

It is certainly possible, but it is not a one-line change with the current implementation of the code manager. I think we would need to refactor the code manager and how the JIT interacts with it to make this possible.
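Assuming an estimate of the call-site address were available, the check described in the review comment (distance from call site to target, plus a 1 MB safety margin, must fit in the ±128 MB reach of `bl`) could look like the sketch below. `FitsInSigned28` and `BranchMayReach` are hypothetical names, not CoreCLR's `FitsInRel28` or `getRelocTypeHint`, and the `callSite` argument is exactly the value the JIT does not currently know.

```cpp
#include <cassert>
#include <cstdint>

// A signed 28-bit byte offset covers [-2^27, 2^27 - 1], i.e. about +/-128 MB,
// which matches the reach of arm64 B/BL (signed 26-bit immediate scaled by 4).
bool FitsInSigned28(int64_t value)
{
    return value >= -(INT64_C(1) << 27) && value < (INT64_C(1) << 27);
}

// Hypothetical reachability hint. Assumes both addresses are user-space
// pointers, so the subtraction cannot overflow. The slack accounts for the
// code size that is not known yet when the hint is requested.
bool BranchMayReach(int64_t callSite, int64_t target, int64_t slack = 1024 * 1024)
{
    assert(slack >= 0);
    int64_t delta = target - callSite;              // signed distance
    int64_t distance = delta < 0 ? -delta : delta;  // absolute value of the difference
    return FitsInSigned28(distance + slack);
}
```

Taking the absolute value of the *difference* (rather than of either address) is what makes the check symmetric for targets before and after the call site.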


I assume x64 would also benefit from this: the current `getRelocTypeHint` implementation is not accurate there either, and in some cases it most likely leads to jump stubs or to "rejit with relocs off" (where relocs are turned off globally for all future methods).

How does it "just jump"? It needs some sort of indirect jump. It would be useful to know the exact instructions used to perform the long jumps (similar to what we need). I assume that sequence is the most efficient one, and we should be using the same one.

@jkotas here is the result of

I am not sure I understand how to read it. I thought


Hm, or maybe I am wrong; checking...


So with the default settings we have coreclr at, e.g., address

As you can see, the heap with compiled managed code is quite far away from CoreCLR; the distance is ~6 GB. But with the #63842 change it suddenly changes to

I didn't save
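For scale, assuming "~6 GB" means roughly 6 GiB: the `bl` immediate is a signed 26-bit instruction count, and an x64 rel32 displacement is a signed 32-bit byte offset. Such a distance is therefore about 48 times the arm64 direct-call reach (and 3 times the x64 one), so every call across it needs either a jump stub or an indirect sequence:

```math
\begin{aligned}
\texttt{bl}\ \text{(arm64)}:&\quad \pm 2^{25}\ \text{instructions} \times 4\ \text{B} = \pm 2^{27}\ \text{B} = \pm 128\ \text{MiB}\\
\text{rel32 (x64)}:&\quad \pm 2^{31}\ \text{B} = \pm 2\ \text{GiB}\\
6\ \text{GiB} &= 6 \cdot 2^{30}\ \text{B} = 48 \cdot 2^{27}\ \text{B} = 3 \cdot 2^{31}\ \text{B}
\end{aligned}
```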

Address space layout randomization (ASLR) will give you addresses that are far from each other some of the time. You may be able to observe some patterns in ASLR behavior for a given program on a given OS version, but generalizing the observed patterns is very fragile.

I think it means that this branch points to some sort of jump stub. It does not go directly to the target.

That is my impression as well, but it reproduces quite stably 🙂

Yeah, it seems it's pointing to:

These instructions look identical to the jump stubs that we are using. Why are the jump stubs that we are using so much slower?

My interpretation of the issue wasn't that our jump stubs are slower, but rather that we are doing jump stubs on top of jump stubs. So while in C/C++ you may have

we have

We are basically turning what is normally a single or double branch into a 4-5 level branch (and possibly an actual call stack increase).

It would certainly be nice to have this more streamlined. However, the numbers from #64148 (comment) are comparing:

The extra

#39474 tried to do that for math functions, but ran into problems with getting them recognized as JIT intrinsics at the same time.

I'd guess the combination of randomized addresses and multiple indirections isn't handled well by the CPU or the instruction cache, particularly since it's not a "common" pattern in native code. Agner Fog's microarchitecture manual (https://www.agner.org/optimize/microarchitecture.pdf) and the Intel/AMD/ARM optimization manuals all touch on how decoding works and note that code is fetched in 16- or 32-byte aligned windows. Unconditional branches can still impact the branch prediction tables, and the microcode cache only goes so far.

I was also surprised: the 1-2 ns difference in the PR makes perfect sense, but a difference as large as the one in Core_Root_smaller_reservmem doesn't. I can only blame the branch predictor. I'll rerun the benchmark and try to profile it.


You can also try some other cases, like regular managed method to managed method calls. There may be something special about Math.Cos.


Hm...

Do they use the same

Yes. To experiment with jump stubs, you can comment out


Benchmarks:

```csharp
using System;
using System.Runtime.CompilerServices;
using BenchmarkDotNet.Attributes;
using BenchmarkDotNet.Running;

public class Test
{
    public static void Main(string[] args) =>
        BenchmarkSwitcher.FromAssembly(typeof(Test).Assembly).Run(args);

    [Benchmark]
    public int CurrentManagedThreadId() => Environment.CurrentManagedThreadId; // 1 InternalCall

    [Benchmark]
    [Arguments(3.14f)]
    public float MyCos(float x) => MathF.Cos(x); // 1 InternalCall

    [Benchmark]
    [Arguments(3.14f)]
    public float MyCosSinTan(float x) => MathF.Cos(x) + MathF.Sin(x) + MathF.Tan(x); // 3 InternalCalls

    [Benchmark]
    public object Allocate() =>
        new object[] { new(), new() }; // CORINFO_HELP_NEWARR_1_OBJ and two CORINFO_HELP_NEWSFAST

    [Benchmark]
    public void CallManagedMethod() // managed to managed
    {
        TestCallee();
        TestCallee();
        TestCallee();
        TestCallee();
    }

    [Benchmark]
    public void CallManagedMethodLoop() // managed to managed in a loop
    {
        for (int i = 0; i < 100; i++)
        {
            // NOTE: method address is not hoisted
            TestCallee();
            TestCallee();
            TestCallee();
            TestCallee();
        }
    }

    [MethodImpl(MethodImplOptions.NoInlining)]
    void TestCallee() {}
}
```

I've built 4 Core_Roots (Release-osx-arm64):

Here are the results:

Notes:

Am I correct that the jump stub for managed-to-managed calls actually jumps to another "jump stub" that is used to replace the method (a PRECODE fixup)?

Yes, it is to support ReJIT and tiered compilation.
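To illustrate why a managed-to-managed call takes that extra hop, here is a toy C++ model of a patchable indirection cell: call sites always go through a stable cell, and the runtime retargets the cell when a new code version (e.g. a tier-1 or ReJIT'd body) is installed. `Precode`, `Tier0` and `Tier1` are illustrative names only; CoreCLR's real precode is a small machine-code thunk, not a C++ object holding a function pointer.

```cpp
#include <atomic>
#include <cassert>

using MethodEntry = int (*)(int);

// Two code versions of the same method; the counters show which one ran.
int g_tier0Calls = 0;
int g_tier1Calls = 0;
int Tier0(int x) { ++g_tier0Calls; return x + 1; } // e.g. unoptimized code
int Tier1(int x) { ++g_tier1Calls; return x + 1; } // e.g. optimized code

// Toy stand-in for a precode: a stable location that callers bind to.
struct Precode
{
    std::atomic<MethodEntry> target;

    explicit Precode(MethodEntry initial) : target(initial) {}

    // Every call pays one extra indirection through the cell.
    int Invoke(int x) const { return target.load(std::memory_order_acquire)(x); }

    // Installing a new version patches only the cell, never the call sites.
    void Retarget(MethodEntry next)
    {
        assert(next != nullptr);
        target.store(next, std::memory_order_release);
    }
};
```

If the call site itself is out of `bl` range of the cell, a jump stub is added in front of it, which is how one call can end up chaining through several branches.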


Related: it would be nice if we could use the initial heap only for optimized code and allocate all unoptimized code in a separate one.

… into vm-less-jumpstubs # Conflicts: # src/coreclr/vm/jitinterface.cpp


Draft Pull Request was automatically closed for inactivity. Please let us know if you'd like to reopen it.

For context: #62302

The idea is to prefer materializing the target address and calling through a register (`movz`/`movk` followed by `blr x0`) over `bl MyCall()` for cases where `bl MyCall()` actually points to a jump stub. It seems that in most cases the heap with jitted code is quite far away from CoreCLR (the VM), so all internal calls and helper calls need jump stubs at the moment; managed-to-managed calls seem to be affected as well.

Am I heading in the right direction, @jkotas? I'm trying to address your 3rd point in #62302 (comment).