-

Notifications

You must be signed in to change notification settings - Fork 72

Fix for date format mismatch #298

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Fix for date format mismatch #298

Conversation

Codecov Report

@@ Coverage Diff @@

## main #298 +/- ##

=======================================

Coverage 95.89% 95.89%

=======================================

Files 65 65

Lines 2800 2804 +4

Branches 418 420 +2

=======================================

+ Hits 2685 2689 +4

Misses 73 73

Partials 42 42

Continue to review full report at Codecov.

|

|

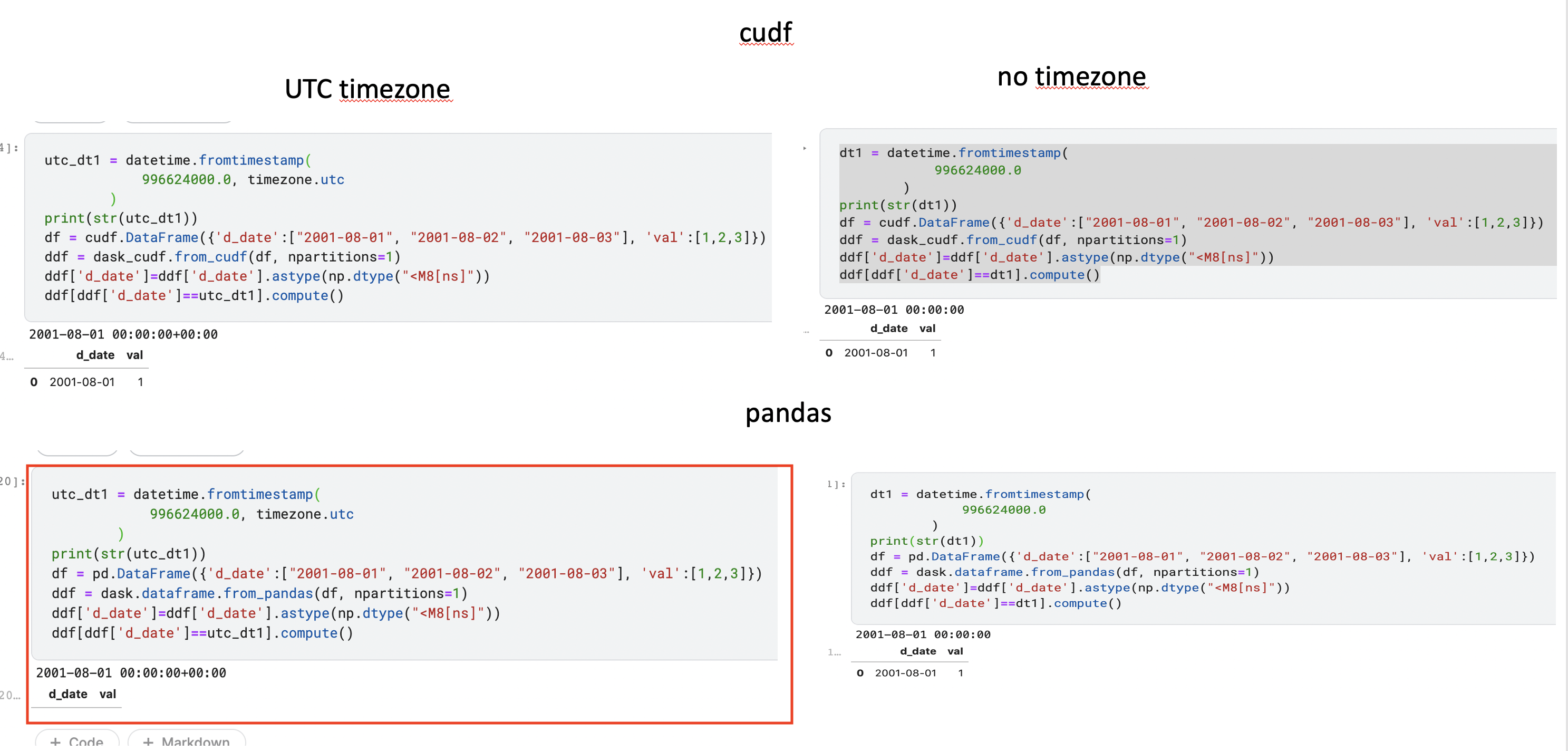

This looks like a reasonable solution. Any ideas why cuDF works while Pandas fails ? |

|

Hi @quasiben, Thanks for reviewing the PR and putting me in the right direction (I missed the part about why it works for CUDF) I tried digging deep into this and found the following, Both pandas and cudf seem to work for the local timezone. but pandas doesn't seem to work with UTC timezone. while executing the query, we are converting the literal timestamp value to the datetime value using the following function. Lines 160 to 165 in 61cbca9

example using the above function: Since we are only accepting UTC timezone currently, we may need to do the casting explicitly, what do you think? And One more point in the favour of handling date casting explicitly is the current version of code includes dask-sql/tests/integration/test_select.py Lines 120 to 127 in 61cbca9

Open for other ideas/Suggestions from everyone! |

|

Thank for digging into this @rajagurunath ! I didn't know dask-sql sets everything as UTC if TZ is not defined.

I suspect this is slower than what you have. |

charlesbluca

left a comment

charlesbluca

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for the work here @rajagurunath 😄 just a few comments:

| int(literal_value.getTimeInMillis()) / 1000, timezone.utc | ||

| ) | ||

|

|

||

| if sql_type == "DATE": |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Would you mind adding a comment here linking to #296?

| if sql_type == "DATE": | |

| # address datetime format mismatch | |

| # https://github.com/dask-contrib/dask-sql/issues/296 | |

| if sql_type == "DATE": |

| if return_column is None: | ||

| return operand | ||

| else: | ||

| # handle datetime type specially |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Same as above - just adding a link to #296

| # handle datetime type specially | |

| # address datetime format mismatch | |

| # https://github.com/dask-contrib/dask-sql/issues/296 |

| assert_frame_equal(result_df, datetime_table) | ||

|

|

||

|

|

||

| def test_date_casting(c, datetime_table): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thinks we don't actually need datetime_table here? Since we only use the context here

| def test_date_casting(c, datetime_table): | |

| def test_date_casting(c): |

|

@rajagurunath Thanks for working on this! One thing I noticed was that when I perform a date comparison with this PR: import dask.dataframe as dd

import pandas as pd

from dask_sql import Context

df = pd.DataFrame({'d_date':["2001-08-01", "2001-08-02", "2001-08-03"], 'val':[1,2,3]})

ddf = dd.from_pandas(df, npartitions=1)

c = Context()

c.create_table("df", ddf)

query = "SELECT val, d_date FROM df WHERE CAST(d_date as date) >= DATE '2001-08-02'"

c.sql(query).compute()I get: When I run the same code without this PR I get: Do you know what might be happening here? |

|

Working on a PR to supersede this one in #343 |

Initial attempt to solve: #296

Explicitly handled the type conversion for the

datecolumn and a literal value. And wondering if there is any better way to handle this datetime casting. Happy to know everyone's thoughts on this!