-

Notifications

You must be signed in to change notification settings - Fork 29k

[SPARK-17091][SQL] Add rule to convert IN predicate to equivalent Parquet filter #21603

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Changes from 2 commits

264eed8

4f96881

b9b3160

d57f44c

8218596

fdb79b3

ec96b0f

5e748f9

f5fba0e

c386e02

File filter

Filter by extension

Conversations

Jump to

Diff view

Diff view

There are no files selected for viewing

| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -270,6 +270,11 @@ private[parquet] class ParquetFilters(pushDownDate: Boolean) { | |

| case sources.Not(pred) => | ||

| createFilter(schema, pred).map(FilterApi.not) | ||

|

|

||

| case sources.In(name, values) if canMakeFilterOn(name) && values.length < 20 => | ||

| values.flatMap { v => | ||

| makeEq.lift(nameToType(name)).map(_(name, v)) | ||

| }.reduceLeftOption(FilterApi.or) | ||

|

Contributor

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. what about null handling? Do we get the same result as before? Anyway, can we add a test for it? |

||

|

|

||

| case _ => None | ||

| } | ||

| } | ||

|

|

||

| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -20,7 +20,7 @@ package org.apache.spark.sql.execution.datasources.parquet | |

| import java.nio.charset.StandardCharsets | ||

| import java.sql.Date | ||

|

|

||

| import org.apache.parquet.filter2.predicate.{FilterPredicate, Operators} | ||

| import org.apache.parquet.filter2.predicate.{FilterApi, FilterPredicate, Operators} | ||

| import org.apache.parquet.filter2.predicate.FilterApi._ | ||

| import org.apache.parquet.filter2.predicate.Operators.{Column => _, _} | ||

|

|

||

|

|

@@ -660,6 +660,34 @@ class ParquetFilterSuite extends QueryTest with ParquetTest with SharedSQLContex | |

| assert(df.where("col > 0").count() === 2) | ||

| } | ||

| } | ||

|

|

||

| test("SPARK-17091: Convert IN predicate to Parquet filter push-down") { | ||

| val schema = StructType(Seq( | ||

| StructField("a", IntegerType, nullable = false) | ||

| )) | ||

|

|

||

| assertResult(Some(FilterApi.eq(intColumn("a"), 10: Integer))) { | ||

| parquetFilters.createFilter(schema, sources.In("a", Array(10))) | ||

| } | ||

|

|

||

| assertResult(Some(or( | ||

|

||

| FilterApi.eq(intColumn("a"), 10: Integer), | ||

| FilterApi.eq(intColumn("a"), 20: Integer))) | ||

| ) { | ||

| parquetFilters.createFilter(schema, sources.In("a", Array(10, 20))) | ||

| } | ||

|

|

||

| assertResult(Some(or(or( | ||

| FilterApi.eq(intColumn("a"), 10: Integer), | ||

| FilterApi.eq(intColumn("a"), 20: Integer)), | ||

| FilterApi.eq(intColumn("a"), 30: Integer))) | ||

| ) { | ||

| parquetFilters.createFilter(schema, sources.In("a", Array(10, 20, 30))) | ||

| } | ||

|

|

||

| assert(parquetFilters.createFilter(schema, sources.In("a", Range(1, 20).toArray)).isDefined) | ||

| assert(parquetFilters.createFilter(schema, sources.In("a", Range(1, 21).toArray)).isEmpty) | ||

| } | ||

| } | ||

|

|

||

| class NumRowGroupsAcc extends AccumulatorV2[Integer, Integer] { | ||

|

|

||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The threshold is 20. Too many

valuesmay be OOM, for example:There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

what about making this threshold configurable?

Uh oh!

There was an error while loading. Please reload this page.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

make it configurable. Use

spark.sql.parquet.pushdown.inFilterThreshold. By default, it should be around 10. Please also check the perf.cc @jiangxb1987

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

+1

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

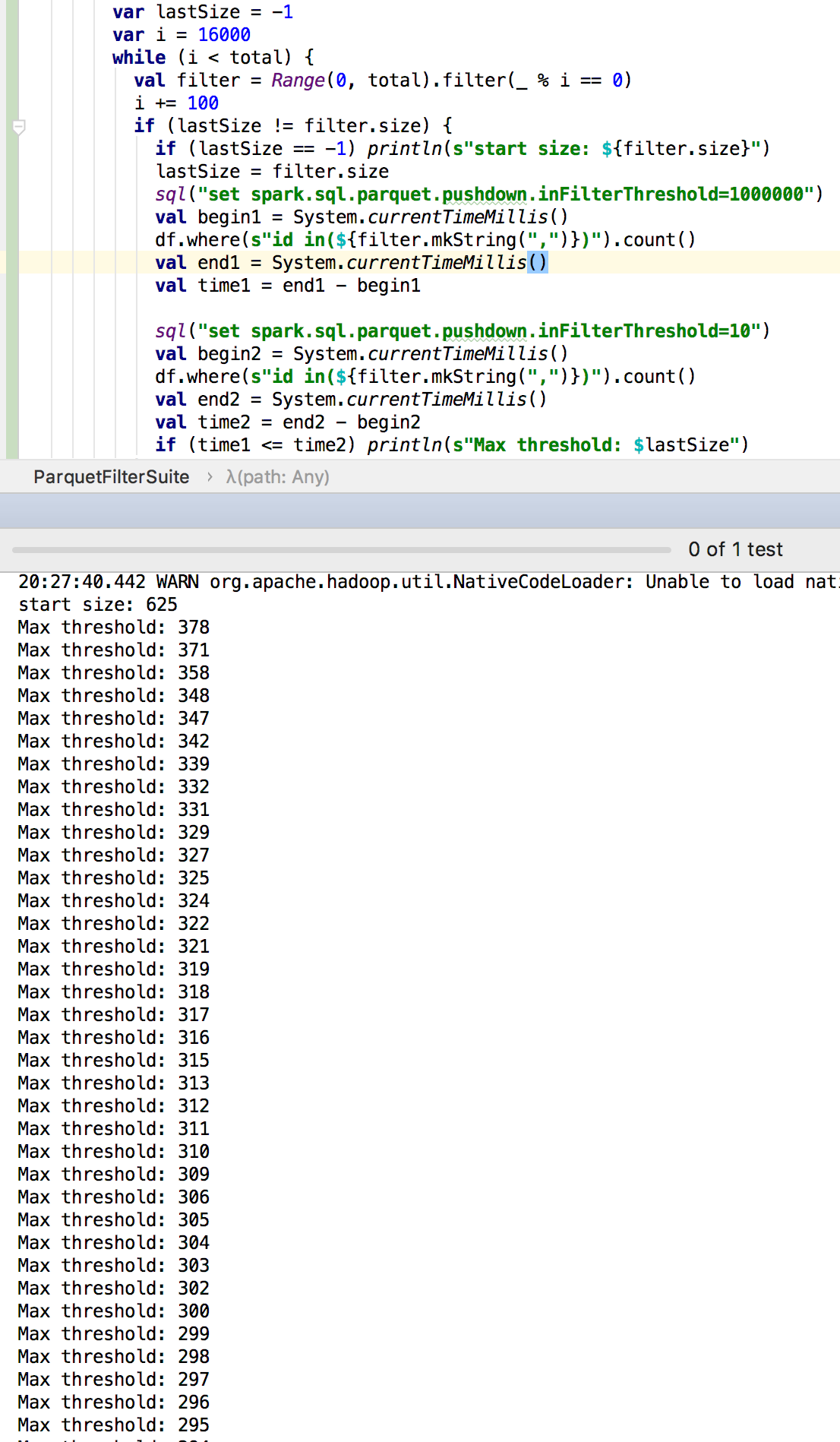

It seems that the push-down performance is better when threshold is less than

300:The code:

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for doing this benchmark, this shall be useful, while I still have some questions:

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It mainly depends on how many row groups can skip. for small table (assuming only one row group). There is no obvious difference.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I have prepared a test case that you can verify it: