-

Notifications

You must be signed in to change notification settings - Fork 2.5k

[HUDI-4937] Fix HoodieTable injecting non-reusable HoodieBackedTableMetadata aggressively flushing MT readers

#6815

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

hey Alexey. I am not sure if we can completely remove the reuse flag. Vinoth was involved while designing the reuse flag. |

2e3420c to

12160b8

Compare

|

@hudi-bot run azure |

nsivabalan

left a comment

nsivabalan

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This needs to be thoroughly vetted. We have some code paths, where we disabled reuse explicitly.

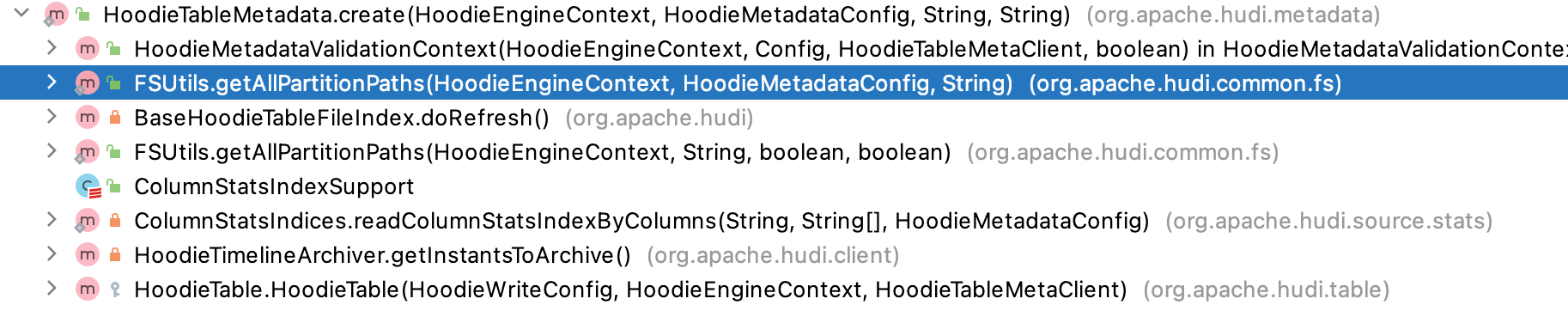

Can we list out all callers where re-use is false and we can confirm that enabling re-use is the right fix.

From what I glean, I see two places.

- Metadata table writer initalized w/ reuse = false, which makes sense.

static HoodieTableMetadata create(HoodieEngineContext engineContext, HoodieMetadataConfig metadataConfig, String datasetBasePath,

String spillableMapPath) {

return create(engineContext, metadataConfig, datasetBasePath, spillableMapPath, false);

}

But this method has quite a few callers.

let's sync up sometime and go over the list to ensure we don't cause any regression w/ unintentional revert.

12160b8 to

0025243

Compare

13fb788 to

3d90e88

Compare

|

|

||

| private static final Logger LOG = Logger.getLogger(LeakTrackingFSDataInputStream.class.getName()); | ||

|

|

||

| private final StackTraceElement[] callSite; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is only for debug purpose, right?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Correct

f8826f0 to

987bcb8

Compare

HoodieTable injecting non-reusable HoodieBackedTableMetadata aggressively flushing MT readersHoodieTable injecting non-reusable HoodieBackedTableMetadata aggressively flushing MT readers

031dc62 to

97af245

Compare

- Broadcasted instance of MT for Spark - Transient instance of MT for Flink This would allow to make sure that wherever `HoodieTable` is used MT will be broadcasted no more than once per executor

…streams potentially holding on to a S3 connections

…kedTableMetadata`

…ces held, as well as destroy held objects

…t` for ser/de to make sure clean up sequence doesn't re-fetch the `Broadcast` unnecessarily

… of holding the `Broadcast`

2879baf to

e3b6af9

Compare

| } | ||

| } | ||

|

|

||

| public static <T> HoodieSparkTable<T> create(HoodieWriteConfig config, HoodieEngineContext context) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

These ones were moved as is

| super(config, context, metaClient); | ||

| } | ||

|

|

||

| @Override |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This just been moved; no changes (there were other changes, but these were reverted back)

| } | ||

|

|

||

| @Override | ||

| protected HoodieIndex getIndex(HoodieWriteConfig config, HoodieEngineContext context) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

No changes

nsivabalan

left a comment

nsivabalan

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM except for 1 comment.

| protected static final long MAX_MEMORY_SIZE_IN_BYTES = 1024 * 1024 * 1024; | ||

| // NOTE: Buffer-size is deliberately set pretty low, since MT internally is relying | ||

| // on HFile (serving as persisted binary key-value mapping) to do caching | ||

| protected static final int BUFFER_SIZE = 10 * 1024; // 10Kb |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

did you get a chance to run any benchmarks w/ this fix?

even w. Hfile, if the inputStream size is set to 10Kb, while original hfile block size is 64Kb, we might make more remote calls just to fetch 1 hfile block right? instead of just 1.

|

This change is currently blocked on HUDI-3397: now that |

|

hi @nsivabalan ! is this supposed to be integrated in 0.14 ? As of 0.13.1, cleaning w/ MDT still is incredibly slow in this context:

We recently had a cleaning job w/ MDT running for 24h while dropping the MDT the job ended in few minutes. Thanks |

yihua

left a comment

yihua

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Problems addressed by this PR are already resolved on the latest master. Closing this PR.

Change Logs

Currently,

HoodieTableis holdingHoodieBackedTableMetadatathat is setup not to reuse actual LogScanner and HFileReader used to read MT itself.This is proving to be wasteful on a number of occasions already, including (not an exhaustive list):

Changes

reuseflag from theHoodieBackedTableMetadatactor to make sure readers are always reusedHoodieBackedTableMetadataasBroadcastin HoodieTable to make sure it's being deserialized once per executor (instead of currently being once per task)HoodieBackedTableMetadataserializable object (still omitting the readers though)HoodieSparkKryoRegistrarto be able to register serializer forSerializableConfigurationImpact

Risk level: Low

Documentation Update

Documentation update is required now specifying that

--conf spark.kryo.registrator=org.apache.spark.HoodieSparkKryoRegistrarproperty will be mandatoryContributor's checklist