
Description

The Problem I faced

When I compile Hudi against HBase 2.2.6 with the command `mvn clean package -DskipTests -DskipITs -Dhbase.version=2.2.6`, I get errors like the following:

```
[ERROR] Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:3.8.0:compile (default-compile) on project hudi-common: Compilation failure: Compilation failure:
[ERROR] /root/hudi-0.9.0/hudi-common/src/main/java/org/apache/hudi/common/bootstrap/index/HFileBootstrapIndex.java:[181,34] no suitable method found for createReader(org.apache.hadoop.fs.FileSystem,org.apache.hudi.common.bootstrap.index.HFileBootstrapIndex.HFilePathForReader,org.apache.hadoop.hbase.io.hfile.CacheConfig,org.apache.hadoop.conf.Configuration)
[ERROR] method org.apache.hadoop.hbase.io.hfile.HFile.createReader(org.apache.hadoop.fs.FileSystem,org.apache.hadoop.fs.Path,org.apache.hadoop.hbase.io.FSDataInputStreamWrapper,long,org.apache.hadoop.hbase.io.hfile.CacheConfig,boolean,org.apache.hadoop.conf.Configuration) is not applicable
[ERROR] (actual and formal argument lists differ in length)
[ERROR] method org.apache.hadoop.hbase.io.hfile.HFile.createReader(org.apache.hadoop.fs.FileSystem,org.apache.hadoop.fs.Path,org.apache.hadoop.conf.Configuration) is not applicable
[ERROR] (actual and formal argument lists differ in length)
[ERROR] method org.apache.hadoop.hbase.io.hfile.HFile.createReader(org.apache.hadoop.fs.FileSystem,org.apache.hadoop.fs.Path,org.apache.hadoop.hbase.io.hfile.CacheConfig,boolean,org.apache.hadoop.conf.Configuration) is not applicable
[ERROR] (actual and formal argument lists differ in length)
[ERROR] /root/hudi-0.9.0/hudi-common/src/main/java/org/apache/hudi/common/bootstrap/index/HFileBootstrapIndex.java:[309,93] cannot find symbol
[ERROR] symbol: method getKeyValue()
[ERROR] location: variable scanner of type org.apache.hadoop.hbase.io.hfile.HFileScanner
[ERROR] /root/hudi-0.9.0/hudi-common/src/main/java/org/apache/hudi/common/bootstrap/index/HFileBootstrapIndex.java:[534,51] incompatible types: org.apache.hudi.common.bootstrap.index.HFileBootstrapIndex.HoodieKVComparator cannot be converted to org.apache.hadoop.hbase.CellComparator
[ERROR] /root/hudi-0.9.0/hudi-common/src/main/java/org/apache/hudi/common/bootstrap/index/HFileBootstrapIndex.java:[537,51] incompatible types: org.apache.hudi.common.bootstrap.index.HFileBootstrapIndex.HoodieKVComparator cannot be converted to org.apache.hadoop.hbase.CellComparator
[ERROR] /root/hudi-0.9.0/hudi-common/src/main/java/org/apache/hudi/io/storage/HoodieHFileReader.java:[72,24] no suitable method found for createReader(org.apache.hadoop.fs.FileSystem,org.apache.hadoop.fs.Path,org.apache.hadoop.hbase.io.hfile.CacheConfig,org.apache.hadoop.conf.Configuration)
[ERROR] method org.apache.hadoop.hbase.io.hfile.HFile.createReader(org.apache.hadoop.fs.FileSystem,org.apache.hadoop.fs.Path,org.apache.hadoop.hbase.io.FSDataInputStreamWrapper,long,org.apache.hadoop.hbase.io.hfile.CacheConfig,boolean,org.apache.hadoop.conf.Configuration) is not applicable
[ERROR] (actual and formal argument lists differ in length)
[ERROR] method org.apache.hadoop.hbase.io.hfile.HFile.createReader(org.apache.hadoop.fs.FileSystem,org.apache.hadoop.fs.Path,org.apache.hadoop.conf.Configuration) is not applicable
[ERROR] (actual and formal argument lists differ in length)
[ERROR] method org.apache.hadoop.hbase.io.hfile.HFile.createReader(org.apache.hadoop.fs.FileSystem,org.apache.hadoop.fs.Path,org.apache.hadoop.hbase.io.hfile.CacheConfig,boolean,org.apache.hadoop.conf.Configuration) is not applicable
[ERROR] (actual and formal argument lists differ in length)
[ERROR] /root/hudi-0.9.0/hudi-common/src/main/java/org/apache/hudi/io/storage/HoodieHFileReader.java:[80,24] no suitable method found for createReader(org.apache.hadoop.fs.FileSystem,org.apache.hadoop.fs.Path,org.apache.hadoop.hbase.io.FSDataInputStreamWrapper,int,org.apache.hadoop.hbase.io.hfile.CacheConfig,org.apache.hadoop.conf.Configuration)
[ERROR] method org.apache.hadoop.hbase.io.hfile.HFile.createReader(org.apache.hadoop.fs.FileSystem,org.apache.hadoop.fs.Path,org.apache.hadoop.hbase.io.FSDataInputStreamWrapper,long,org.apache.hadoop.hbase.io.hfile.CacheConfig,boolean,org.apache.hadoop.conf.Configuration) is not applicable
[ERROR] (actual and formal argument lists differ in length)
[ERROR] method org.apache.hadoop.hbase.io.hfile.HFile.createReader(org.apache.hadoop.fs.FileSystem,org.apache.hadoop.fs.Path,org.apache.hadoop.conf.Configuration) is not applicable
[ERROR] (actual and formal argument lists differ in length)
[ERROR] method org.apache.hadoop.hbase.io.hfile.HFile.createReader(org.apache.hadoop.fs.FileSystem,org.apache.hadoop.fs.Path,org.apache.hadoop.hbase.io.hfile.CacheConfig,boolean,org.apache.hadoop.conf.Configuration) is not applicable
[ERROR] (actual and formal argument lists differ in length)
[ERROR] /root/hudi-0.9.0/hudi-common/src/main/java/org/apache/hudi/io/storage/HoodieHFileReader.java:[114,56] incompatible types: org.apache.hadoop.hbase.io.hfile.HFileBlock cannot be converted to java.nio.ByteBuffer
[ERROR] /root/hudi-0.9.0/hudi-common/src/main/java/org/apache/hudi/io/storage/HoodieHFileReader.java:[149,27] cannot find symbol
[ERROR] symbol: method getKeyValue()
[ERROR] location: variable scanner of type org.apache.hadoop.hbase.io.hfile.HFileScanner
[ERROR] /root/hudi-0.9.0/hudi-common/src/main/java/org/apache/hudi/io/storage/HoodieHFileReader.java:[180,54] cannot find symbol
[ERROR] symbol: method getKeyValue()
[ERROR] location: variable scanner of type org.apache.hadoop.hbase.io.hfile.HFileScanner
[ERROR] /root/hudi-0.9.0/hudi-common/src/main/java/org/apache/hudi/io/storage/HoodieHFileReader.java:[200,50] cannot find symbol
[ERROR] symbol: method getKeyValue()
[ERROR] location: variable scanner of type org.apache.hadoop.hbase.io.hfile.HFileScanner
[ERROR] /root/hudi-0.9.0/hudi-common/src/main/java/org/apache/hudi/io/storage/HoodieHFileReader.java:[224,28] cannot find symbol
[ERROR] symbol: method getKeyValue()
[ERROR] location: variable keyScanner of type org.apache.hadoop.hbase.io.hfile.HFileScanner
[ERROR] -> [Help 1]
[ERROR]
[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.
[ERROR] Re-run Maven using the -X switch to enable full debug logging.
[ERROR]
[ERROR] For more information about the errors and possible solutions, please read the following articles:
[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/MojoFailureException
[ERROR]
[ERROR] After correcting the problems, you can resume the build with the command
[ERROR] mvn <goals> -rf :hudi-common
```

To Reproduce

```
mvn clean package -DskipTests -DskipITs -Dhbase.version=2.2.6
```

Environment Description

- Hudi version: 0.9.0
- HBase version: 2.2.6

What I did to fix the errors

I checked the errors in HFileBootstrapIndex.java and HoodieHFileReader.java and fixed them according to the API changes between HBase 1.x and HBase 2.x. The relevant changes are listed below.

- `createReader` in `HFile` takes an extra parameter, `primaryReplicaReader`. Following the updated `createReader` signature, I pass `true` for `primaryReplicaReader`.

An example `createReader` method from `HFile` in HBase 2.x is shown below:

```java
/**
 * Creates reader with cache configuration disabled
 * @param fs filesystem
 * @param path Path to file to read
 * @return an active Reader instance
 * @throws IOException Will throw a CorruptHFileException
 * (DoNotRetryIOException subtype) if hfile is corrupt/invalid.
 */
public static Reader createReader(FileSystem fs, Path path, Configuration conf)
    throws IOException {
  // The primaryReplicaReader is mainly used for constructing block cache key, so if we do not use
  // block cache then it is OK to set it as any value. We use true here.
  return createReader(fs, path, CacheConfig.DISABLED, true, conf);
}
```

I fixed the error

```
[ERROR] /root/hudi-0.9.0/hudi-common/src/main/java/org/apache/hudi/common/bootstrap/index/HFileBootstrapIndex.java:[181,34] no suitable method found for createReader(org.apache.hadoop.fs.FileSystem,org.apache.hudi.common.bootstrap.index.HFileBootstrapIndex.HFilePathForReader,org.apache.hadoop.hbase.io.hfile.CacheConfig,org.apache.hadoop.conf.Configuration)
```

by replacing lines 182-183 of `HFileBootstrapIndex` with:

```java
HFile.Reader reader = HFile.createReader(fileSystem, new HFilePathForReader(hFilePath),
    new CacheConfig(conf), true, conf);
```

I fixed the other errors of the same kind in the same way.

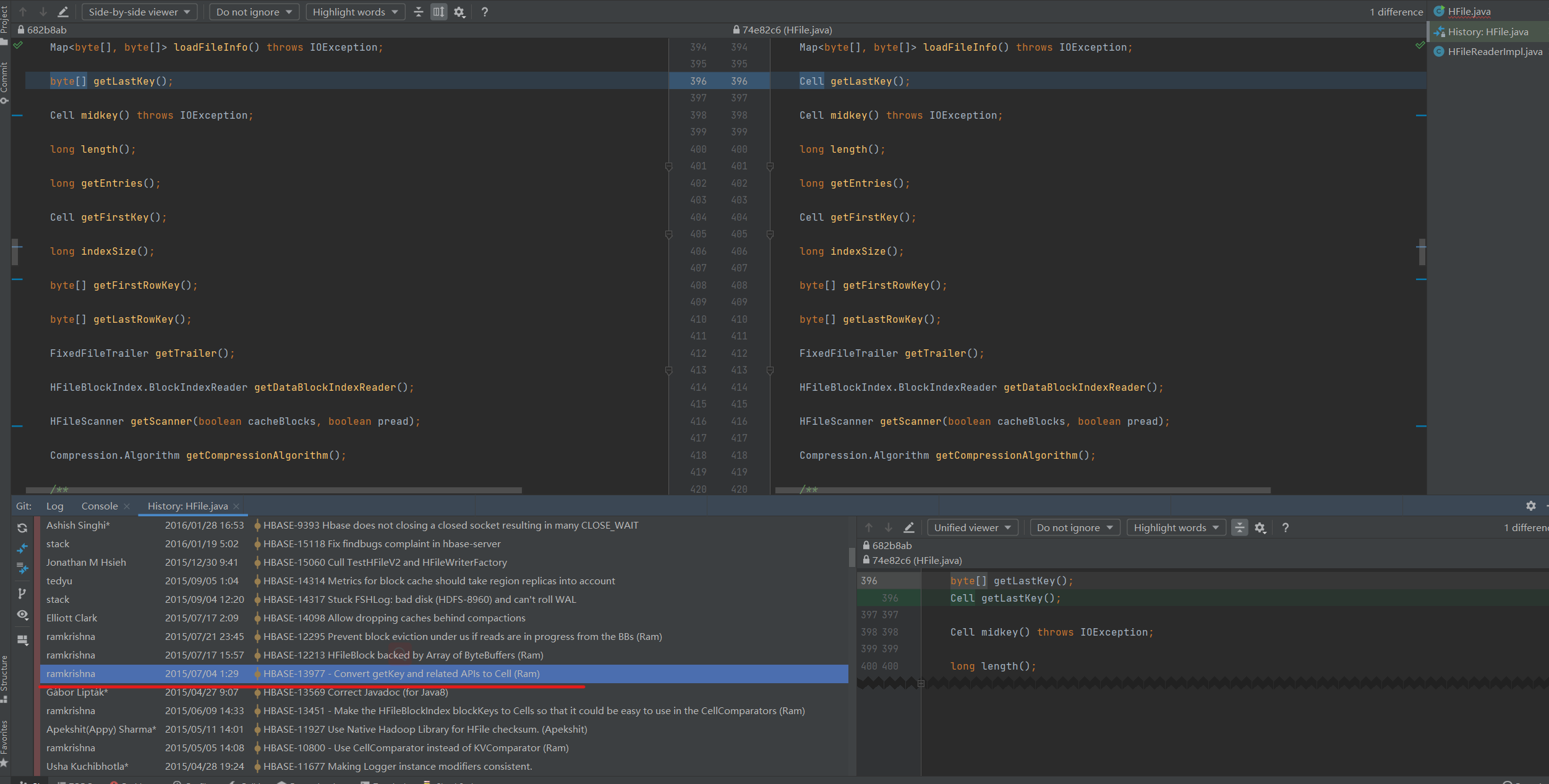

- `getKeyValue()` in `HFileScanner` has been replaced by `getCell()`.

I fixed the error

```
[ERROR] /root/hudi-0.9.0/hudi-common/src/main/java/org/apache/hudi/common/bootstrap/index/HFileBootstrapIndex.java:[309,93] cannot find symbol
[ERROR] symbol: method getKeyValue()
[ERROR] location: variable scanner of type org.apache.hadoop.hbase.io.hfile.HFileScanner
```

by replacing `getKeyValue()` with `getCell()`, that is, by replacing line 309 with:

```java
keys.add(converter.apply(getUserKeyFromCellKey(CellUtil.getCellKeyAsString(scanner.getCell()))));
```

I fixed the other errors of the same kind in the same way.

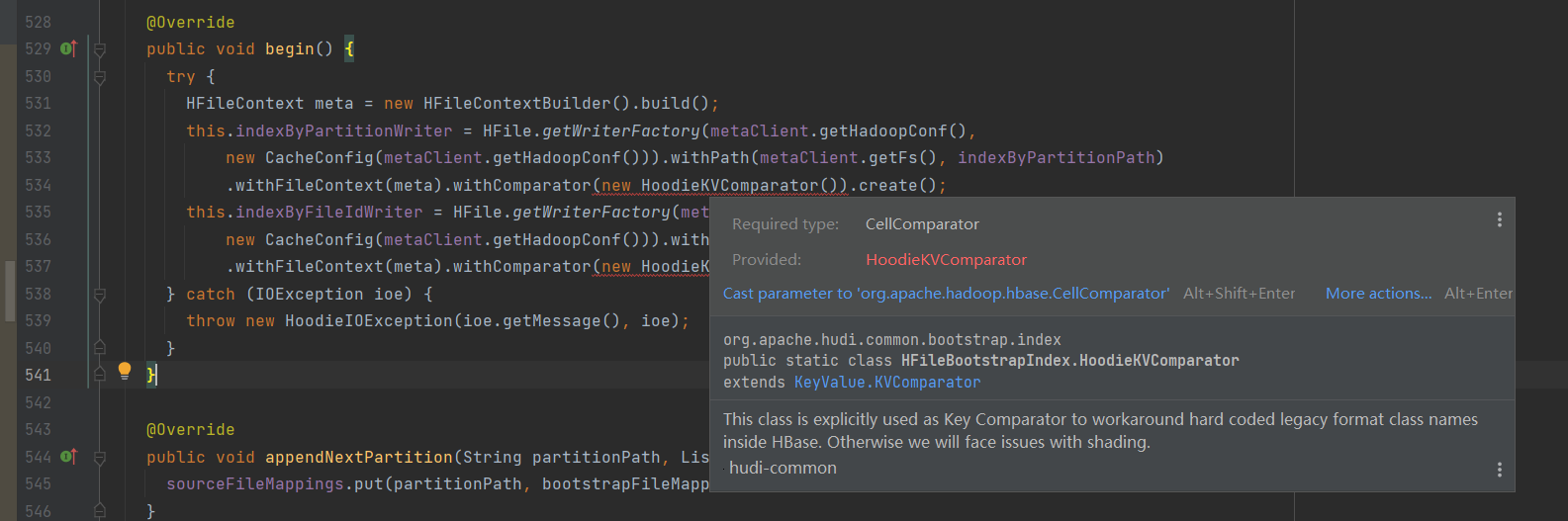

- `withComparator` in `HFile` now requires a `CellComparator`, but a `HoodieKVComparator` is passed in.

I fixed the error

```
[ERROR] /root/hudi-0.9.0/hudi-common/src/main/java/org/apache/hudi/common/bootstrap/index/HFileBootstrapIndex.java:[534,51] incompatible types: org.apache.hudi.common.bootstrap.index.HFileBootstrapIndex.HoodieKVComparator cannot be converted to org.apache.hadoop.hbase.CellComparator
```

by replacing lines 585-586 of `HFileBootstrapIndex` with:

```java
public static class HoodieKVComparator extends CellComparatorImpl {
}
```

I fixed the other errors of the same kind in the same way.

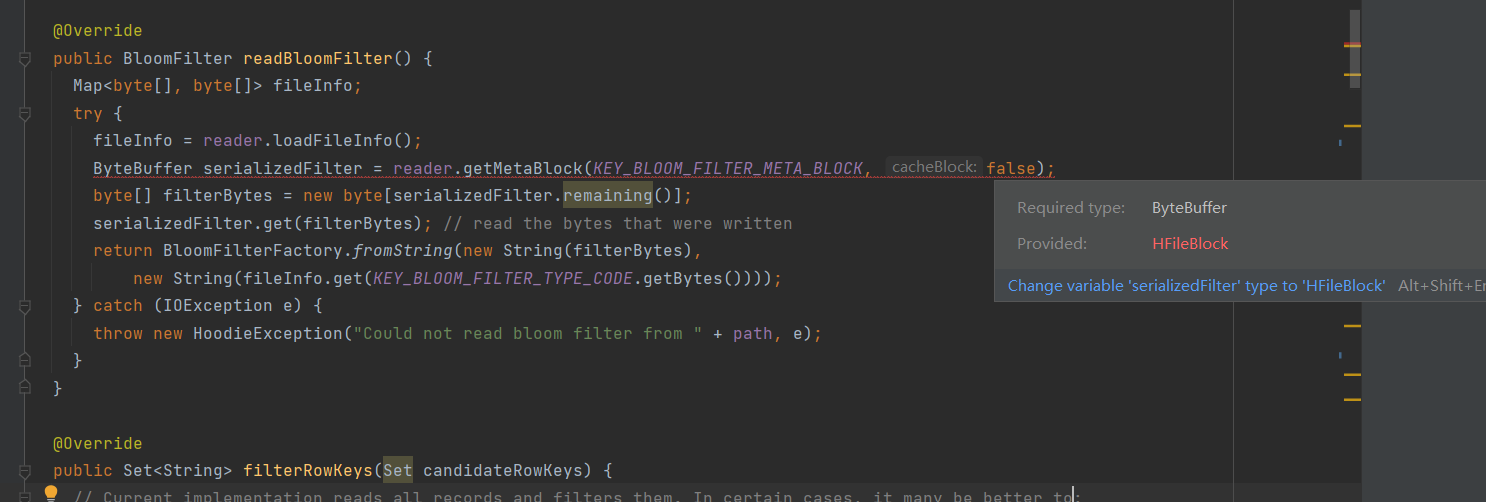

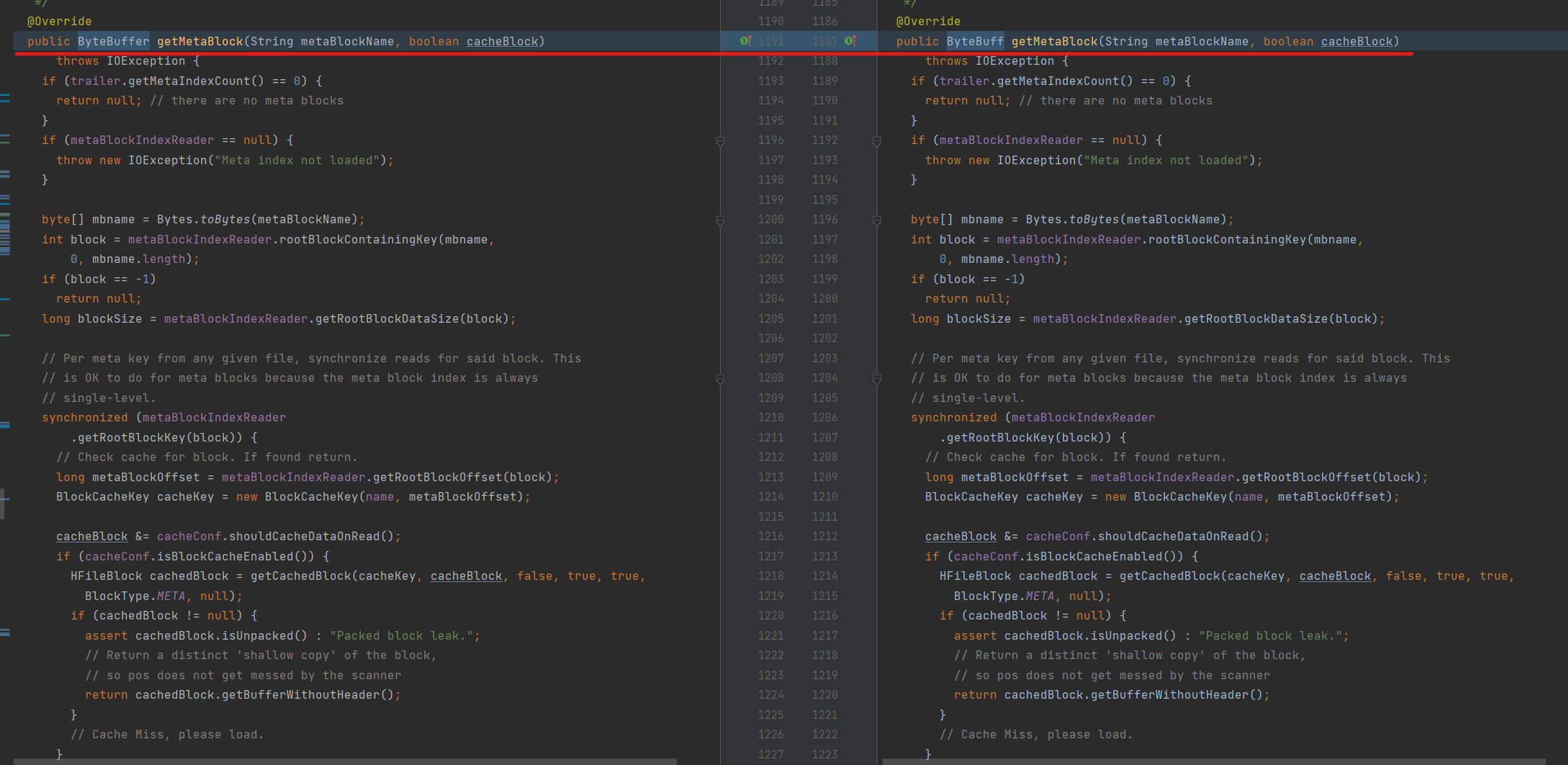

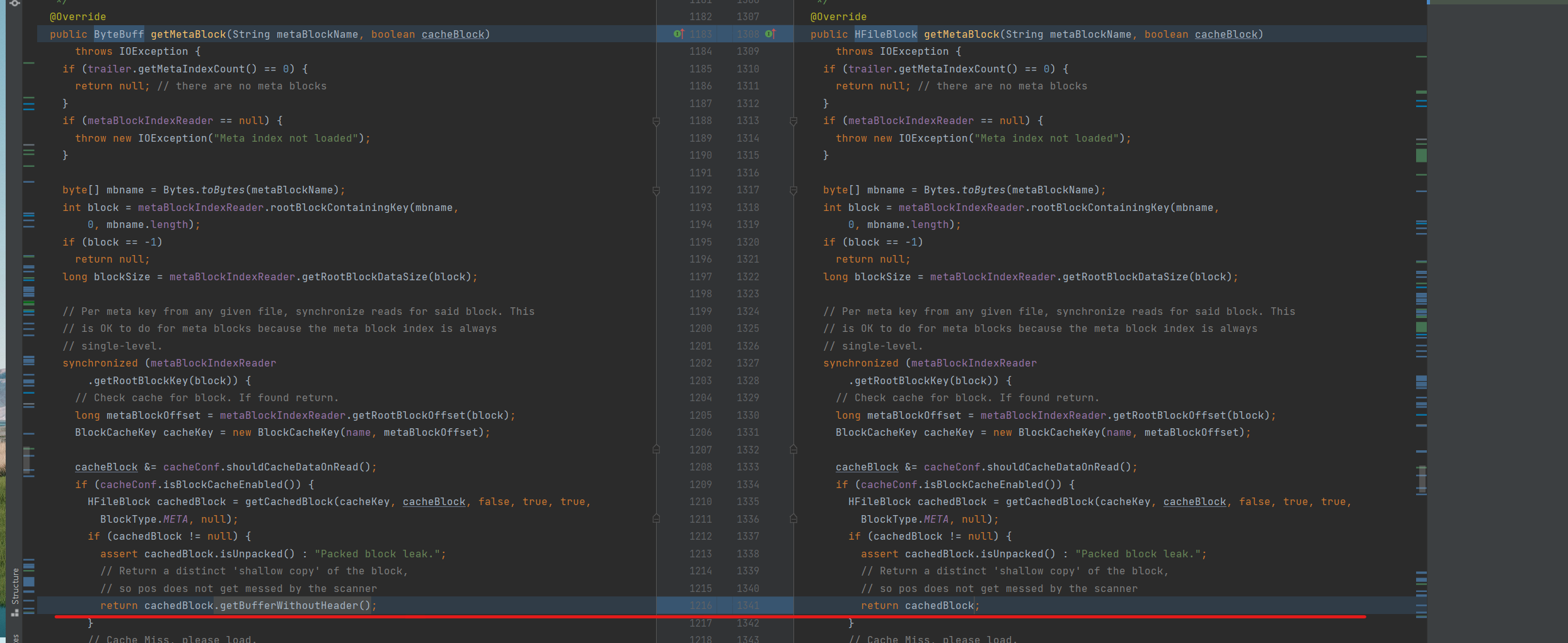

- The return type of `getMetaBlock` in `HFileReaderImpl` has changed: it now returns an `HFileBlock` instead of a `ByteBuffer`, so the code that reads the meta block has to be adapted.

I fixed the error

```
[ERROR] /root/hudi-0.9.0/hudi-common/src/main/java/org/apache/hudi/io/storage/HoodieHFileReader.java:[114,56] incompatible types: org.apache.hadoop.hbase.io.hfile.HFileBlock cannot be converted to java.nio.ByteBuffer
```

by replacing line 115 of `HoodieHFileReader` with:

```java
ByteBuff serializedFilter = reader.getMetaBlock(KEY_BLOOM_FILTER_META_BLOCK, false).getBufferWithoutHeader();
```

I fixed the other errors of the same kind in the same way.

After fixing the errors listed above, the code compiles successfully.
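The API differences above can also be checked directly against whatever HBase jar is on the classpath. The sketch below is a hypothetical helper (`HBaseApiProbe` is not part of Hudi or HBase) that uses plain Java reflection on class and method *names*, so it compiles without HBase. It reports whether `HFileScanner` exposes `getCell()` or `getKeyValue()`, whether the `createReader` overload with the extra `boolean` parameter exists, and what `HFile.Reader.getMetaBlock(String, boolean)` returns. The class and method names come from the compiler errors above; the expected HBase 2.x results (`getCell()` present, `getMetaBlock` returning `HFileBlock`) are inferred from those errors rather than checked separately.

```java
import java.lang.reflect.Method;
import java.util.Arrays;

// Hypothetical helper, not part of Hudi or HBase: inspects classes by name only,
// so it compiles and runs with or without HBase on the classpath.
final class HBaseApiProbe {

  /** Returns the public method with this name and these parameter type names, or null if absent. */
  public static Method findPublicMethod(String className, String methodName, String... paramTypeNames) {
    try {
      Class<?> clazz = Class.forName(className, false, HBaseApiProbe.class.getClassLoader());
      for (Method m : clazz.getMethods()) {
        if (!m.getName().equals(methodName)) {
          continue;
        }
        // Compare parameter types by name ("boolean" for primitives, FQCN otherwise).
        String[] actual = Arrays.stream(m.getParameterTypes()).map(Class::getName).toArray(String[]::new);
        if (Arrays.equals(actual, paramTypeNames)) {
          return m;
        }
      }
    } catch (ClassNotFoundException | LinkageError e) {
      // The class, or one of the classes its methods refer to, is not on the classpath.
    }
    return null;
  }

  public static boolean hasPublicMethod(String className, String methodName, String... paramTypeNames) {
    return findPublicMethod(className, methodName, paramTypeNames) != null;
  }

  public static void main(String[] args) {
    String pkg = "org.apache.hadoop.hbase.io.hfile.";
    // Expected on HBase 2.x: getCell() is present; getKeyValue() is the HBase 1.x name.
    System.out.println("HFileScanner.getCell():     " + hasPublicMethod(pkg + "HFileScanner", "getCell"));
    System.out.println("HFileScanner.getKeyValue(): " + hasPublicMethod(pkg + "HFileScanner", "getKeyValue"));
    // Overload with the extra boolean primaryReplicaReader parameter.
    System.out.println("HFile.createReader(fs, path, cacheConf, boolean, conf): "
        + hasPublicMethod(pkg + "HFile", "createReader",
            "org.apache.hadoop.fs.FileSystem", "org.apache.hadoop.fs.Path",
            pkg + "CacheConfig", "boolean", "org.apache.hadoop.conf.Configuration"));
    // Return type of HFile.Reader.getMetaBlock(String, boolean).
    Method getMetaBlock = findPublicMethod(pkg + "HFile$Reader", "getMetaBlock", "java.lang.String", "boolean");
    System.out.println("HFile.Reader.getMetaBlock returns: "
        + (getMetaBlock == null ? "<not found>" : getMetaBlock.getReturnType().getName()));
  }
}
```

Run it with the same classpath Maven uses for `hudi-common` (for example one printed by `mvn dependency:build-classpath`). Without HBase on the classpath every check reports `false` or `<not found>` instead of throwing.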

The tests do not pass

However, when I run the tests with:

```
mvn -Punit-tests test
```

other problems occur:

```
[INFO] Tests run: 2, Failures: 0, Errors: 0, Skipped: 0, Time elapsed: 3.467 s - in org.apache.hudi.execution.TestSparkBoundedInMemoryExecutor
[INFO]
[INFO] Results:
[INFO]
[ERROR] Failures:
[ERROR] TestHoodieRowCreateHandle.testInstantiationFailure:176 Should have thrown exception
[ERROR] Errors:
[ERROR] TestCleaner.testKeepLatestCommits:1130->generateBootstrapIndexAndSourceData:1258 » NoSuchMethod
[ERROR] TestCleaner.testKeepLatestFileVersions:650->generateBootstrapIndexAndSourceData:1258 » NoSuchMethod
[ERROR] TestHoodieMergeOnReadTable.testSimpleInsertAndUpdateHFile:232->insertRecords:1662 » HoodieUpsert
[ERROR] TestAsyncCompaction.testCompactionAfterTwoDeltaCommits:304->CompactionTestBase.runNextDeltaCommits:127 » HoodieMetadata
[ERROR] TestAsyncCompaction.testCompactionOnReplacedFiles:356->CompactionTestBase.runNextDeltaCommits:127 » HoodieMetadata
[ERROR] TestAsyncCompaction.testInflightCompaction:176->CompactionTestBase.runNextDeltaCommits:127 » HoodieMetadata
[ERROR] TestAsyncCompaction.testInterleavedCompaction:328->CompactionTestBase.runNextDeltaCommits:127 » HoodieMetadata
[ERROR] TestAsyncCompaction.testRollbackForInflightCompaction:72->CompactionTestBase.runNextDeltaCommits:131 » HoodieMetadata
[ERROR] TestAsyncCompaction.testRollbackInflightIngestionWithPendingCompaction:126->CompactionTestBase.runNextDeltaCommits:131 » HoodieMetadata
[ERROR] TestAsyncCompaction.testScheduleCompactionAfterPendingIngestion:239->CompactionTestBase.runNextDeltaCommits:131 » HoodieMetadata
[ERROR] TestAsyncCompaction.testScheduleCompactionWithOlderOrSameTimestamp:270->CompactionTestBase.runNextDeltaCommits:131 » HoodieMetadata
[ERROR] TestAsyncCompaction.testScheduleIngestionBeforePendingCompaction:208->CompactionTestBase.runNextDeltaCommits:131 » HoodieMetadata
[INFO]
[ERROR] Tests run: 358, Failures: 1, Errors: 12, Skipped: 0
[INFO]
[INFO] ------------------------------------------------------------------------
[INFO] Reactor Summary:
[INFO]
[INFO] hudi-spark-client 0.9.0 ............................ FAILURE [39:17 min]
[INFO] hudi-sync-common ................................... SKIPPED
[INFO] hudi-hive-sync ..................................... SKIPPED
[INFO] hudi-spark-datasource .............................. SKIPPED
[INFO] hudi-spark-common_2.11 ............................. SKIPPED
[INFO] hudi-spark2_2.11 ................................... SKIPPED
[INFO] hudi-spark_2.11 .................................... SKIPPED
[INFO] hudi-utilities_2.11 ................................ SKIPPED
[INFO] hudi-utilities-bundle_2.11 ......................... SKIPPED
[INFO] hudi-cli ........................................... SKIPPED
[INFO] hudi-java-client ................................... SKIPPED
[INFO] hudi-flink-client .................................. SKIPPED
[INFO] hudi-spark3_2.12 ................................... SKIPPED
[INFO] hudi-dla-sync ...................................... SKIPPED
[INFO] hudi-sync .......................................... SKIPPED
[INFO] hudi-hadoop-mr-bundle .............................. SKIPPED
[INFO] hudi-hive-sync-bundle .............................. SKIPPED
[INFO] hudi-spark-bundle_2.11 ............................. SKIPPED
[INFO] hudi-presto-bundle ................................. SKIPPED
[INFO] hudi-timeline-server-bundle ........................ SKIPPED
[INFO] hudi-hadoop-docker ................................. SKIPPED
[INFO] hudi-hadoop-base-docker ............................ SKIPPED
[INFO] hudi-hadoop-namenode-docker ........................ SKIPPED
[INFO] hudi-hadoop-datanode-docker ........................ SKIPPED
[INFO] hudi-hadoop-history-docker ......................... SKIPPED
[INFO] hudi-hadoop-hive-docker ............................ SKIPPED
[INFO] hudi-hadoop-sparkbase-docker ....................... SKIPPED
[INFO] hudi-hadoop-sparkmaster-docker ..................... SKIPPED
[INFO] hudi-hadoop-sparkworker-docker ..................... SKIPPED
[INFO] hudi-hadoop-sparkadhoc-docker ...................... SKIPPED
[INFO] hudi-hadoop-presto-docker .......................... SKIPPED
[INFO] hudi-integ-test .................................... SKIPPED
[INFO] hudi-integ-test-bundle ............................. SKIPPED
[INFO] hudi-examples ...................................... SKIPPED
[INFO] hudi-flink_2.11 .................................... SKIPPED
[INFO] hudi-flink-bundle_2.11 0.9.0 ....................... SKIPPED
[INFO] ------------------------------------------------------------------------
[INFO] BUILD FAILURE
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 39:18 min
[INFO] Finished at: 2021-09-08T17:19:14+08:00
[INFO] ------------------------------------------------------------------------
[ERROR] Failed to execute goal org.apache.maven.plugins:maven-surefire-plugin:3.0.0-M4:test (default-test) on project hudi-spark-client: There are test failures.
[ERROR]
[ERROR] Please refer to /root/xzc/hudi/hudi/hudi-client/hudi-spark-client/target/surefire-reports for the individual test results.
[ERROR] Please refer to dump files (if any exist) [date].dump, [date]-jvmRun[N].dump and [date].dumpstream.
[ERROR] -> [Help 1]
[ERROR]
[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.
[ERROR] Re-run Maven using the -X switch to enable full debug logging.
[ERROR]
[ERROR] For more information about the errors and possible solutions, please read the following articles:
[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/MojoFailureException
```

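In the test run, both `TestCleaner` cases fail inside `generateBootstrapIndexAndSourceData` with `NoSuchMethod`, which Surefire abbreviates and is most likely a `NoSuchMethodError`. An error like that after a clean compile usually means a class found at run time is not the one the code was compiled against. One possible cause, which the log does not confirm, is an HBase 1.x class still reaching the test classpath, for example through another dependency that bundles HBase classes. The hypothetical `ClassOrigin` helper below (not part of Hudi or HBase) prints the jar each listed class is loaded from; the class names it checks are an assumption and can be swapped for whatever the full stack trace in `surefire-reports` points at.

```java
import java.security.CodeSource;

// Hypothetical diagnostic, not part of Hudi or HBase: reports where a class is loaded from,
// to spot a jar that shadows the HBase version the code was compiled against.
final class ClassOrigin {

  /** Returns the jar or directory that defines className, or a short reason if unknown. */
  public static String locate(String className) {
    try {
      Class<?> clazz = Class.forName(className, false, ClassOrigin.class.getClassLoader());
      CodeSource source = clazz.getProtectionDomain().getCodeSource();
      if (source == null || source.getLocation() == null) {
        return "<bootstrap or unknown>"; // JDK core classes have no code source
      }
      return source.getLocation().toString();
    } catch (ClassNotFoundException | LinkageError e) {
      return "<not on classpath>";
    }
  }

  public static void main(String[] args) {
    // Assumed classes of interest, taken from the compile fixes above.
    String[] classes = {
        "org.apache.hadoop.hbase.io.hfile.HFile",
        "org.apache.hadoop.hbase.io.hfile.HFileScanner",
        "org.apache.hadoop.hbase.CellComparatorImpl"
    };
    for (String name : classes) {
      System.out.println(name + " -> " + locate(name));
    }
  }
}
```

If the same class resolves to a jar other than the HBase 2.2.6 artifact when run with the test classpath, that jar is a likely place to look; `mvn dependency:tree` can then show which dependency brings it in.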