-

Notifications

You must be signed in to change notification settings - Fork 5.9k

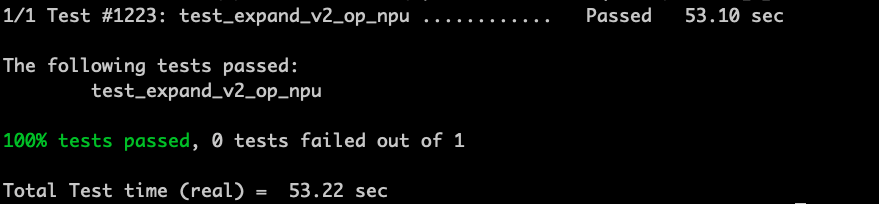

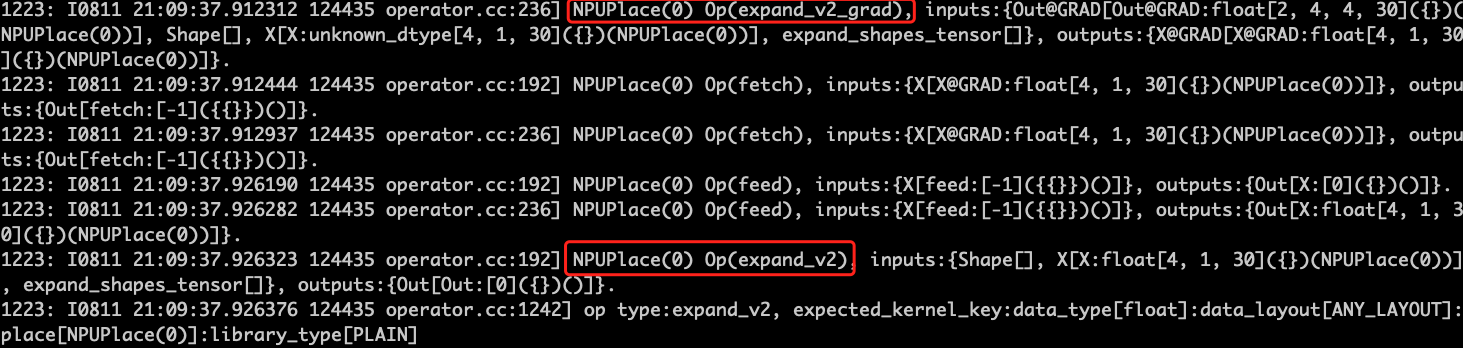

[NPU] Support npu op expand_v2 and expand_v2_grad #34764

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Thanks for your contribution! |

yaoxuefeng6

left a comment

yaoxuefeng6

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM

|

|

||

| #include "paddle/fluid/operators/expand_v2_op.h" | ||

| #include <memory> | ||

| #include <string> |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

14-15两行头文件不需要

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

已删除

| namespace paddle { | ||

| namespace operators { | ||

|

|

||

| inline std::vector<int> get_expand_shape_npu( |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

参考 https://github.com/PaddlePaddle/Paddle/blob/develop/paddle/fluid/operators/expand_op.h 中 get_expand_times 的写法,直接修改 expand_v2_op.h中get_expand_shape的方法,增加platform::is_npu_place的功能实现即可,不需要重写整个函数。

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

已在expand_v2_op.h中添加platform::is_npu_place,expand_v2_op_npu.cc中取消get_expand_shape_npu函数

| "The %uth element of 'shape' for expand_v2_npu op must be " | ||

| "greater than 0, but the value given is %d.", | ||

| i, expand_shape[i])); | ||

| } |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

需要结合TestExpandV2OpRank4进行代码调整。

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

expand_v2_op_npu.cc中添加对expand_shape的再构造,考虑三种情况 1) expand_shape比input_dim维度要大的情况 2) expand_shape[i]维度大于0的情况 3) expand_shape[i]等于-1的情况

| # def init_data(self): | ||

| # self.ori_shape = (2, 4, 5, 7) | ||

| # self.shape = (-1, -1, -1, -1) | ||

| # self.expand_times = (1, 1, 1, 1) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

确认下,在CPU/CUDA代码中,对于self.shape = (-1, -1, -1, -1)的情况时如何处理的?需要看下expand_v2_op.h的CPU计算逻辑,应该最后可以得到一个正值的out_dims的结果,之后看下是否可以通过NPU的ExpandD或者BoradCastD等算子进行实现。

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

已添加三个shape中有-1情况的单测用例,单测已通过

qili93

left a comment

qili93

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM

zhiqiu

left a comment

zhiqiu

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM

|

|

||

| /* Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved. | ||

| Licensed under the Apache License, Version 2.0 (the "License"); | ||

| you may not use this file except in compliance with the License. | ||

| You may obtain a copy of the License at | ||

| http://www.apache.org/licenses/LICENSE-2.0 | ||

| Unless required by applicable law or agreed to in writing, software | ||

| distributed under the License is distributed on an "AS IS" BASIS, | ||

| WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | ||

| See the License for the specific language governing permissions and | ||

| limitations under the License. */ |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Plz check the copyright format.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

reformat the copyright done.

|

|

||

| REGISTER_OP_NPU_KERNEL( | ||

| expand_v2_grad, | ||

| ops::ExpandV2NPUGradKernel<paddle::platform::NPUDeviceContext, float>, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Seems missing fp16 kernel for expand_grad

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

add fp16 kernel support for expand_grad

| for (auto i = 0; i < reduce_ndim; ++i) { | ||

| axes.push_back(i); | ||

| } | ||

| Tensor* tmp_dout = const_cast<Tensor*>(dout); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Avoid const_cast by using SharedDataWith, see dropout_op_npu.cc:L61 for details.

zhiqiu

left a comment

zhiqiu

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM for ShareDataWith

PR types

New features

PR changes

OPs

Describe

Add NPU kernel for expand_v2 and expand_v2_grad