
Bug 1320977 - performance tweaks #678



@purelogiq can you review this?

purelogiq

left a comment


Nice

    use Bugzilla::Util ();
    use Bugzilla::RNG ();
    use Bugzilla::ModPerl ();
    use Mojo::Loader qw(find_modules);


Interesting use of mojo in a not-yet-mojo app :)

@dylanwh I'll verify the profiling numbers on Monday; I missed your comment there.

Cool, I'll wait to merge. If it's not fast enough-er I can find another slow part.

@dylanwh First off, awesome stuff with the flamegraphs and profiling. When I compared the profile on master vs. this branch I saw that

Video on Google Drive: https://drive.google.com/file/d/194cHcyvlZpyP5JvXUr1eyY42tCmp0DlA/view

Are you restarting httpd between tests? But actually, looking at the video, this is mostly expected -- the difference disappears after the first request because the optimization here is just loading some modules earlier. However, "first requests" are quite common, because the cost is per worker process, there are hundreds of those, and they get restarted somewhat frequently.

Looks like the remaining balance of time is in using Safe->reval(). I wanted to fix that in #321 but it's a little tricky.
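For readers who want to see the cost being discussed, here is a minimal sketch of timing a `Safe->reval()` round trip. It assumes the serialized data is `Data::Dumper` output in the default `$VAR1 = ...;` form; the `%schema` structure, its keys, and its size are hypothetical stand-ins, not the real contents of DB/Schema.pm.

```perl
use strict;
use warnings;
use Safe;
use Data::Dumper;
use Time::HiRes qw(time);

# Hypothetical stand-in for a large cached data structure.
my %schema = map { ("table_$_" => { FIELDS => [ id => { TYPE => 'INT3' } ] }) } 1 .. 200;

# Serialize in Data::Dumper's default form: "$VAR1 = {...};"
local $Data::Dumper::Sortkeys = 1;
my $serialized = Dumper(\%schema);

# Evaluate the string inside a restricted compartment and time it.
my $cpt   = Safe->new;
my $start = time;
$cpt->reval($serialized);
die "reval failed: $@" if $@;
my $elapsed = time - $start;

# The assignment happened inside the compartment's namespace,
# so fetch $VAR1 through the compartment's glob.
my $restored = ${ $cpt->varglob('VAR1') };

printf "reval of %d bytes took %.4fs\n", length $serialized, $elapsed;
```

The absolute number depends on the machine and the size of the structure, so it is only useful for comparing against an alternative deserialization path on the same data.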

@purelogiq I don't want to check now because I really want to get this test case posted, but tomorrow can you try benchmarking

@dylanwh Retested and still getting the same results :/

Changing $cpt->reval($serialized) in DB/Schema.pm as below and removing the Safe import results in a pretty good speedup:

BEFORE:

AFTER:

@dylanwh I'm still good on r+ing this, because I've been testing post-warmup and the pre-warmup speed improvement you mentioned may be worth it.

Yup, I'll take it, and prioritize #321 later.

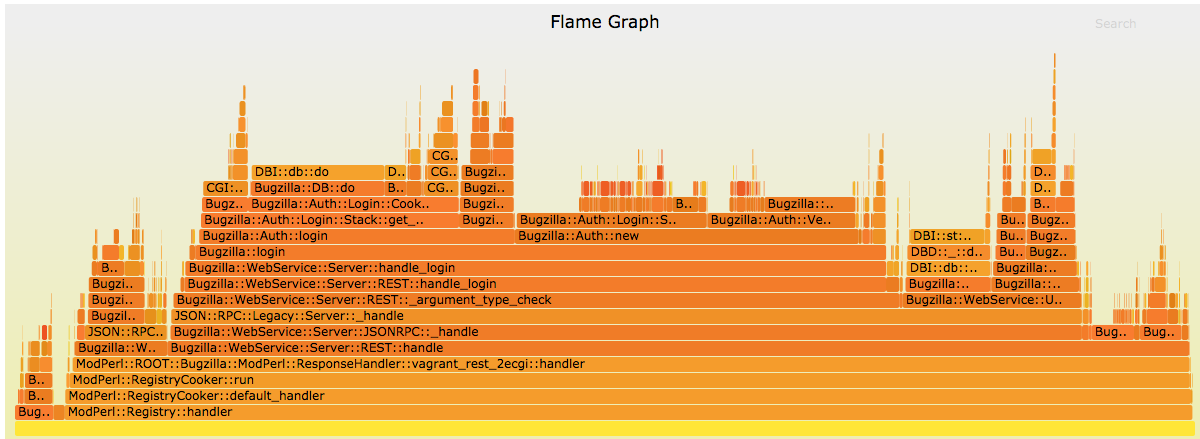

In #672 performance regressed a bit, so let's win that back here.

The theme of this change is to load Bugzilla classes earlier. It seems to save about 40 ms per API call.
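The idea of loading classes earlier can be illustrated with a small sketch. This is not the code from this change: the diff imports `find_modules` from Mojo::Loader to discover classes, whereas the sketch below uses a fixed, hypothetical list of core modules as stand-ins so that it runs without extra dependencies. The helper name `load_class` is also an assumption.

```perl
use strict;
use warnings;
use Time::HiRes qw(time);

# Hypothetical stand-ins for application classes; core modules are
# used so the sketch runs anywhere.
my @classes = qw(File::Temp Data::Dumper List::Util);

# Load a class by name and return how long the require took.
sub load_class {
    my ($class) = @_;
    (my $file = "$class.pm") =~ s{::}{/}g;
    my $start = time;
    require $file;    # a no-op when $file is already in %INC
    return time - $start;
}

# Startup phase, e.g. in the parent process before workers are forked.
my %startup_cost = map { $_ => load_class($_) } @classes;

# Request phase: the same classes are already compiled, so the
# require calls return almost immediately.
my %request_cost = map { $_ => load_class($_) } @classes;

printf "%-14s startup %.6fs  request %.6fs\n",
    $_, $startup_cost{$_}, $request_cost{$_}
    for @classes;
```

Paying the compile cost once at startup moves it out of the first request each worker process serves, which is where the discussion above expects the saving to show up.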

Before:

After: