diff --git a/CHANGELOG.md b/CHANGELOG.md

index 6f97b9c2c..97f83ec75 100644

--- a/CHANGELOG.md

+++ b/CHANGELOG.md

@@ -14,7 +14,19 @@ The `Unreleased` section name is replaced by the expected version of next releas

- `Cosmos`: Reorganize Sync log message text, merge with Sync Conflict message [#241](https://github.com/jet/equinox/pull/241)

- `Cosmos`: Converge Stored Procedure Impl with `tip-isa-batch` impl from V3 (minor Request Charges cost reduction) [#242](https://github.com/jet/equinox/pull/242)

-- target `Microsoft.Azure.Cosmos` v `3.9.0` (instead of `Microsoft.Azure.DocumentDB`[`.Core`] v 2.x) [#144](https://github.com/jet/equinox/pull/144)

+- Fork `Equinox.Cosmos` to `Equinox.CosmosStore`

+ - target `Microsoft.Azure.Cosmos` v `3.9.0` (instead of `Microsoft.Azure.DocumentDB`[`.Core`] v 2.x) [#144](https://github.com/jet/equinox/pull/144)

+ - Removed [warmup call](https://github.com/Azure/azure-cosmos-dotnet-v3/issues/1436)

+ - Rename `Equinox.Cosmos` DLL and namespace to `Equinox.CosmosStore` [#243](https://github.com/jet/equinox/pull/243)

+ - Rename `Equinox.Cosmos.Store` -> `Equinox.CosmosStore.Core`

+ - Renames within the `Core` sub-namespace:

+ - Rename `Equinox.Cosmos.Core.Context` -> `Equinox.CosmosStore.Core.EventsContext`

+ - Change `Equinox.Cosmos.Core.Connection` -> `Equinox.CosmosStore.Core.RetryPolicy`

+ - Rename `Equinox.Cosmos.Core.Gateway` -> `Equinox.CosmosStore.Core.StoreClient`

+ - Rename `Equinox.Cosmos.Containers` -> `Equinox.CosmosStore.CosmosStoreConnection`

+ - Rename `Equinox.Cosmos.Context` -> `Equinox.CosmosStore.CosmosStoreContext`

+ - Rename `Equinox.Cosmos.Resolver` -> `Equinox.CosmosStore.CosmosStoreCategory`

+ - Rename `Equinox.Cosmos.Connector` -> `Equinox.CosmosStore.CosmosStoreClientFactory`

- target `EventStore.Client` v `20.6` (instead of v `5.0.x`) [#224](https://github.com/jet/equinox/pull/224)

- Retarget `netcoreapp2.1` apps to `netcoreapp3.1` with `SystemTextJson`

- Retarget Todobackend to `aspnetcore` v `3.1`

@@ -24,7 +36,6 @@ The `Unreleased` section name is replaced by the expected version of next releas

- Update to `3.1.101` SDK

- Remove `module Commands` convention from in examples

- Revise semantics of Cart Sample Command handling

-- `Cosmos:` Removed [warmup call](https://github.com/Azure/azure-cosmos-dotnet-v3/issues/1436)

- Simplify `AsyncCacheCell` [#229](https://github.com/jet/equinox/pull/229)

### Removed

diff --git a/DOCUMENTATION.md b/DOCUMENTATION.md

index f9e88d709..39fcad83e 100755

--- a/DOCUMENTATION.md

+++ b/DOCUMENTATION.md

@@ -164,23 +164,23 @@ slightly differently:

-# Equinox.Cosmos

+# Equinox.CosmosStore

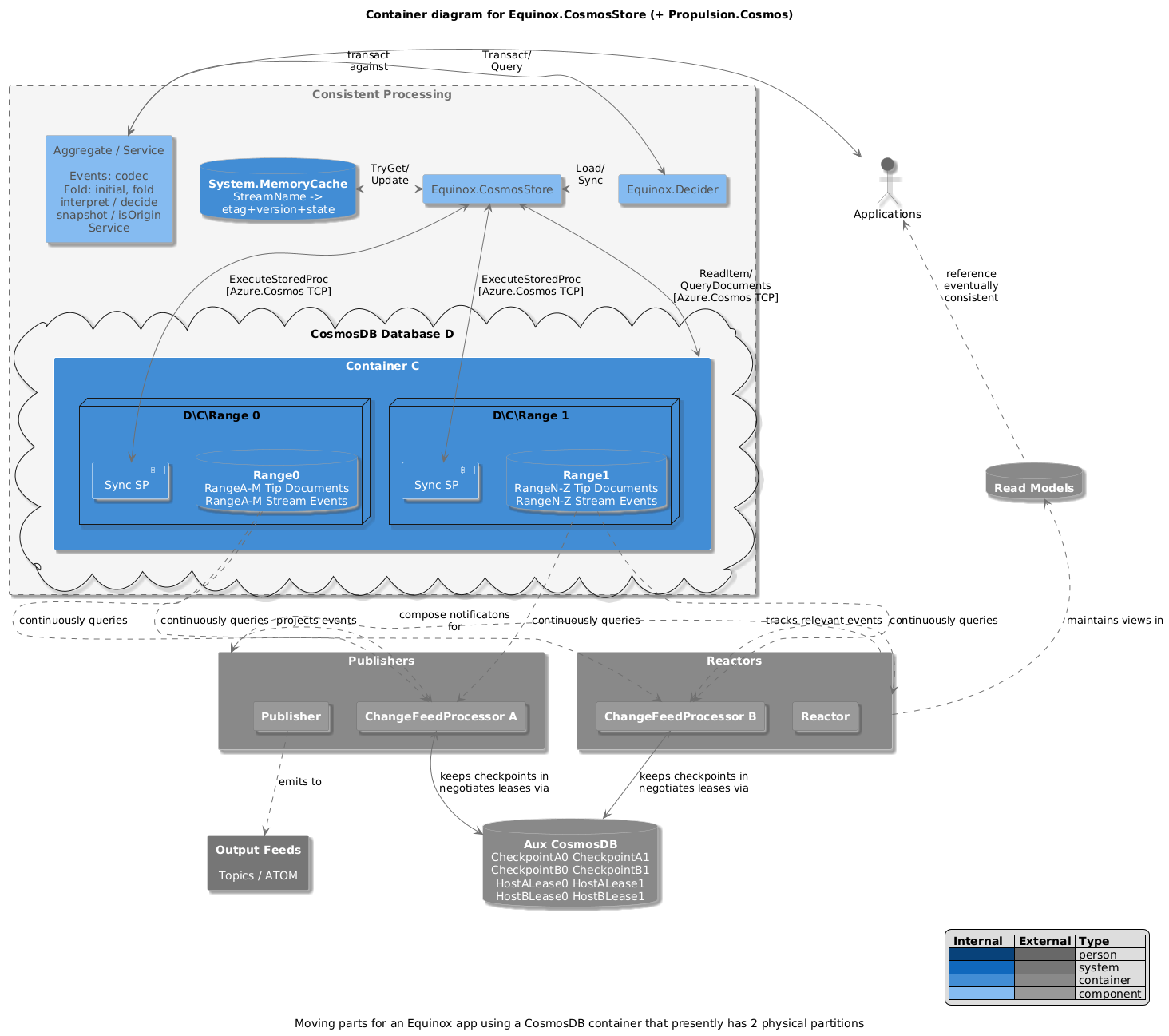

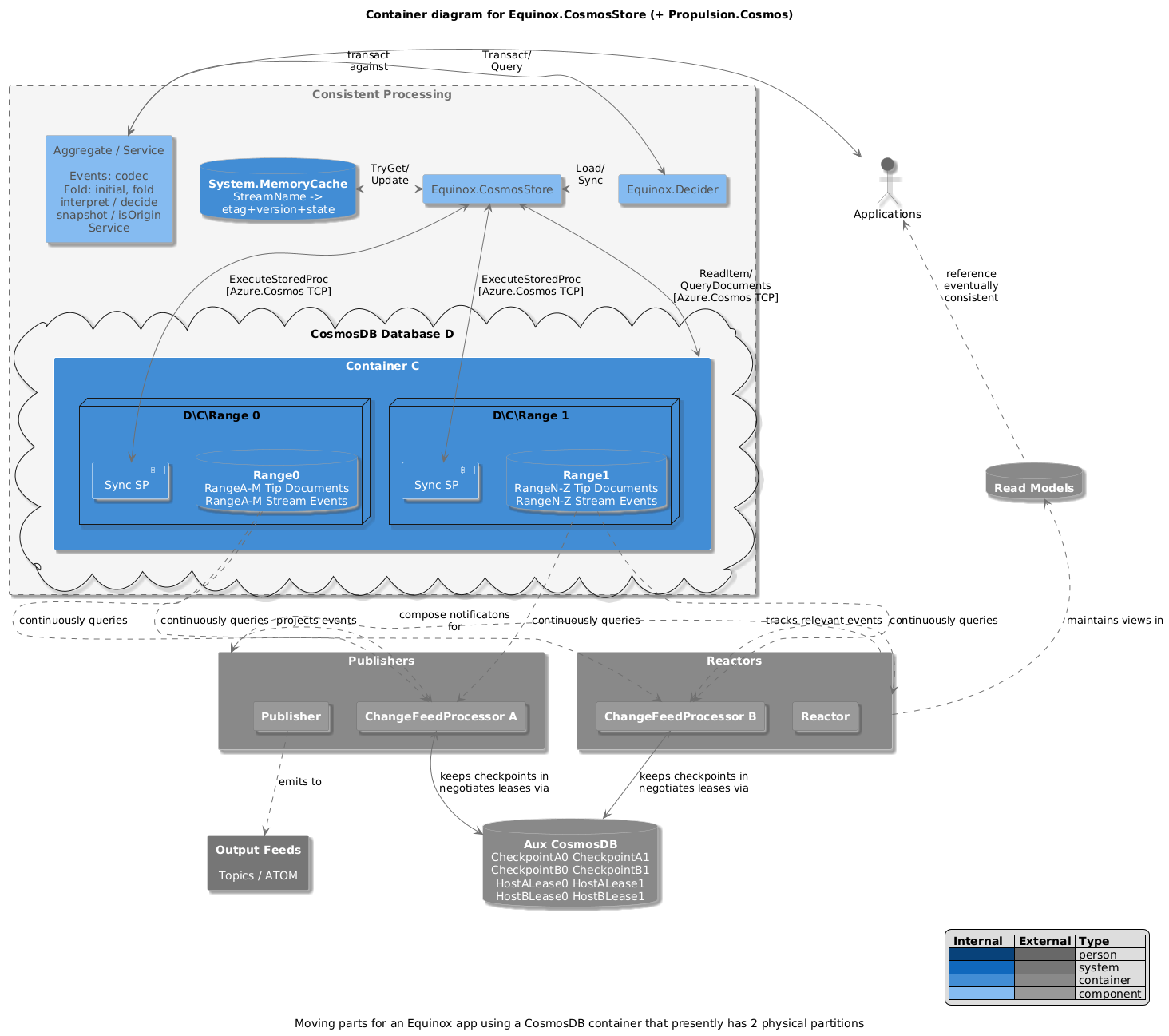

-## Container Diagram for `Equinox.Cosmos`

+## Container Diagram for `Equinox.CosmosStore`

-

+

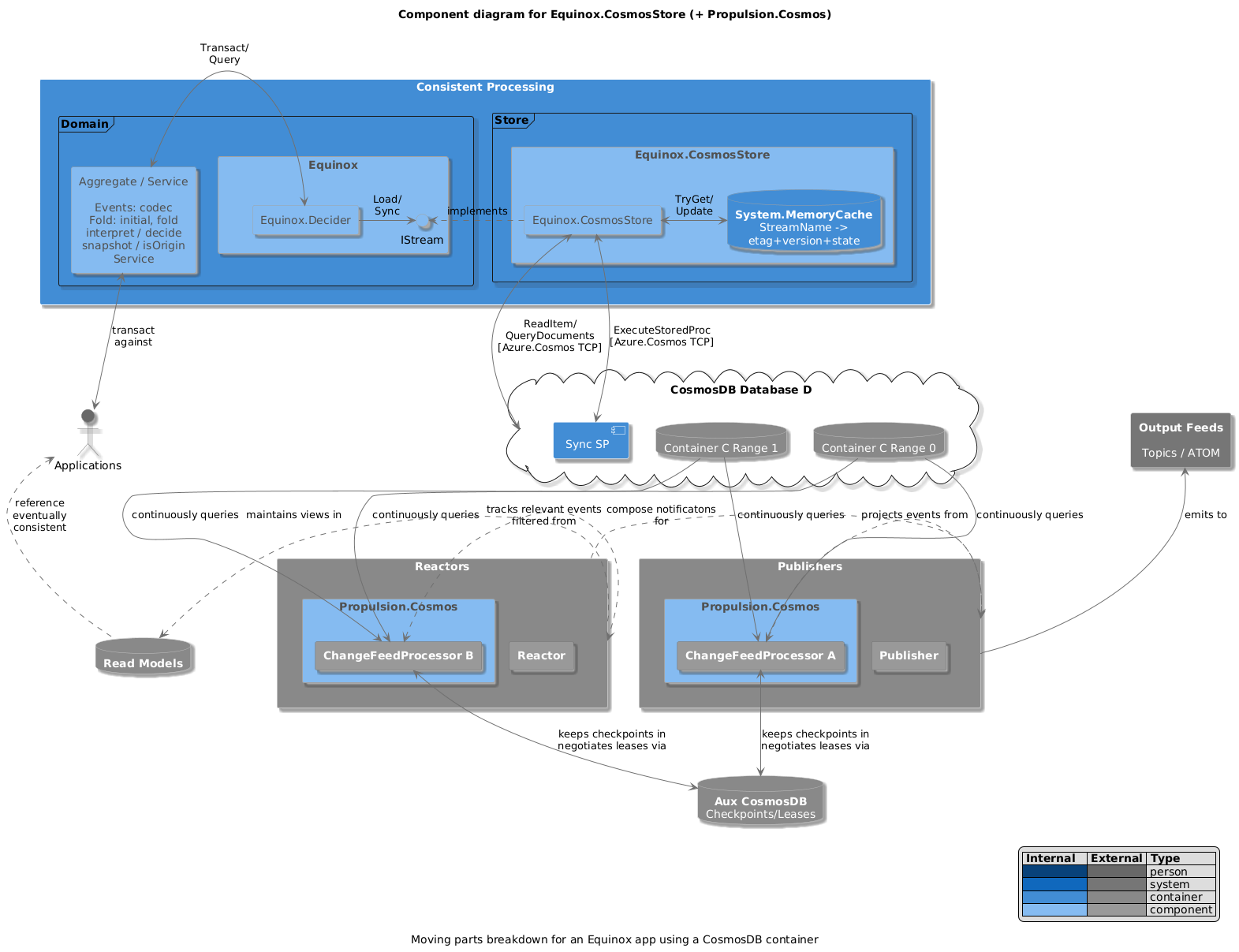

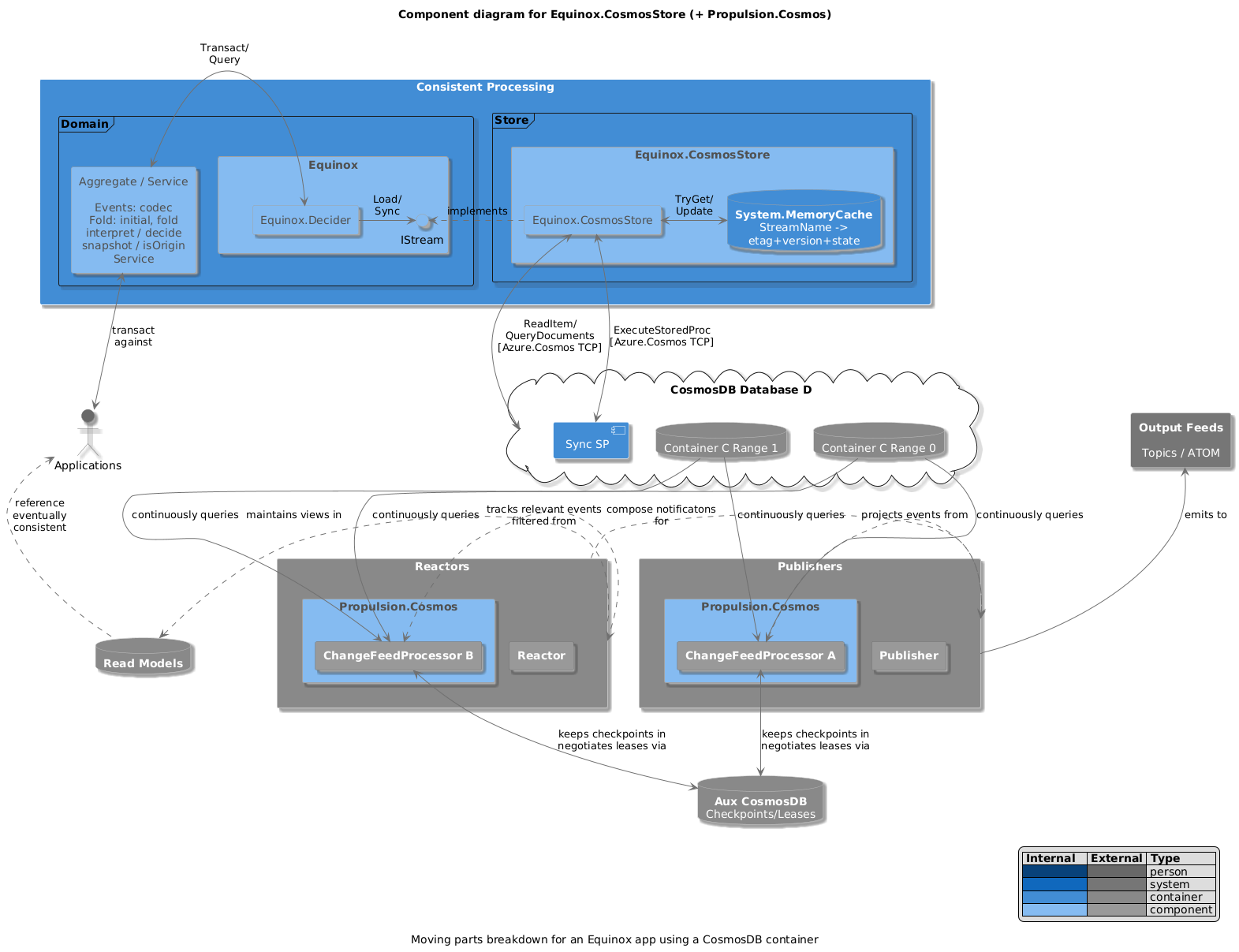

-## Component Diagram for `Equinox.Cosmos`

+## Component Diagram for `Equinox.CosmosStore`

-

+

-## Code Diagrams for `Equinox.Cosmos`

+## Code Diagrams for `Equinox.CosmosStore`

This diagram walks through the basic sequence of operations, where:

- this node has not yet read this stream (i.e. there's nothing in the Cache)

- when we do read it, the Read call returns `404` (with a charge of `1 RU`)

-

+

Next, we extend the scenario to show:

- how state held in the Cache influences the Cosmos APIs used

@@ -194,12 +194,12 @@ Next, we extend the scenario to show:

- when there's conflict and we're giving up (throw

`MaxAttemptsExceededException`)

-

+

After the write, we circle back to illustrate the effect of the caching when we

have correct state (we get a `304 Not Mofified` and pay only `1 RU`)

-

+

In other processes (when a cache is not fully in sync), the sequence runs

slightly differently:

@@ -208,7 +208,7 @@ slightly differently:

suitable snapshot that passes the `isOrigin` predicate is found within the

_Tip_

-

+

# Glossary

@@ -410,12 +410,12 @@ module EventStore =

module Cosmos =

let accessStrategy =

- Equinox.Cosmos.AccessStrategy.Snapshot (Fold.isOrigin, Fold.snapshot)

+ Equinox.CosmosStore.AccessStrategy.Snapshot (Fold.isOrigin, Fold.snapshot)

let create (context, cache) =

let cacheStrategy =

- Equinox.Cosmos.CachingStrategy.SlidingWindow (cache, System.TimeSpan.FromMinutes 20.)

+ Equinox.CosmosStore.CachingStrategy.SlidingWindow (cache, System.TimeSpan.FromMinutes 20.)

let resolver =

- Equinox.Cosmos.Resolver(context, Events.codec, Fold.fold, Fold.initial, cacheStrategy, accessStrategy)

+ Equinox.CosmosStore.CosmosStoreCategory(context, Events.codec, Fold.fold, Fold.initial, cacheStrategy, accessStrategy)

create resolver.Resolve

```

@@ -1558,12 +1558,12 @@ not reading data redundantly, and not feeding back into the oneself (although

having separate roundtrips obviously has implications).

-# `Equinox.Cosmos` CosmosDB Storage Model

+# `Equinox.CosmosStore` CosmosDB Storage Model

-This article provides a walkthrough of how `Equinox.Cosmos` encodes, writes and

+This article provides a walkthrough of how `Equinox.CosmosStore` encodes, writes and

reads records from a stream under its control.

-The code (see [source](src/Equinox.Cosmos/Cosmos.fs#L6)) contains lots of

+The code (see [source](src/Equinox.CosmosStore/CosmosStore.fs#L6)) contains lots of

comments and is intended to be read - this just provides some background.

## Batches

@@ -1648,7 +1648,7 @@ basic elements

- Unfolds - the term `unfold` is based on the well known 'standard' FP function

of that name, bearing the signature `'state -> 'event seq`. **=> For

- `Equinox.Cosmos`, one might say `unfold` yields _projection_ s as _event_ s

+ `Equinox.CosmosStore`, one might say `unfold` yields _projection_ s as _event_ s

to _snapshot_ the _state_ as at that _position_ in the _stream_**.

## Generating and saving `unfold`ed events

@@ -1742,9 +1742,9 @@ based on the events presented.

This covers what the most complete possible implementation of the JS Stored

Procedure (see

[source](https://github.com/jet/equinox/blob/tip-isa-batch/src/Equinox.Cosmos/Cosmos.fs#L302))

does when presented with a batch to be written. (NB The present implementation

-is slightly simplified; see [source](src/Equinox.Cosmos/Cosmos.fs#L303).

+is slightly simplified; see [source](src/Equinox.CosmosStore/CosmosStore.fs#L404).)

The `sync` stored procedure takes as input, a document that is almost identical

to the format of the _`Tip`_ batch (in fact, if the stream is found to be

@@ -1780,9 +1780,9 @@ stream). The request includes the following elements:

retrying in the case of conflict, _without any events being written per state

change_)

-## Equinox.Cosmos.Core.Events

+## Equinox.CosmosStore.Core.Events

-The `Equinox.Cosmos.Core` namespace provides a lower level API that can be used

+The `Equinox.CosmosStore.Core` namespace provides a lower level API that can be used

to manipulate events stored within a Azure CosmosDb using optimized native

access patterns.

@@ -1807,7 +1807,7 @@ following key benefits:

```fsharp

-open Equinox.Cosmos.Core

+open Equinox.CosmosStore.Core

// open MyCodecs.Json // example of using specific codec which can yield UTF-8

// byte arrays from a type using `Json.toBytes` via Fleece

// or similar

@@ -1818,7 +1818,7 @@ type EventData with

// Load connection sring from your Key Vault (example here is the CosmosDb

// simulator's well known key)

-let connectionString: string =

+let connectionString : string =

"AccountEndpoint=https://localhost:8081;AccountKey=C2y6yDjf5/R+ob0N8A7Cgv30VRDJIWEHLM+4QDU5DE2nQ9nDuVTqobD4b8mGGyPMbIZnqyMsEcaGQy67XIw/Jw==;"

// Forward to Log (you can use `Log.Logger` and/or `Log.ForContext` if your app

@@ -1829,22 +1829,19 @@ let outputLog = LoggerConfiguration().WriteTo.NLog().CreateLogger()

let gatewayLog =

outputLog.ForContext(Serilog.Core.Constants.SourceContextPropertyName, "Equinox")

-// When starting the app, we connect (once)

-let connector : Equinox.Cosmos.Connector =

- Connector(

+let factory : Equinox.CosmosStore.CosmosStoreClientFactory =

+ CosmosStoreClientFactory(

requestTimeout = TimeSpan.FromSeconds 5.,

maxRetryAttemptsOnThrottledRequests = 1,

maxRetryWaitTimeInSeconds = 3,

log = gatewayLog)

-let cnx =

- connector.Connect("Application.CommandProcessor", Discovery.FromConnectionString connectionString)

- |> Async.RunSynchronously

+let client = factory.Create(Discovery.ConnectionString connectionString)

+

// If storing in a single collection, one specifies the db and collection

-// alternately use the overload that defers the mapping until the stream one is

-// writing to becomes clear

-let containerMap = Containers("databaseName", "containerName")

-let ctx = Context(cnx, containerMap, gatewayLog)

+// alternately use the overload that defers the mapping until the stream one is writing to becomes clear

+let connection = CosmosStoreConnection(client, "databaseName", "containerName")

+let ctx = EventsContext(connection, gatewayLog)

//

// Write an event

@@ -1870,7 +1870,7 @@ An Access Strategy defines any optimizations regarding how one arrives at a

State of an Aggregate based on the Events stored in a Stream in a Store.

The specifics of an Access Strategy depend on what makes sense for a given

-Store, i.e. `Equinox.Cosmos` necessarily has a significantly different set of

+Store, i.e. `Equinox.CosmosStore` necessarily has a significantly different set of

strategies than `Equinox.EventStore` (although there is an intersection).

Access Strategies only affect performance; you should still be able to infer

@@ -1881,9 +1881,9 @@ NOTE: its not important to select a strategy until you've actually actually

modelled your aggregate, see [what if I change my access

strategy](#changing-access-strategy)

-## `Equinox.Cosmos.AccessStrategy`

+## `Equinox.CosmosStore.AccessStrategy`

-TL;DR `Equinox.Cosmos`: (see also: [the storage

+TL;DR `Equinox.CosmosStore`: (see also: [the storage

model](cosmos-storage-model) for a deep dive, and [glossary,

below the table](#access-strategy-glossary) for definition of terms)

- keeps all the Events for a Stream in a single [CosmosDB _logical

@@ -2073,20 +2073,20 @@ EventStore, and it's Store adapter is the most proven and is pretty feature

rich relative to the need of consumers to date. Some things remain though:

- Provide a low level walking events in F# API akin to

- `Equinox.Cosmos.Core.Events`; this would allow consumers to jump from direct

+ `Equinox.CosmosStore.Core.Events`; this would allow consumers to jump from direct

use of `EventStore.ClientAPI` -> `Equinox.EventStore.Core.Events` ->

`Equinox.Stream` (with the potential to swap stores once one gets to using

`Equinox.Stream`)

-- Get conflict handling as efficient and predictable as for `Equinox.Cosmos`

+- Get conflict handling as efficient and predictable as for `Equinox.CosmosStore`

https://github.com/jet/equinox/issues/28

- provide for snapshots to be stored out of the stream, and loaded in a

customizable manner in a manner analogous to

- [the proposed comparable `Equinox.Cosmos` facility](https://github.com/jet/equinox/issues/61).

+ [the proposed comparable `Equinox.CosmosStore` facility](https://github.com/jet/equinox/issues/61).

-## Wouldn't it be nice - `Equinox.Cosmos`

+## Wouldn't it be nice - `Equinox.CosmosStore`

- Enable snapshots to be stored outside of the main collection in

- `Equinox.Cosmos` [#61](https://github.com/jet/equinox/issues/61)

+ `Equinox.CosmosStore` [#61](https://github.com/jet/equinox/issues/61)

- Multiple writers support for `u`nfolds (at present a `sync` completely

replaces the unfolds in the Tip; this will be extended by having the stored

proc maintain the union of the unfolds in play (both for semi-related
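The `unfold` term defined in the `DOCUMENTATION.md` hunks above (bearing the signature `'state -> 'event seq`) can be illustrated with a minimal F# sketch. The aggregate below (`CounterEvent`, `evolve`, `fold`, `isOrigin`, `unfold`) is entirely hypothetical and uses no Equinox API; it only shows that folding the unfolded events from `initial` reproduces the state without replaying the original events, and that an unfold qualifies as an origin.

```fsharp
// Hypothetical toy aggregate illustrating fold / isOrigin / unfold; these are not Equinox types.
type CounterEvent =
    | Incremented of int
    | Reset
    | Snapshotted of total : int

let initial = 0

// Apply one event to the state
let evolve (state : int) (event : CounterEvent) : int =
    match event with
    | Incremented n -> state + n
    | Reset -> 0
    | Snapshotted total -> total

let fold (state : int) (events : seq<CounterEvent>) : int = Seq.fold evolve state events

// An origin event lets a load stop reading: the state can be rebuilt from it alone
let isOrigin (event : CounterEvent) =
    match event with
    | Reset | Snapshotted _ -> true
    | Incremented _ -> false

// unfold : 'state -> 'event seq (here, a single snapshot of the state)
let unfold (state : int) : seq<CounterEvent> = Seq.singleton (Snapshotted state)

let events = Seq.ofList [ Incremented 2; Incremented 3; Reset; Incremented 4 ]
let state = fold initial events       // 4
let unfolds = unfold state            // a single Snapshotted 4
let restored = fold initial unfolds   // 4, without replaying `events`
```

Because `Snapshotted` passes `isOrigin`, a reader holding the unfolds can stop there rather than walking back through the stream.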

diff --git a/Equinox.sln b/Equinox.sln

index 80d4d4328..1be43e785 100644

--- a/Equinox.sln

+++ b/Equinox.sln

@@ -47,9 +47,9 @@ Project("{6EC3EE1D-3C4E-46DD-8F32-0CC8E7565705}") = "Equinox.MemoryStore.Integra

EndProject

Project("{6EC3EE1D-3C4E-46DD-8F32-0CC8E7565705}") = "Equinox.Tool", "tools\Equinox.Tool\Equinox.Tool.fsproj", "{C8992C1C-6DC5-42CD-A3D7-1C5663433FED}"

EndProject

-Project("{6EC3EE1D-3C4E-46DD-8F32-0CC8E7565705}") = "Equinox.Cosmos", "src\Equinox.Cosmos\Equinox.Cosmos.fsproj", "{54EA6187-9F9F-4D67-B602-163D011E43E6}"

+Project("{6EC3EE1D-3C4E-46DD-8F32-0CC8E7565705}") = "Equinox.CosmosStore", "src\Equinox.CosmosStore\Equinox.CosmosStore.fsproj", "{54EA6187-9F9F-4D67-B602-163D011E43E6}"

EndProject

-Project("{6EC3EE1D-3C4E-46DD-8F32-0CC8E7565705}") = "Equinox.Cosmos.Integration", "tests\Equinox.Cosmos.Integration\Equinox.Cosmos.Integration.fsproj", "{DE0FEBF0-72DC-4D4A-BBA7-788D875D6B4B}"

+Project("{6EC3EE1D-3C4E-46DD-8F32-0CC8E7565705}") = "Equinox.CosmosStore.Integration", "tests\Equinox.CosmosStore.Integration\Equinox.CosmosStore.Integration.fsproj", "{DE0FEBF0-72DC-4D4A-BBA7-788D875D6B4B}"

EndProject

Project("{6EC3EE1D-3C4E-46DD-8F32-0CC8E7565705}") = "TodoBackend", "samples\TodoBackend\TodoBackend.fsproj", "{EC2EC658-3D85-44F3-AD2F-52AFCAFF8871}"

EndProject

diff --git a/README.md b/README.md

index f20d75451..6cef99749 100644

--- a/README.md

+++ b/README.md

@@ -38,7 +38,7 @@ Some aspects of the implementation are distilled from [`Jet.com` systems dating

- support, (via the [`FsCodec.IEventCodec`](https://github.com/jet/FsCodec#IEventCodec)) for the maintenance of multiple co-existing compaction schemas for a given stream (A 'compaction' event/snapshot isa Event)

- compaction events typically do not get deleted (consistent with how EventStore works), although it is safe to do so in concept

- NB while this works well, and can deliver excellent performance (especially when allied with the Cache), [it's not a panacea, as noted in this excellent EventStore.org article on the topic](https://eventstore.org/docs/event-sourcing-basics/rolling-snapshots/index.html)

-- **`Equinox.Cosmos` 'Tip with Unfolds' schema**: (In contrast to `Equinox.EventStore`'s `AccessStrategy.RollingSnapshots`,) when using `Equinox.Cosmos`, optimized command processing is managed via the `Tip`; a document per stream with a well-known identity enabling Syncing the r/w Position via a single point-read by virtue of the fact that the document maintains:

+- **`Equinox.CosmosStore` 'Tip with Unfolds' schema**: (In contrast to `Equinox.EventStore`'s `AccessStrategy.RollingSnapshots`,) when using `Equinox.CosmosStore`, optimized command processing is managed via the `Tip`; a document per stream with a well-known identity enabling Syncing the r/w Position via a single point-read by virtue of the fact that the document maintains:

a) the present Position of the stream - i.e. the index at which the next events will be appended for a given stream (events and the Tip share a common logical partition key)

b) ephemeral (`deflate+base64` compressed) [_unfolds_](DOCUMENTATION.md#Cosmos-Storage-Model)

c) (optionally) a holding buffer for events since those unfolded events ([presently removed](https://github.com/jet/equinox/pull/58), but [should return](DOCUMENTATION.md#Roadmap), see [#109](https://github.com/jet/equinox/pull/109))

@@ -49,7 +49,7 @@ Some aspects of the implementation are distilled from [`Jet.com` systems dating

- no additional roundtrips to the store needed at either the Load or Sync points in the flow

It should be noted that from a querying perspective, the `Tip` shares the same structure as `Batch` documents (a potential future extension would be to carry some events in the `Tip` as [some interim versions of the implementation once did](https://github.com/jet/equinox/pull/58), see also [#109](https://github.com/jet/equinox/pull/109).

-- **`Equinox.Cosmos` `RollingState` and `Custom` 'non-event-sourced' modes**: Uses 'Tip with Unfolds' encoding to avoid having to write event documents at all - this enables one to build, reason about and test your aggregates in the normal manner, but inhibit event documents from being generated. This enables one to benefit from the caching and consistency management mechanisms without having to bear the cost of writing and storing the events themselves (and/or dealing with an ever-growing store size). Search for `transmute` or `RollingState` in the `samples` and/or see [the `Checkpoint` Aggregate in Propulsion](https://github.com/jet/propulsion/blob/master/src/Propulsion.EventStore/Checkpoint.fs). One chief use of this mechanism is for tracking Summary Event feeds in [the `dotnet-templates` `summaryConsumer` template](https://github.com/jet/dotnet-templates/tree/master/propulsion-summary-consumer).

+- **`Equinox.CosmosStore` `RollingState` and `Custom` 'non-event-sourced' modes**: Uses 'Tip with Unfolds' encoding to avoid having to write event documents at all - this lets one build, reason about and test aggregates in the normal manner while preventing event documents from being generated. This enables one to benefit from the caching and consistency management mechanisms without having to bear the cost of writing and storing the events themselves (and/or dealing with an ever-growing store size). Search for `transmute` or `RollingState` in the `samples` and/or see [the `Checkpoint` Aggregate in Propulsion](https://github.com/jet/propulsion/blob/master/src/Propulsion.EventStore/Checkpoint.fs). One chief use of this mechanism is for tracking Summary Event feeds in [the `dotnet-templates` `summaryConsumer` template](https://github.com/jet/dotnet-templates/tree/master/propulsion-summary-consumer).

## Components

@@ -77,7 +77,7 @@ The components within this repository are delivered as multi-targeted Nuget pack

- `Equinox.Core` [](https://www.nuget.org/packages/Equinox.Core/): Interfaces and helpers used in realizing the concrete Store implementations, together with the default [`System.Runtime.Caching.Cache`-based] `Cache` implementation . ([depends](https://www.fuget.org/packages/Equinox.Core) on `Equinox`, `System.Runtime.Caching`)

- `Equinox.MemoryStore` [](https://www.nuget.org/packages/Equinox.MemoryStore/): In-memory store for integration testing/performance baselining/providing out-of-the-box zero dependency storage for examples. ([depends](https://www.fuget.org/packages/Equinox.MemoryStore) on `Equinox.Core`, `FsCodec`)

- `Equinox.EventStore` [](https://www.nuget.org/packages/Equinox.EventStore/): Production-strength [EventStoreDB](https://eventstore.org/) Adapter instrumented to the degree necessitated by Jet's production monitoring requirements. ([depends](https://www.fuget.org/packages/Equinox.EventStore) on `Equinox.Core`, `EventStore.Client >= 20.6`, `FSharp.Control.AsyncSeq >= 2.0.23`)

-- `Equinox.Cosmos` [](https://www.nuget.org/packages/Equinox.Cosmos/): Production-strength Azure CosmosDB Adapter with integrated 'unfolds' feature, facilitating optimal read performance in terms of latency and RU costs, instrumented to the degree necessitated by Jet's production monitoring requirements. ([depends](https://www.fuget.org/packages/Equinox.Cosmos) on `Equinox.Core`, `Microsoft.Azure.Cosmos >= 3.9`, `FsCodec.NewtonsoftJson`, `FSharp.Control.AsyncSeq >= 2.0.23`)

+- `Equinox.CosmosStore` [](https://www.nuget.org/packages/Equinox.CosmosStore/): Production-strength Azure CosmosDB Adapter with integrated 'unfolds' feature, facilitating optimal read performance in terms of latency and RU costs, instrumented to the degree necessitated by Jet's production monitoring requirements. ([depends](https://www.fuget.org/packages/Equinox.CosmosStore) on `Equinox.Core`, `Microsoft.Azure.Cosmos >= 3.9`, `FsCodec.NewtonsoftJson`, `FSharp.Control.AsyncSeq >= 2.0.23`)

- `Equinox.SqlStreamStore` [](https://www.nuget.org/packages/Equinox.SqlStreamStore/): Production-strength [SqlStreamStore](https://github.com/SQLStreamStore/SQLStreamStore) Adapter derived from `Equinox.EventStore` - provides core facilities (but does not connect to a specific database; see sibling `SqlStreamStore`.* packages). ([depends](https://www.fuget.org/packages/Equinox.SqlStreamStore) on `Equinox.Core`, `FsCodec`, `SqlStreamStore >= 1.2.0-beta.8`, `FSharp.Control.AsyncSeq`)

- `Equinox.SqlStreamStore.MsSql` [](https://www.nuget.org/packages/Equinox.SqlStreamStore.MsSql/): [SqlStreamStore.MsSql](https://sqlstreamstore.readthedocs.io/en/latest/sqlserver) Sql Server `Connector` implementation for `Equinox.SqlStreamStore` package). ([depends](https://www.fuget.org/packages/Equinox.SqlStreamStore.MsSql) on `Equinox.SqlStreamStore`, `SqlStreamStore.MsSql >= 1.2.0-beta.8`)

- `Equinox.SqlStreamStore.MySql` [](https://www.nuget.org/packages/Equinox.SqlStreamStore.MySql/): `SqlStreamStore.MySql` MySQL Í`Connector` implementation for `Equinox.SqlStreamStore` package). ([depends](https://www.fuget.org/packages/Equinox.SqlStreamStore.MySql) on `Equinox.SqlStreamStore`, `SqlStreamStore.MySql >= 1.2.0-beta.8`)

@@ -89,7 +89,7 @@ Equinox does not focus on projection logic or wrapping thereof - each store brin

- `FsKafka` [](https://www.nuget.org/packages/FsKafka/): Wraps `Confluent.Kafka` to provide efficient batched Kafka Producer and Consumer configurations, with basic logging instrumentation. Used in the [`propulsion project kafka`](https://github.com/jet/propulsion#dotnet-tool-provisioning--projections-test-tool) tool command; see [`dotnet new proProjector -k; dotnet new proConsumer` to generate a sample app](https://github.com/jet/dotnet-templates#propulsion-related) using it (see the `BatchedAsync` and `BatchedSync` modules in `Examples.fs`).

- `Propulsion` [](https://www.nuget.org/packages/Propulsion/): defines a canonical `Propulsion.Streams.StreamEvent` used to interop with `Propulsion.*` in processing pipelines for the `proProjector` and `proSync` templates in the [templates repo](https://github.com/jet/dotnet-templates), together with the `Ingestion`, `Streams`, `Progress` and `Parallel` modules that get composed into those processing pipelines. ([depends](https://www.fuget.org/packages/Propulsion) on `Serilog`)

-- `Propulsion.Cosmos` [](https://www.nuget.org/packages/Propulsion.Cosmos/): Wraps the [Microsoft .NET `ChangeFeedProcessor` library](https://github.com/Azure/azure-documentdb-changefeedprocessor-dotnet) providing a [processor loop](DOCUMENTATION.md#change-feed-processors) that maintains a continuous query loop per CosmosDb Physical Partition (Range) yielding new or updated documents (optionally unrolling events written by `Equinox.Cosmos` for processing or forwarding). Used in the [`propulsion project stats cosmos`](dotnet-tool-provisioning--benchmarking-tool) tool command; see [`dotnet new proProjector` to generate a sample app](#quickstart) using it. ([depends](https://www.fuget.org/packages/Propulsion.Cosmos) on `Equinox.Cosmos`, `Microsoft.Azure.DocumentDb.ChangeFeedProcessor >= 2.2.5`)

+- `Propulsion.Cosmos` [](https://www.nuget.org/packages/Propulsion.Cosmos/): Wraps the [Microsoft .NET `ChangeFeedProcessor` library](https://github.com/Azure/azure-documentdb-changefeedprocessor-dotnet) providing a [processor loop](DOCUMENTATION.md#change-feed-processors) that maintains a continuous query loop per CosmosDb Physical Partition (Range) yielding new or updated documents (optionally unrolling events written by `Equinox.CosmosStore` for processing or forwarding). Used in the [`propulsion project stats cosmos`](dotnet-tool-provisioning--benchmarking-tool) tool command; see [`dotnet new proProjector` to generate a sample app](#quickstart) using it. ([depends](https://www.fuget.org/packages/Propulsion.Cosmos) on `Equinox.Cosmos`, `Microsoft.Azure.DocumentDb.ChangeFeedProcessor >= 2.2.5`)

- `Propulsion.EventStore` [](https://www.nuget.org/packages/Propulsion.EventStore/) Used in the [`propulsion project es`](dotnet-tool-provisioning--benchmarking-tool) tool command; see [`dotnet new proSync` to generate a sample app](#quickstart) using it. ([depends](https://www.fuget.org/packages/Propulsion.EventStore) on `Equinox.EventStore`)

- `Propulsion.Kafka` [](https://www.nuget.org/packages/Propulsion.Kafka/): Provides a canonical `RenderedSpan` that can be used as a default format when projecting events via e.g. the Producer/Consumer pair in `dotnet new proProjector -k; dotnet new proConsumer`. ([depends](https://www.fuget.org/packages/Propulsion.Kafka) on `Newtonsoft.Json >= 11.0.2`, `Propulsion`, `FsKafka`)

@@ -586,16 +586,16 @@ The secondary benefit is of course that you have an absolute guarantee there wil

`Equinox.SqlStreamStore` implements this scheme too - it's easier to do things like e.g. replace the bodies of snapshot events with `nulls` as a maintenance task in that instance

-Initially, `Equinox.Cosmos` implemented the same strategy as the `Equinox.EventStore` (it started as a cut and paste of the it). However the present implementation takes advantage of the fact that in a Document Store, you can ... update documents - thus, snapshots (termed unfolds) are saved in a custom field (it's an array) in the Tip document - every update includes an updated snapshot (which is zipped to save read and write costs) which overwrites the preceding unfolds. You're currently always guaranteed that the snapshots are in sync with the latest event by virtue of how the stored proc writes. A DynamoDb impl would likely follow the same strategy

+Initially, `Equinox.CosmosStore` implemented the same strategy as `Equinox.EventStore` (it started as a cut and paste of it). However, the present implementation takes advantage of the fact that in a Document Store, you can ... update documents - thus, snapshots (termed unfolds) are saved in a custom field (it's an array) in the Tip document - every update includes an updated snapshot (which is zipped to save read and write costs) which overwrites the preceding unfolds. You're currently always guaranteed that the snapshots are in sync with the latest event by virtue of how the stored proc writes. A DynamoDb impl would likely follow the same strategy.

I expand (too much!) on some more of the considerations in https://github.com/jet/equinox/blob/master/DOCUMENTATION.md

The other thing that should be pointed out is the caching can typically cover a lot of perf stuff as long as stream lengths stay sane - Snapshotting (esp polluting the stream with snapshot events should definitely be toward the bottom of your list of tactics for managing a stream efficiently given long streams are typically a design smell)

-### Changing Access / Representation strategies in `Equinox.Cosmos` - what happens?

+### Changing Access / Representation strategies in `Equinox.CosmosStore` - what happens?

-> Does Equinox adapt the stream if we start writing with `Equinox.Cosmos.AccessStrategy.RollingState` and change to `Snapshotted` for instance? It could take the last RollingState writing and make the first snapshot ?

+> Does Equinox adapt the stream if we start writing with `Equinox.CosmosStore.AccessStrategy.RollingState` and change to `Snapshotted` for instance? It could take the last RollingState writing and make the first snapshot ?

> what about the opposite? It deletes all events and start writing `RollingState` ?

@@ -617,7 +617,7 @@ General rules:

a) load and decode unfolds from tip (followed by events, if and only if necessary)

b) offer the events to an `isOrigin` function to allow us to stop when we've got a start point (a Reset Event, a relevant snapshot, or, failing that, the start of the stream)

-It may be helpful to look at [how an `AccessStrategy` is mapped to `isOrigin`, `toSnapshot` and `transmute` lambdas internally](https://github.com/jet/equinox/blob/74129903e85e01ce584b4449f629bf3e525515ea/src/Equinox.Cosmos/Cosmos.fs#L1029)

+It may be helpful to look at [how an `AccessStrategy` is mapped to `isOrigin`, `toSnapshot` and `transmute` lambdas internally](https://github.com/jet/equinox/blob/master/src/Equinox.CosmosStore/CosmosStore.fs#L1016)

#### Aaand answering the question

@@ -636,7 +636,7 @@ Then, whenever you emit events from a `decide` or `interpret`, the `AccessStrate

- write updated unfolds/snapshots

- remove or adjust events before they get passed down to the `sync` stored procedure (`Custom`, `RollingState`, `LatestKnownEvent` modes)

-Ouch, not looking forward to reading all that logic :frown: ? [Have a read, it's really not that :scream:](https://github.com/jet/equinox/blob/74129903e85e01ce584b4449f629bf3e525515ea/src/Equinox.Cosmos/Cosmos.fs#L870).

+Ouch, not looking forward to reading all that logic :frown: ? [Have a read, it's really not that :scream:](https://github.com/jet/equinox/blob/master/src/Equinox.CosmosStore/CosmosStore.fs#L1011).
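The general loading rules quoted in the README hunk above (load and decode unfolds from the Tip, read events if and only if necessary, offering each to `isOrigin` until a start point or the start of the stream is reached) can be sketched as a standalone F# function. This is an illustration under stated assumptions, not the `Equinox.CosmosStore` implementation: `load`, `tipUnfolds` (decoded unfolds) and `eventsBackward` (a newest-first event read) are hypothetical stand-ins, as is the toy `Ev` type used to exercise it.

```fsharp
// Minimal sketch of the "unfolds first, then events if and only if necessary" load rule.
// `tipUnfolds` and `eventsBackward` are hypothetical stand-ins, not Equinox APIs.
let load
        (isOrigin : 'event -> bool)
        (fold : 'state -> seq<'event> -> 'state)
        (initial : 'state)
        (tipUnfolds : 'event list)
        (eventsBackward : seq<'event>) : 'state * int =
    match List.tryFindBack isOrigin tipUnfolds with
    | Some origin ->
        // a) the Tip alone suffices: fold the latest origin unfold, read no events
        fold initial (Seq.singleton origin), 0
    | None ->
        // b) walk back from the newest event until isOrigin holds (or the stream starts)
        let acc = ResizeArray<'event>()
        let mutable read = 0
        let mutable found = false
        use e = eventsBackward.GetEnumerator()
        while not found && e.MoveNext() do
            read <- read + 1
            acc.Add(e.Current)
            found <- isOrigin e.Current
        acc.Reverse() // restore oldest-first order before folding
        fold initial (acc :> seq<'event>), read

// Hypothetical toy events for the usage example
type Ev =
    | Added of int
    | Snapshot of total : int

let isOriginEv (e : Ev) = match e with Snapshot _ -> true | Added _ -> false
let foldEv (s : int) (evs : seq<Ev>) =
    evs |> Seq.fold (fun acc ev -> match ev with Added n -> acc + n | Snapshot t -> t) s

// Stream, oldest first: Added 1; Snapshot 10; Added 2; Added 3
let newestFirst = Seq.ofList [ Added 3; Added 2; Snapshot 10; Added 1 ]
let fromEvents = load isOriginEv foldEv 0 [] newestFirst          // (15, 3)
let fromTip = load isOriginEv foldEv 0 [ Snapshot 15 ] newestFirst // (15, 0)
```

The second element of each result counts the events read: with a suitable unfold in the Tip, no events need to be read at all, which is the point of the 'Tip with Unfolds' schema.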

### OK, but you didn't answer my question, you just talked about stuff you wanted to talk about!

diff --git a/build.proj b/build.proj

index 99309aa4b..0d5e2e31a 100644

--- a/build.proj

+++ b/build.proj

@@ -16,7 +16,7 @@

-

+

diff --git a/diagrams/CosmosCode.puml b/diagrams/CosmosCode.puml

index 3f79f20f5..5a93d14f6 100644

--- a/diagrams/CosmosCode.puml

+++ b/diagrams/CosmosCode.puml

@@ -1,5 +1,5 @@

@startuml

-title Code diagram for Equinox.Cosmos Query operation, with empty cache and nothing written to the stream yet

+title Code diagram for Equinox.CosmosStore Query operation, with empty cache and nothing written to the stream yet

actor Caller order 20

box "Equinox.Stream"

@@ -7,7 +7,7 @@ box "Equinox.Stream"

end box

participant Aggregate order 50

participant Service order 60

-box "Equinox.Cosmos / CosmosDB"

+box "Equinox.CosmosStore / CosmosDB"

participant IStream order 80

collections Cache order 90

database CosmosDB order 100

@@ -30,7 +30,7 @@ Stream -> Caller: {result = list }

@enduml

@startuml

-title Code diagram for Equinox.Cosmos Transact operation, with cache up to date using Snapshotting Access Strategy

+title Code diagram for Equinox.CosmosStore Transact operation, with cache up to date using Snapshotting Access Strategy

actor Caller order 20

box "Equinox.Stream"

@@ -38,7 +38,7 @@ box "Equinox.Stream"

end box

participant Aggregate order 50

participant Service order 60

-box "Equinox.Cosmos / CosmosDB"

+box "Equinox.CosmosStore / CosmosDB"

participant IStream order 80

collections Cache order 90

database CosmosDB order 100

@@ -115,7 +115,7 @@ Stream -> Caller: proposedResult

@enduml

@startuml

-title Code diagram for Equinox.Cosmos Query operation immediately following a Query/Transact on the same node, i.e. cached

+title Code diagram for Equinox.CosmosStore Query operation immediately following a Query/Transact on the same node, i.e. cached

actor Caller order 20

box "Equinox.Stream"

@@ -123,7 +123,7 @@ box "Equinox.Stream"

end box

participant Aggregate order 50

participant Service order 60

-box "Equinox.Cosmos / CosmosDB"

+box "Equinox.CosmosStore / CosmosDB"

participant IStream order 80

collections Cache order 90

database CosmosDB order 100

@@ -144,7 +144,7 @@ Aggregate -> Caller: result

@enduml

@startuml

-title Code diagram for Equinox.Cosmos Query operation on a node without an in-sync cached value (with snapshotting Access Strategy)

+title Code diagram for Equinox.CosmosStore Query operation on a node without an in-sync cached value (with snapshotting Access Strategy)

actor Caller order 20

box "Equinox.Stream"

@@ -152,7 +152,7 @@ box "Equinox.Stream"

end box

participant Aggregate order 50

participant Service order 60

-box "Equinox.Cosmos / CosmosDB"

+box "Equinox.CosmosStore / CosmosDB"

participant IStream order 80

collections Cache order 90

database CosmosDB order 100

diff --git a/diagrams/CosmosComponent.puml b/diagrams/CosmosComponent.puml

index 5bed95a2f..9a996e845 100644

--- a/diagrams/CosmosComponent.puml

+++ b/diagrams/CosmosComponent.puml

@@ -1,7 +1,7 @@

@startuml

!includeurl https://raw.githubusercontent.com/skleanthous/C4-PlantumlSkin/master/build/output/c4.puml

-title Component diagram for Equinox.Cosmos (+ Propulsion.Cosmos)

+title Component diagram for Equinox.CosmosStore (+ Propulsion.Cosmos)

caption Moving parts breakdown for an Equinox app using a CosmosDB container

actor "Applications" <> as apps

@@ -23,9 +23,9 @@ rectangle "Consistent Processing" <> {

]

}

frame Store {

- rectangle "Equinox.Cosmos" <> {

+ rectangle "Equinox.CosmosStore" <> {

rectangle eqxcosmos <> [

- Equinox.Cosmos

+ Equinox.CosmosStore

]

database memorycache <> [

**System.MemoryCache**

diff --git a/diagrams/CosmosContainer.puml b/diagrams/CosmosContainer.puml

index b3087a6ac..2ed352148 100644

--- a/diagrams/CosmosContainer.puml

+++ b/diagrams/CosmosContainer.puml

@@ -1,7 +1,7 @@

@startuml

!includeurl https://raw.githubusercontent.com/skleanthous/C4-PlantumlSkin/master/build/output/c4.puml

-title Container diagram for Equinox.Cosmos (+ Propulsion.Cosmos)

+title Container diagram for Equinox.CosmosStore (+ Propulsion.Cosmos)

caption Moving parts for an Equinox app using a CosmosDB container that presently has 2 physical partitions

actor "Applications" <> as apps

@@ -29,7 +29,7 @@ rectangle "Consistent Processing" <> {

]

rectangle eqxcosmos <> [

- Equinox.Cosmos

+ Equinox.CosmosStore

]

cloud "CosmosDB Database D" as db {

diff --git a/diagrams/container.puml b/diagrams/container.puml

index f208b861c..2822de086 100644

--- a/diagrams/container.puml

+++ b/diagrams/container.puml

@@ -23,7 +23,7 @@ together {

frame "Consistent Event Stores" as stores <> {

frame "Cosmos" as cosmos <> {

- rectangle "Equinox.Cosmos" <> as cs

+ rectangle "Equinox.CosmosStore" <> as cs

rectangle "Propulsion.Cosmos" <> as cr

rectangle "Azure.Cosmos" <> as cc

}

diff --git a/samples/Infrastructure/Infrastructure.fsproj b/samples/Infrastructure/Infrastructure.fsproj

index d073373c8..b79e4f928 100644

--- a/samples/Infrastructure/Infrastructure.fsproj

+++ b/samples/Infrastructure/Infrastructure.fsproj

@@ -19,7 +19,7 @@

-

+

diff --git a/samples/Infrastructure/Services.fs b/samples/Infrastructure/Services.fs

index 2ba19ba9b..6ff9b8ded 100644

--- a/samples/Infrastructure/Services.fs

+++ b/samples/Infrastructure/Services.fs

@@ -11,10 +11,9 @@ type StreamResolver(storage) =

initial: 'state,

snapshot: (('event -> bool) * ('state -> 'event))) =

match storage with

- | Storage.StorageConfig.Cosmos (gateway, caching, unfolds, databaseId, containerId) ->

- let store = Equinox.Cosmos.Context(gateway, databaseId, containerId)

- let accessStrategy = if unfolds then Equinox.Cosmos.AccessStrategy.Snapshot snapshot else Equinox.Cosmos.AccessStrategy.Unoptimized

- Equinox.Cosmos.Resolver<'event,'state,_>(store, codec, fold, initial, caching, accessStrategy).Resolve

+ | Storage.StorageConfig.Cosmos (store, caching, unfolds) ->

+ let accessStrategy = if unfolds then Equinox.CosmosStore.AccessStrategy.Snapshot snapshot else Equinox.CosmosStore.AccessStrategy.Unoptimized

+ Equinox.CosmosStore.CosmosStoreCategory<'event,'state,_>(store, codec, fold, initial, caching, accessStrategy).Resolve

| Storage.StorageConfig.Es (context, caching, unfolds) ->

let accessStrategy = if unfolds then Equinox.EventStore.AccessStrategy.RollingSnapshots snapshot |> Some else None

Equinox.EventStore.Resolver<'event,'state,_>(context, codec, fold, initial, ?caching = caching, ?access = accessStrategy).Resolve

diff --git a/samples/Infrastructure/Storage.fs b/samples/Infrastructure/Storage.fs

index 35c7d21e1..32ae868a7 100644

--- a/samples/Infrastructure/Storage.fs

+++ b/samples/Infrastructure/Storage.fs

@@ -10,7 +10,7 @@ type StorageConfig =

// For MemoryStore, we keep the events as UTF8 arrays - we could use FsCodec.Codec.Box to remove the JSON encoding, which would improve perf but can conceal problems

| Memory of Equinox.MemoryStore.VolatileStore

| Es of Equinox.EventStore.Context * Equinox.EventStore.CachingStrategy option * unfolds: bool

- | Cosmos of Equinox.Cosmos.Gateway * Equinox.Cosmos.CachingStrategy * unfolds: bool * databaseId: string * containerId: string

+ | Cosmos of Equinox.CosmosStore.CosmosStoreContext * Equinox.CosmosStore.CachingStrategy * unfolds: bool

| Sql of Equinox.SqlStreamStore.Context * Equinox.SqlStreamStore.CachingStrategy option * unfolds: bool

module MemoryStore =

@@ -67,22 +67,23 @@ module Cosmos =

/// 1) replace connection below with a connection string or Uri+Key for an initialized Equinox instance with a database and collection named "equinox-test"

/// 2) Set the 3x environment variables and create a local Equinox using tools/Equinox.Tool/bin/Release/net461/eqx.exe `

/// init -ru 1000 cosmos -s $env:EQUINOX_COSMOS_CONNECTION -d $env:EQUINOX_COSMOS_DATABASE -c $env:EQUINOX_COSMOS_CONTAINER

- open Equinox.Cosmos

+ open Equinox.CosmosStore

open Serilog

- let private createGateway connection maxItems = Gateway(connection, BatchingPolicy(defaultMaxItems=maxItems))

- let connection (log: ILogger, storeLog: ILogger) (a : Info) =

- let (Discovery.UriAndKey (endpointUri,_)) as discovery = a.Connection |> Discovery.FromConnectionString

+ let conn (log: ILogger) (a : Info) =

+ let discovery = Discovery.ConnectionString a.Connection

+ let client = CosmosStoreClientFactory(a.Timeout, a.Retries, a.MaxRetryWaitTime, mode=a.Mode).Create(discovery)

log.Information("CosmosDb {mode} {connection} Database {database} Container {container}",

- a.Mode, endpointUri, a.Database, a.Container)

+ a.Mode, client.Endpoint, a.Database, a.Container)

log.Information("CosmosDb timeout {timeout}s; Throttling retries {retries}, max wait {maxRetryWaitTime}s",

(let t = a.Timeout in t.TotalSeconds), a.Retries, let x = a.MaxRetryWaitTime in x.TotalSeconds)

- discovery, a.Database, a.Container, Connector(a.Timeout, a.Retries, a.MaxRetryWaitTime, log=storeLog, mode=a.Mode)

- let config (log: ILogger, storeLog) (cache, unfolds, batchSize) info =

- let discovery, dName, cName, connector = connection (log, storeLog) info

- let conn = connector.Connect(appName, discovery) |> Async.RunSynchronously

+ client, a.Database, a.Container

+ let config (log: ILogger) (cache, unfolds, batchSize) info =

+ let client, databaseId, containerId = conn log info

+ let conn = CosmosStoreConnection(client, databaseId, containerId)

+ let ctx = CosmosStoreContext(conn, defaultMaxItems = batchSize)

let cacheStrategy = match cache with Some c -> CachingStrategy.SlidingWindow (c, TimeSpan.FromMinutes 20.) | None -> CachingStrategy.NoCaching

- StorageConfig.Cosmos (createGateway conn batchSize, cacheStrategy, unfolds, dName, cName)

+ StorageConfig.Cosmos (ctx, cacheStrategy, unfolds)

/// To establish a local node to run the tests against:

/// 1. cinst eventstore-oss -y # where cinst is an invocation of the Chocolatey Package Installer on Windows

diff --git a/samples/Store/Integration/CartIntegration.fs b/samples/Store/Integration/CartIntegration.fs

index 462d45300..acbcaabfd 100644

--- a/samples/Store/Integration/CartIntegration.fs

+++ b/samples/Store/Integration/CartIntegration.fs

@@ -1,7 +1,7 @@

module Samples.Store.Integration.CartIntegration

open Equinox

-open Equinox.Cosmos.Integration

+open Equinox.CosmosStore.Integration

open Swensen.Unquote

#nowarn "1182" // From hereon in, we may have some 'unused' privates (the tests)

@@ -21,9 +21,9 @@ let resolveGesStreamWithoutCustomAccessStrategy gateway =

fun (id,opt) -> EventStore.Resolver(gateway, codec, fold, initial).Resolve(id,?option=opt)

let resolveCosmosStreamWithSnapshotStrategy gateway =

- fun (id,opt) -> Cosmos.Resolver(gateway, codec, fold, initial, Cosmos.CachingStrategy.NoCaching, Cosmos.AccessStrategy.Snapshot snapshot).Resolve(id,?option=opt)

+ fun (id,opt) -> CosmosStore.CosmosStoreCategory(gateway, codec, fold, initial, CosmosStore.CachingStrategy.NoCaching, CosmosStore.AccessStrategy.Snapshot snapshot).Resolve(id,?option=opt)

let resolveCosmosStreamWithoutCustomAccessStrategy gateway =

- fun (id,opt) -> Cosmos.Resolver(gateway, codec, fold, initial, Cosmos.CachingStrategy.NoCaching, Cosmos.AccessStrategy.Unoptimized).Resolve(id,?option=opt)

+ fun (id,opt) -> CosmosStore.CosmosStoreCategory(gateway, codec, fold, initial, CosmosStore.CachingStrategy.NoCaching, CosmosStore.AccessStrategy.Unoptimized).Resolve(id,?option=opt)

let addAndThenRemoveItemsManyTimesExceptTheLastOne context cartId skuId (service: Backend.Cart.Service) count =

service.ExecuteManyAsync(cartId, false, seq {

@@ -50,7 +50,7 @@ type Tests(testOutputHelper) =

do! act service args

}

- let arrange connect choose resolve = async {

+ let arrangeEs connect choose resolve = async {

let log = createLog ()

let! conn = connect log

let gateway = choose conn defaultBatchSize

@@ -58,24 +58,29 @@ type Tests(testOutputHelper) =

[]

let ``Can roundtrip against EventStore, correctly folding the events without compaction semantics`` args = Async.RunSynchronously <| async {

- let! service = arrange connectToLocalEventStoreNode createGesGateway resolveGesStreamWithoutCustomAccessStrategy

+ let! service = arrangeEs connectToLocalEventStoreNode createGesGateway resolveGesStreamWithoutCustomAccessStrategy

do! act service args

}

[]

let ``Can roundtrip against EventStore, correctly folding the events with RollingSnapshots`` args = Async.RunSynchronously <| async {

- let! service = arrange connectToLocalEventStoreNode createGesGateway resolveGesStreamWithRollingSnapshots

+ let! service = arrangeEs connectToLocalEventStoreNode createGesGateway resolveGesStreamWithRollingSnapshots

do! act service args

}

+ let arrangeCosmos connect resolve =

+ let log = createLog ()

+ let ctx : CosmosStore.CosmosStoreContext = connect log defaultBatchSize

+ Backend.Cart.create log (resolve ctx)

+

[]

let ``Can roundtrip against Cosmos, correctly folding the events without custom access strategy`` args = Async.RunSynchronously <| async {

- let! service = arrange connectToSpecifiedCosmosOrSimulator createCosmosContext resolveCosmosStreamWithoutCustomAccessStrategy

+ let service = arrangeCosmos createPrimaryContext resolveCosmosStreamWithoutCustomAccessStrategy

do! act service args

}

[]

let ``Can roundtrip against Cosmos, correctly folding the events with With Snapshotting`` args = Async.RunSynchronously <| async {

- let! service = arrange connectToSpecifiedCosmosOrSimulator createCosmosContext resolveCosmosStreamWithSnapshotStrategy

+ let service = arrangeCosmos createPrimaryContext resolveCosmosStreamWithSnapshotStrategy

do! act service args

}

diff --git a/samples/Store/Integration/CodecIntegration.fs b/samples/Store/Integration/CodecIntegration.fs

index 057ae35ce..f5713439a 100644

--- a/samples/Store/Integration/CodecIntegration.fs

+++ b/samples/Store/Integration/CodecIntegration.fs

@@ -24,7 +24,7 @@ type SimpleDu =

| EventD

// See JsonConverterTests for why these are ruled out atm

//| EventE of int // works but disabled due to Strings and DateTimes not working

- //| EventF of string // has wierd semantics, particularly when used with a VerbatimJsonConverter in Equinox.Cosmos

+ //| EventF of string // has weird semantics, particularly when used with a VerbatimJsonConverter in Equinox.CosmosStore

interface IUnionContract

let render = function

@@ -46,4 +46,4 @@ let ``Can roundtrip, rendering correctly`` (x: SimpleDu) =

render x =! if serialized.Data = null then null else System.Text.Encoding.UTF8.GetString(serialized.Data)

let adapted = FsCodec.Core.TimelineEvent.Create(-1L, serialized.EventType, serialized.Data)

let deserialized = codec.TryDecode adapted |> Option.get

- deserialized =! x

\ No newline at end of file

+ deserialized =! x

diff --git a/samples/Store/Integration/ContactPreferencesIntegration.fs b/samples/Store/Integration/ContactPreferencesIntegration.fs

index 178a6158e..b3954a632 100644

--- a/samples/Store/Integration/ContactPreferencesIntegration.fs

+++ b/samples/Store/Integration/ContactPreferencesIntegration.fs

@@ -1,7 +1,7 @@

module Samples.Store.Integration.ContactPreferencesIntegration

open Equinox

-open Equinox.Cosmos.Integration

+open Equinox.CosmosStore.Integration

open Swensen.Unquote

#nowarn "1182" // From hereon in, we may have some 'unused' privates (the tests)

@@ -18,13 +18,13 @@ let resolveStreamGesWithOptimizedStorageSemantics gateway =

let resolveStreamGesWithoutAccessStrategy gateway =

EventStore.Resolver(gateway defaultBatchSize, codec, fold, initial).Resolve

-let resolveStreamCosmosWithLatestKnownEventSemantics gateway =

- Cosmos.Resolver(gateway 1, codec, fold, initial, Cosmos.CachingStrategy.NoCaching, Cosmos.AccessStrategy.LatestKnownEvent).Resolve

-let resolveStreamCosmosUnoptimized gateway =

- Cosmos.Resolver(gateway defaultBatchSize, codec, fold, initial, Cosmos.CachingStrategy.NoCaching, Cosmos.AccessStrategy.Unoptimized).Resolve

-let resolveStreamCosmosRollingUnfolds gateway =

- let access = Cosmos.AccessStrategy.Custom(Domain.ContactPreferences.Fold.isOrigin, Domain.ContactPreferences.Fold.transmute)

- Cosmos.Resolver(gateway defaultBatchSize, codec, fold, initial, Cosmos.CachingStrategy.NoCaching, access).Resolve

+let resolveStreamCosmosWithLatestKnownEventSemantics context =

+ CosmosStore.CosmosStoreCategory(context, codec, fold, initial, CosmosStore.CachingStrategy.NoCaching, CosmosStore.AccessStrategy.LatestKnownEvent).Resolve

+let resolveStreamCosmosUnoptimized context =

+ CosmosStore.CosmosStoreCategory(context, codec, fold, initial, CosmosStore.CachingStrategy.NoCaching, CosmosStore.AccessStrategy.Unoptimized).Resolve

+let resolveStreamCosmosRollingUnfolds context =

+ let access = CosmosStore.AccessStrategy.Custom(Domain.ContactPreferences.Fold.isOrigin, Domain.ContactPreferences.Fold.transmute)

+ CosmosStore.CosmosStoreCategory(context, codec, fold, initial, CosmosStore.CachingStrategy.NoCaching, access).Resolve

type Tests(testOutputHelper) =

let testOutput = TestOutputAdapter testOutputHelper

@@ -61,20 +61,25 @@ type Tests(testOutputHelper) =

do! act service args

}

+ let arrangeCosmos connect resolve batchSize =

+ let log = createLog ()

+ let ctx: CosmosStore.CosmosStoreContext = connect log batchSize

+ Backend.ContactPreferences.create log (resolve ctx)

+

[]

let ``Can roundtrip against Cosmos, correctly folding the events with Unoptimized semantics`` args = Async.RunSynchronously <| async {

- let! service = arrange connectToSpecifiedCosmosOrSimulator createCosmosContext resolveStreamCosmosUnoptimized

+ let service = arrangeCosmos createPrimaryContext resolveStreamCosmosUnoptimized defaultBatchSize

do! act service args

}

[]

let ``Can roundtrip against Cosmos, correctly folding the events with LatestKnownEvent semantics`` args = Async.RunSynchronously <| async {

- let! service = arrange connectToSpecifiedCosmosOrSimulator createCosmosContext resolveStreamCosmosWithLatestKnownEventSemantics

+ let service = arrangeCosmos createPrimaryContext resolveStreamCosmosWithLatestKnownEventSemantics 1

do! act service args

}

[]

let ``Can roundtrip against Cosmos, correctly folding the events with RollingUnfold semantics`` args = Async.RunSynchronously <| async {

- let! service = arrange connectToSpecifiedCosmosOrSimulator createCosmosContext resolveStreamCosmosRollingUnfolds

+ let service = arrangeCosmos createPrimaryContext resolveStreamCosmosRollingUnfolds defaultBatchSize

do! act service args

}

diff --git a/samples/Store/Integration/FavoritesIntegration.fs b/samples/Store/Integration/FavoritesIntegration.fs

index da93ce82f..76a06a733 100644

--- a/samples/Store/Integration/FavoritesIntegration.fs

+++ b/samples/Store/Integration/FavoritesIntegration.fs

@@ -1,7 +1,7 @@

module Samples.Store.Integration.FavoritesIntegration

open Equinox

-open Equinox.Cosmos.Integration

+open Equinox.CosmosStore.Integration

open Swensen.Unquote

#nowarn "1182" // From hereon in, we may have some 'unused' privates (the tests)

@@ -19,12 +19,12 @@ let createServiceGes gateway log =

Backend.Favorites.create log resolver.Resolve

let createServiceCosmos gateway log =

- let resolver = Cosmos.Resolver(gateway, codec, fold, initial, Cosmos.CachingStrategy.NoCaching, Cosmos.AccessStrategy.Snapshot snapshot)

+ let resolver = CosmosStore.CosmosStoreCategory(gateway, codec, fold, initial, CosmosStore.CachingStrategy.NoCaching, CosmosStore.AccessStrategy.Snapshot snapshot)

Backend.Favorites.create log resolver.Resolve

let createServiceCosmosRollingState gateway log =

- let access = Cosmos.AccessStrategy.RollingState Domain.Favorites.Fold.snapshot

- let resolver = Cosmos.Resolver(gateway, codec, fold, initial, Cosmos.CachingStrategy.NoCaching, access)

+ let access = CosmosStore.AccessStrategy.RollingState Domain.Favorites.Fold.snapshot

+ let resolver = CosmosStore.CosmosStoreCategory(gateway, codec, fold, initial, CosmosStore.CachingStrategy.NoCaching, access)

Backend.Favorites.create log resolver.Resolve

type Tests(testOutputHelper) =

@@ -60,17 +60,16 @@ type Tests(testOutputHelper) =

[]

let ``Can roundtrip against Cosmos, correctly folding the events`` args = Async.RunSynchronously <| async {

let log = createLog ()

- let! conn = connectToSpecifiedCosmosOrSimulator log

- let gateway = createCosmosContext conn defaultBatchSize

- let service = createServiceCosmos gateway log

+ let store = createPrimaryContext log defaultBatchSize

+ let service = createServiceCosmos store log

do! act service args

}

[]

let ``Can roundtrip against Cosmos, correctly folding the events with rolling unfolds`` args = Async.RunSynchronously <| async {

let log = createLog ()

- let! conn = connectToSpecifiedCosmosOrSimulator log

- let gateway = createCosmosContext conn defaultBatchSize

- let service = createServiceCosmosRollingState gateway log

+ let log = createLog ()

+ let store = createPrimaryContext log defaultBatchSize

+ let service = createServiceCosmosRollingState store log

do! act service args

}

diff --git a/samples/Store/Integration/Integration.fsproj b/samples/Store/Integration/Integration.fsproj

index 6c0d14cfe..d29db5e2b 100644

--- a/samples/Store/Integration/Integration.fsproj

+++ b/samples/Store/Integration/Integration.fsproj

@@ -18,11 +18,11 @@

-

+

-

+

diff --git a/samples/Store/Integration/LogIntegration.fs b/samples/Store/Integration/LogIntegration.fs

index c4bf13efb..2dcc1dc25 100644

--- a/samples/Store/Integration/LogIntegration.fs

+++ b/samples/Store/Integration/LogIntegration.fs

@@ -1,7 +1,7 @@

module Samples.Store.Integration.LogIntegration

open Equinox.Core

-open Equinox.Cosmos.Integration

+open Equinox.CosmosStore.Integration

open FSharp.UMX

open Swensen.Unquote

open System

@@ -23,7 +23,7 @@ module EquinoxEsInterop =

| Log.Batch (Direction.Backward,c,m) -> "LoadB", m, Some c

{ action = action; stream = metric.stream; interval = metric.interval; bytes = metric.bytes; count = metric.count; batches = batches }

module EquinoxCosmosInterop =

- open Equinox.Cosmos.Store

+ open Equinox.CosmosStore.Core

[]

type FlatMetric = { action: string; stream : string; interval: StopwatchInterval; bytes: int; count: int; responses: int option; ru: float } with

override __.ToString() = sprintf "%s-Stream=%s %s-Elapsed=%O Ru=%O" __.action __.stream __.action __.interval.Elapsed __.ru

@@ -65,7 +65,7 @@ type SerilogMetricsExtractor(emit : string -> unit) =

logEvent.Properties

|> Seq.tryPick (function

| KeyValue (k, SerilogScalar (:? Equinox.EventStore.Log.Event as m)) -> Some <| Choice1Of3 (k,m)

- | KeyValue (k, SerilogScalar (:? Equinox.Cosmos.Store.Log.Event as m)) -> Some <| Choice2Of3 (k,m)

+ | KeyValue (k, SerilogScalar (:? Equinox.CosmosStore.Core.Log.Event as m)) -> Some <| Choice2Of3 (k,m)

| _ -> None)

|> Option.defaultValue (Choice3Of3 ())

let handleLogEvent logEvent =

@@ -125,9 +125,8 @@ type Tests() =

let batchSize = defaultBatchSize

let buffer = ConcurrentQueue()

let log = createLoggerWithMetricsExtraction buffer.Enqueue

- let! conn = connectToSpecifiedCosmosOrSimulator log

- let gateway = createCosmosContext conn batchSize

- let service = Backend.Cart.create log (CartIntegration.resolveCosmosStreamWithSnapshotStrategy gateway)

+ let store = createPrimaryContext log batchSize

+ let service = Backend.Cart.create log (CartIntegration.resolveCosmosStreamWithSnapshotStrategy store)

let itemCount = batchSize / 2 + 1

let cartId = % Guid.NewGuid()

do! act buffer service itemCount context cartId skuId "EqxCosmos Tip " // one is a 404, one is a 200

diff --git a/samples/Tutorial/AsAt.fsx b/samples/Tutorial/AsAt.fsx

index 691ddeba7..f99209554 100644

--- a/samples/Tutorial/AsAt.fsx

+++ b/samples/Tutorial/AsAt.fsx

@@ -33,7 +33,7 @@

#r "Equinox.EventStore.dll"

#r "Microsoft.Azure.Cosmos.Direct.dll"

#r "Microsoft.Azure.Cosmos.Client.dll"

-#r "Equinox.Cosmos.dll"

+#r "Equinox.CosmosStore.dll"

open System

@@ -124,12 +124,12 @@ module Log =

let c = LoggerConfiguration()

let c = if verbose then c.MinimumLevel.Debug() else c

let c = c.WriteTo.Sink(Equinox.EventStore.Log.InternalMetrics.Stats.LogSink()) // to power Log.InternalMetrics.dump

- let c = c.WriteTo.Sink(Equinox.Cosmos.Store.Log.InternalMetrics.Stats.LogSink()) // to power Log.InternalMetrics.dump

+ let c = c.WriteTo.Sink(Equinox.CosmosStore.Core.Log.InternalMetrics.Stats.LogSink()) // to power Log.InternalMetrics.dump

let c = c.WriteTo.Seq("http://localhost:5341") // https://getseq.net

let c = c.WriteTo.Console(if verbose then LogEventLevel.Debug else LogEventLevel.Information)

c.CreateLogger()

let dumpMetrics () =

- Equinox.Cosmos.Store.Log.InternalMetrics.dump log

+ Equinox.CosmosStore.Core.Log.InternalMetrics.dump log

Equinox.EventStore.Log.InternalMetrics.dump log

let [] appName = "equinox-tutorial"

@@ -153,7 +153,7 @@ module EventStore =

let resolve id = Equinox.Stream(Log.log, resolver.Resolve(streamName id), maxAttempts = 3)

module Cosmos =

- open Equinox.Cosmos

+ open Equinox.CosmosStore

let read key = System.Environment.GetEnvironmentVariable key |> Option.ofObj |> Option.get

let connector = Connector(TimeSpan.FromSeconds 5., 2, TimeSpan.FromSeconds 5., log=Log.log, mode=Microsoft.Azure.Cosmos.ConnectionMode.Gateway)

@@ -161,7 +161,7 @@ module Cosmos =

let context = Context(conn, read "EQUINOX_COSMOS_DATABASE", read "EQUINOX_COSMOS_CONTAINER")

let cacheStrategy = CachingStrategy.SlidingWindow (cache, TimeSpan.FromMinutes 20.) // OR CachingStrategy.NoCaching

let accessStrategy = AccessStrategy.Snapshot (Fold.isValid,Fold.snapshot)

- let resolver = Resolver(context, Events.codec, Fold.fold, Fold.initial, cacheStrategy, accessStrategy)

+ let resolver = CosmosStoreCategory(context, Events.codec, Fold.fold, Fold.initial, cacheStrategy, accessStrategy)

let resolve id = Equinox.Stream(Log.log, resolver.Resolve(streamName id), maxAttempts = 3)

let serviceES = Service(EventStore.resolve)

diff --git a/samples/Tutorial/Cosmos.fsx b/samples/Tutorial/Cosmos.fsx

index 8c9b7942f..4a44cd975 100644

--- a/samples/Tutorial/Cosmos.fsx

+++ b/samples/Tutorial/Cosmos.fsx

@@ -17,7 +17,7 @@

#r "Microsoft.Azure.Cosmos.Client.dll"

#r "System.Net.Http"

#r "Serilog.Sinks.Seq.dll"

-#r "Equinox.Cosmos.dll"

+#r "Equinox.CosmosStore.dll"

module Log =

@@ -27,11 +27,11 @@ module Log =

let log =

let c = LoggerConfiguration()

let c = if verbose then c.MinimumLevel.Debug() else c

- let c = c.WriteTo.Sink(Equinox.Cosmos.Store.Log.InternalMetrics.Stats.LogSink()) // to power Log.InternalMetrics.dump

+ let c = c.WriteTo.Sink(Equinox.CosmosStore.Core.Log.InternalMetrics.Stats.LogSink()) // to power Log.InternalMetrics.dump

let c = c.WriteTo.Seq("http://localhost:5341") // https://getseq.net

let c = c.WriteTo.Console(if verbose then LogEventLevel.Debug else LogEventLevel.Information)

c.CreateLogger()

- let dumpMetrics () = Equinox.Cosmos.Store.Log.InternalMetrics.dump log

+ let dumpMetrics () = Equinox.CosmosStore.Core.Log.InternalMetrics.dump log

module Favorites =

@@ -82,11 +82,11 @@ module Favorites =

module Cosmos =

- open Equinox.Cosmos // Everything outside of this module is completely storage agnostic so can be unit tested simply and/or bound to any store

+ open Equinox.CosmosStore // Everything outside of this module is completely storage agnostic so can be unit tested simply and/or bound to any store

let accessStrategy = AccessStrategy.Unoptimized // Or Snapshot etc https://github.com/jet/equinox/blob/master/DOCUMENTATION.md#access-strategies

let create (context, cache) =

let cacheStrategy = CachingStrategy.SlidingWindow (cache, System.TimeSpan.FromMinutes 20.) // OR CachingStrategy.NoCaching

- let resolver = Resolver(context, Events.codec, Fold.fold, Fold.initial, cacheStrategy, accessStrategy)

+ let resolver = CosmosStoreCategory(context, Events.codec, Fold.fold, Fold.initial, cacheStrategy, accessStrategy)

create resolver.Resolve

let [] appName = "equinox-tutorial"

@@ -94,9 +94,9 @@ let [] appName = "equinox-tutorial"

module Store =

let read key = System.Environment.GetEnvironmentVariable key |> Option.ofObj |> Option.get

- let connector = Equinox.Cosmos.Connector(System.TimeSpan.FromSeconds 5., 2, System.TimeSpan.FromSeconds 5., log=Log.log)

- let conn = connector.Connect(appName, Equinox.Cosmos.Discovery.FromConnectionString (read "EQUINOX_COSMOS_CONNECTION")) |> Async.RunSynchronously

- let createContext () = Equinox.Cosmos.Context(conn, read "EQUINOX_COSMOS_DATABASE", read "EQUINOX_COSMOS_CONTAINER")

+ let discovery = Equinox.CosmosStore.Discovery.ConnectionString (read "EQUINOX_COSMOS_CONNECTION")

+ let client = Equinox.CosmosStore.CosmosStoreClientFactory(System.TimeSpan.FromSeconds 5., 2, System.TimeSpan.FromSeconds 5.).Create(discovery)

+ let createContext () = Equinox.CosmosStore.CosmosStoreContext(Equinox.CosmosStore.CosmosStoreConnection(client, read "EQUINOX_COSMOS_DATABASE", read "EQUINOX_COSMOS_CONTAINER"))

let context = Store.createContext ()

let cache = Equinox.Cache(appName, 20)

diff --git a/samples/Tutorial/FulfilmentCenter.fsx b/samples/Tutorial/FulfilmentCenter.fsx

index 18972d4f7..022b3be58 100644

--- a/samples/Tutorial/FulfilmentCenter.fsx

+++ b/samples/Tutorial/FulfilmentCenter.fsx

@@ -11,7 +11,7 @@

#r "Microsoft.Azure.Cosmos.Client.dll"

#r "System.Net.Http"

#r "Serilog.Sinks.Seq.dll"

-#r "Equinox.Cosmos.dll"

+#r "Equinox.CosmosStore.dll"

open FSharp.UMX

@@ -103,7 +103,7 @@ module FulfilmentCenter =

member __.Read id : Async = read id

member __.QueryWithVersion(id, render : Fold.State -> 'res) : Async = queryEx id render

-open Equinox.Cosmos

+open Equinox.CosmosStore

open System

module Log =

@@ -114,11 +114,11 @@ module Log =

let log =

let c = LoggerConfiguration()

let c = if verbose then c.MinimumLevel.Debug() else c

- let c = c.WriteTo.Sink(Store.Log.InternalMetrics.Stats.LogSink()) // to power Log.InternalMetrics.dump

+ let c = c.WriteTo.Sink(Core.Log.InternalMetrics.Stats.LogSink()) // to power Log.InternalMetrics.dump

let c = c.WriteTo.Seq("http://localhost:5341") // https://getseq.net

let c = c.WriteTo.Console(if verbose then LogEventLevel.Debug else LogEventLevel.Information)

c.CreateLogger()

- let dumpMetrics () = Store.Log.InternalMetrics.dump log

+ let dumpMetrics () = Core.Log.InternalMetrics.dump log

module Store =

@@ -133,7 +133,7 @@ module Store =

open FulfilmentCenter

-let resolver = Resolver(Store.context, Events.codec, Fold.fold, Fold.initial, Store.cacheStrategy, AccessStrategy.Unoptimized)

+let resolver = CosmosStoreCategory(Store.context, Events.codec, Fold.fold, Fold.initial, Store.cacheStrategy, AccessStrategy.Unoptimized)

let resolve id = Equinox.Stream(Log.log, resolver.Resolve(streamName id), maxAttempts = 3)

let service = Service(resolve)

diff --git a/samples/Tutorial/Gapless.fs b/samples/Tutorial/Gapless.fs

index 7042b4e10..5d84ea7ce 100644

--- a/samples/Tutorial/Gapless.fs

+++ b/samples/Tutorial/Gapless.fs

@@ -76,10 +76,10 @@ let [] appName = "equinox-tutorial-gapless"

module Cosmos =

- open Equinox.Cosmos

+ open Equinox.CosmosStore

let private create (context,cache,accessStrategy) =

let cacheStrategy = CachingStrategy.SlidingWindow (cache, TimeSpan.FromMinutes 20.) // OR CachingStrategy.NoCaching

- let resolver = Resolver(context, Events.codec, Fold.fold, Fold.initial, cacheStrategy, accessStrategy)

+ let resolver = CosmosStoreCategory(context, Events.codec, Fold.fold, Fold.initial, cacheStrategy, accessStrategy)

let resolve sequenceId =

let streamName = streamName sequenceId

Equinox.Stream(Serilog.Log.Logger, resolver.Resolve streamName, maxAttempts = 3)

diff --git a/samples/Tutorial/Index.fs b/samples/Tutorial/Index.fs

index 92bd1c06b..c1a6c9241 100644

--- a/samples/Tutorial/Index.fs

+++ b/samples/Tutorial/Index.fs

@@ -53,11 +53,11 @@ let create<'t> resolve indexId =

module Cosmos =

- open Equinox.Cosmos

+ open Equinox.CosmosStore

let create<'v> (context,cache) =

let cacheStrategy = CachingStrategy.SlidingWindow (cache, System.TimeSpan.FromMinutes 20.)

let accessStrategy = AccessStrategy.RollingState Fold.snapshot

- let resolver = Resolver(context, Events.codec, Fold.fold, Fold.initial, cacheStrategy, accessStrategy)

+ let resolver = CosmosStoreCategory(context, Events.codec, Fold.fold, Fold.initial, cacheStrategy, accessStrategy)

create resolver.Resolve

module MemoryStore =

diff --git a/samples/Tutorial/Sequence.fs b/samples/Tutorial/Sequence.fs

index c69acb510..ebdfb0d34 100644

--- a/samples/Tutorial/Sequence.fs

+++ b/samples/Tutorial/Sequence.fs

@@ -55,10 +55,10 @@ let create resolve =

module Cosmos =

- open Equinox.Cosmos

+ open Equinox.CosmosStore

let private create (context,cache,accessStrategy) =

let cacheStrategy = CachingStrategy.SlidingWindow (cache, TimeSpan.FromMinutes 20.) // OR CachingStrategy.NoCaching

- let resolver = Resolver(context, Events.codec, Fold.fold, Fold.initial, cacheStrategy, accessStrategy)

+ let resolver = CosmosStoreCategory(context, Events.codec, Fold.fold, Fold.initial, cacheStrategy, accessStrategy)

create resolver.Resolve

module LatestKnownEvent =

diff --git a/samples/Tutorial/Set.fs b/samples/Tutorial/Set.fs

index b9b5a3ae7..d7e013e5b 100644

--- a/samples/Tutorial/Set.fs

+++ b/samples/Tutorial/Set.fs

@@ -53,11 +53,11 @@ let create resolve setId =

module Cosmos =

- open Equinox.Cosmos

+ open Equinox.CosmosStore

let create (context,cache) =

let cacheStrategy = CachingStrategy.SlidingWindow (cache, System.TimeSpan.FromMinutes 20.)

let accessStrategy = AccessStrategy.RollingState Fold.snapshot

- let resolver = Resolver(context, Events.codec, Fold.fold, Fold.initial, cacheStrategy, accessStrategy)

+ let resolver = CosmosStoreCategory(context, Events.codec, Fold.fold, Fold.initial, cacheStrategy, accessStrategy)

create resolver.Resolve

module MemoryStore =

diff --git a/samples/Tutorial/Todo.fsx b/samples/Tutorial/Todo.fsx

index cc642230a..aeebae2ac 100644

--- a/samples/Tutorial/Todo.fsx

+++ b/samples/Tutorial/Todo.fsx

@@ -15,7 +15,7 @@

#r "FsCodec.NewtonsoftJson.dll"

#r "FSharp.Control.AsyncSeq.dll"

#r "Microsoft.Azure.Cosmos.Client.dll"

-#r "Equinox.Cosmos.dll"

+#r "Equinox.CosmosStore.dll"

open System

@@ -116,7 +116,7 @@ let log = LoggerConfiguration().WriteTo.Console().CreateLogger()

let [] appName = "equinox-tutorial"

let cache = Equinox.Cache(appName, 20)

-open Equinox.Cosmos

+open Equinox.CosmosStore

module Store =

let read key = Environment.GetEnvironmentVariable key |> Option.ofObj |> Option.get

@@ -129,7 +129,7 @@ module Store =

module TodosCategory =

let access = AccessStrategy.Snapshot (isOrigin,snapshot)

- let resolver = Resolver(Store.store, codec, fold, initial, Store.cacheStrategy, access=access)

+ let resolver = CosmosStoreCategory(Store.store, codec, fold, initial, Store.cacheStrategy, access=access)

let resolve id = Equinox.Stream(log, resolver.Resolve(streamName id), maxAttempts = 3)

let service = Service(TodosCategory.resolve)

diff --git a/samples/Tutorial/Tutorial.fsproj b/samples/Tutorial/Tutorial.fsproj

index 250f0f066..bb4b8c3a8 100644

--- a/samples/Tutorial/Tutorial.fsproj

+++ b/samples/Tutorial/Tutorial.fsproj

@@ -24,7 +24,7 @@

-

+

diff --git a/samples/Tutorial/Upload.fs b/samples/Tutorial/Upload.fs

index f7b5b742c..b11ed8c2f 100644

--- a/samples/Tutorial/Upload.fs

+++ b/samples/Tutorial/Upload.fs

@@ -70,10 +70,10 @@ let create resolve =

module Cosmos =

- open Equinox.Cosmos

+ open Equinox.CosmosStore

let create (context,cache) =

let cacheStrategy = CachingStrategy.SlidingWindow (cache, TimeSpan.FromMinutes 20.) // OR CachingStrategy.NoCaching

- let resolver = Resolver(context, Events.codec, Fold.fold, Fold.initial, cacheStrategy, AccessStrategy.LatestKnownEvent)

+ let resolver = CosmosStoreCategory(context, Events.codec, Fold.fold, Fold.initial, cacheStrategy, AccessStrategy.LatestKnownEvent)

create resolver.Resolve

module EventStore =

diff --git a/samples/Web/Program.fs b/samples/Web/Program.fs

index c49531f82..fab9549b7 100644

--- a/samples/Web/Program.fs

+++ b/samples/Web/Program.fs

@@ -29,7 +29,7 @@ module Program =

.Enrich.FromLogContext()

.WriteTo.Console()

// TOCONSIDER log and reset every minute or something ?

- .WriteTo.Sink(Equinox.Cosmos.Store.Log.InternalMetrics.Stats.LogSink())

+ .WriteTo.Sink(Equinox.CosmosStore.Core.Log.InternalMetrics.Stats.LogSink())

.WriteTo.Sink(Equinox.EventStore.Log.InternalMetrics.Stats.LogSink())

.WriteTo.Sink(Equinox.SqlStreamStore.Log.InternalMetrics.Stats.LogSink())

let c =

@@ -41,4 +41,4 @@ module Program =

0

with e ->

eprintfn "%s" e.Message

- 1

\ No newline at end of file

+ 1

diff --git a/samples/Web/Startup.fs b/samples/Web/Startup.fs

index e896f6f09..51245dd2c 100644

--- a/samples/Web/Startup.fs

+++ b/samples/Web/Startup.fs

@@ -70,7 +70,7 @@ type Startup() =

| Some (Cosmos sargs) ->

let storeLog = createStoreLog <| sargs.Contains Storage.Cosmos.Arguments.VerboseStore

log.Information("CosmosDb Storage options: {options:l}", options)

- Storage.Cosmos.config (log,storeLog) (cache, unfolds, defaultBatchSize) (Storage.Cosmos.Info sargs), storeLog

+ Storage.Cosmos.config log (cache, unfolds, defaultBatchSize) (Storage.Cosmos.Info sargs), storeLog

| Some (Es sargs) ->

let storeLog = createStoreLog <| sargs.Contains Storage.EventStore.Arguments.VerboseStore

log.Information("EventStore Storage options: {options:l}", options)

diff --git a/src/Equinox.Cosmos/Cosmos.fs b/src/Equinox.CosmosStore/CosmosStore.fs

similarity index 73%

rename from src/Equinox.Cosmos/Cosmos.fs

rename to src/Equinox.CosmosStore/CosmosStore.fs

index 8912c923a..15bf418c2 100644

--- a/src/Equinox.Cosmos/Cosmos.fs

+++ b/src/Equinox.CosmosStore/CosmosStore.fs

@@ -1,12 +1,12 @@

-namespace Equinox.Cosmos.Store

+namespace Equinox.CosmosStore.Core

open Equinox.Core

open FsCodec

+open FSharp.Control

open Microsoft.Azure.Cosmos

open Newtonsoft.Json

open Serilog

open System

-open System.IO

/// A single Domain Event from the array held in a Batch

type []

@@ -78,6 +78,7 @@ type []

static member internal IndexedFields = [Batch.PartitionKeyField; "i"; "n"]

/// Compaction/Snapshot/Projection Event based on the state at a given point in time `i`

+[]

type Unfold =

{ /// Base: Stream Position (Version) of State from which this Unfold Event was generated

i: int64

@@ -104,7 +105,7 @@ and Base64DeflateUtf8JsonConverter() =

let pickle (input : byte[]) : string =

if input = null then null else

- use output = new MemoryStream()

+ use output = new System.IO.MemoryStream()

use compressor = new System.IO.Compression.DeflateStream(output, System.IO.Compression.CompressionLevel.Optimal)

compressor.Write(input,0,input.Length)

compressor.Close()

@@ -113,9 +114,9 @@ and Base64DeflateUtf8JsonConverter() =

if str = null then null else

let compressedBytes = System.Convert.FromBase64String str

- use input = new MemoryStream(compressedBytes)

+ use input = new System.IO.MemoryStream(compressedBytes)

use decompressor = new System.IO.Compression.DeflateStream(input, System.IO.Compression.CompressionMode.Decompress)

- use output = new MemoryStream()

+ use output = new System.IO.MemoryStream()

decompressor.CopyTo(output)

output.ToArray()

@@ -159,8 +160,8 @@ type []

static member internal WellKnownDocumentId = "-1"

/// Position and Etag to which an operation is relative

-type []

- Position = { index: int64; etag: string option }

+[]

+type Position = { index: int64; etag: string option }

module internal Position =

/// NB very inefficient compared to FromDocument or using one already returned to you

@@ -392,12 +393,13 @@ module private MicrosoftAzureCosmosWrappers =

// NB while the docs suggest you may see a 412, the NotModified in the body of the try/with is actually what happens

| CosmosException (CosmosStatusCode System.Net.HttpStatusCode.PreconditionFailed as e) -> return e.RequestCharge, NotModified }

-module Sync =

- // NB don't nest in a private module, or serialization will fail miserably ;)

- []

- type SyncResponse = { etag: string; n: int64; conflicts: Unfold[] }

- let [<Literal>] private sprocName = "EquinoxRollingUnfolds4" // NB need to rename/number for any breaking change

- let [<Literal>] private sprocBody = """

+// NB don't nest in a private module, or serialization will fail miserably ;)

+[]

+type SyncResponse = { etag: string; n: int64; conflicts: Unfold[] }

+

+module internal SyncStoredProc =

+ let [<Literal>] name = "EquinoxRollingUnfolds4" // NB need to rename/number for any breaking change

+ let [<Literal>] body = """

// Manages the merging of the supplied Request Batch into the stream

// 0 perform concurrency check (index=-1 -> always append; index=-2 -> check based on .etag; _ -> check .n=.index)

@@ -481,28 +483,37 @@ function sync(req, expIndex, expEtag) {

}

}"""

+[]

+type internal SyncExp = Version of int64 | Etag of string | Any

+

+module internal Sync =

+

[]

type Result =

| Written of Position

| Conflict of Position * events: ITimelineEvent[]

| ConflictUnknown of Position

- type [] Exp = Version of int64 | Etag of string | Any

let private run (container : Container, stream : string) (exp, req: Tip)

: Async = async {

- let ep = match exp with Exp.Version ev -> Position.fromI ev | Exp.Etag et -> Position.fromEtag et | Exp.Any -> Position.fromAppendAtEnd

+ let ep =

+ match exp with

+ | SyncExp.Version ev -> Position.fromI ev

+ | SyncExp.Etag et -> Position.fromEtag et

+ | SyncExp.Any -> Position.fromAppendAtEnd

let! ct = Async.CancellationToken

let args = [| box req; box ep.index; box (Option.toObj ep.etag)|]

let! (res : Scripts.StoredProcedureExecuteResponse) =

- container.Scripts.ExecuteStoredProcedureAsync(sprocName, PartitionKey stream, args, cancellationToken = ct) |> Async.AwaitTaskCorrect

+ container.Scripts.ExecuteStoredProcedureAsync(SyncStoredProc.name, PartitionKey stream, args, cancellationToken = ct) |> Async.AwaitTaskCorrect

let newPos = { index = res.Resource.n; etag = Option.ofObj res.Resource.etag }

return res.RequestCharge, res.Resource.conflicts |> function

| null -> Result.Written newPos

| [||] when newPos.index = 0L -> Result.Conflict (newPos, Array.empty)

| [||] -> Result.ConflictUnknown newPos

- | xs -> Result.Conflict (newPos, Enum.Unfolds xs |> Array.ofSeq) }

+ | xs ->

+ Result.Conflict (newPos, Enum.Unfolds xs |> Array.ofSeq) }

- let private logged (container,stream) (exp : Exp, req: Tip) (log : ILogger)

+ let private logged (container,stream) (exp : SyncExp, req: Tip) (log : ILogger)

: Async = async {

let! t, (ru, result) = run (container,stream) (exp, req) |> Stopwatch.Time

let (Log.BatchLen bytes), count = Enum.Events req, req.e.Length

@@ -512,9 +523,9 @@ function sync(req, expIndex, expEtag) {

let verbose = log.IsEnabled Serilog.Events.LogEventLevel.Debug

(if verbose then log |> Log.propEvents (Enum.Events req) |> Log.propDataUnfolds req.u else log)

|> match exp with

- | Exp.Etag et -> Log.prop "expectedEtag" et

- | Exp.Version ev -> Log.prop "expectedVersion" ev

- | Exp.Any -> Log.prop "expectedVersion" -1

+ | SyncExp.Etag et -> Log.prop "expectedEtag" et

+ | SyncExp.Version ev -> Log.prop "expectedVersion" ev

+ | SyncExp.Any -> Log.prop "expectedVersion" -1

|> match result with

| Result.Written pos ->

Log.prop "nextExpectedVersion" pos >> Log.event (Log.SyncSuccess (mkMetric ru))

@@ -527,8 +538,8 @@ function sync(req, expIndex, expEtag) {

"Sync", stream, count, req.u.Length, (let e = t.Elapsed in e.TotalMilliseconds), ru, bytes, exp)

return result }

- let batch (log : ILogger) retryPolicy containerStream batch: Async =

- let call = logged containerStream batch

+ let batch (log : ILogger) retryPolicy containerStream expBatch: Async =

+ let call = logged containerStream expBatch

Log.withLoggedRetries retryPolicy "writeAttempt" call log

let mkBatch (stream: string) (events: IEventData<_>[]) unfolds: Tip =

{ p = stream; id = Tip.WellKnownDocumentId; n = -1L(*Server-managed*); i = -1L(*Server-managed*); _etag = null

@@ -537,76 +548,86 @@ function sync(req, expIndex, expEtag) {

let mkUnfold baseIndex (unfolds: IEventData<_> seq) : Unfold seq =

unfolds |> Seq.mapi (fun offset x -> { i = baseIndex + int64 offset; c = x.EventType; d = x.Data; m = x.Meta; t = DateTimeOffset.UtcNow } : Unfold)

- module Initialization =

- type [] Provisioning = Container of rus: int | Database of rus: int

- let adjustOfferC (c:Container) (rus : int) = async {

- let! ct = Async.CancellationToken

- let! _ = c.ReplaceThroughputAsync(rus, cancellationToken = ct) |> Async.AwaitTaskCorrect in () }

- let adjustOfferD (d:Database) (rus : int) = async {

- let! ct = Async.CancellationToken

- let! _ = d.ReplaceThroughputAsync(rus, cancellationToken = ct) |> Async.AwaitTaskCorrect in () }

- let private createDatabaseIfNotExists (client:CosmosClient) dName maybeRus = async {

- let! ct = Async.CancellationToken