diff --git a/docker/transformers-all-latest-gpu/Dockerfile b/docker/transformers-all-latest-gpu/Dockerfile
index 85a3e63680cb..c96b4cc79b3b 100644
--- a/docker/transformers-all-latest-gpu/Dockerfile
+++ b/docker/transformers-all-latest-gpu/Dockerfile
@@ -44,6 +44,8 @@ RUN python3 -m pip install -U "itsdangerous<2.1.0"

 RUN python3 -m pip install --no-cache-dir git+https://github.com/huggingface/accelerate@main#egg=accelerate

+RUN python3 -m pip install --no-cache-dir git+https://github.com/huggingface/peft@main#egg=peft
+
 # Add bitsandbytes for mixed int8 testing
 RUN python3 -m pip install --no-cache-dir bitsandbytes

diff --git a/docs/source/en/_toctree.yml b/docs/source/en/_toctree.yml
index 9d1c33900c10..adb0f475ee3c 100644
--- a/docs/source/en/_toctree.yml
+++ b/docs/source/en/_toctree.yml
@@ -19,6 +19,8 @@
     title: Train with a script
   - local: accelerate
     title: Set up distributed training with 🤗 Accelerate
+  - local: peft
+    title: Load and train adapters with 🤗 PEFT
   - local: model_sharing
     title: Share your model
   - local: transformers_agents
diff --git a/docs/source/en/peft.md b/docs/source/en/peft.md
new file mode 100644
index 000000000000..302b614e5f7b
--- /dev/null
+++ b/docs/source/en/peft.md
@@ -0,0 +1,216 @@
+
+
+# Load adapters with 🤗 PEFT
+
+[[open-in-colab]]
+
+[Parameter-Efficient Fine-Tuning (PEFT)](https://huggingface.co/blog/peft) methods freeze the pretrained model parameters during fine-tuning and add a small number of trainable parameters (the adapters) on top of the frozen model. Only the adapters are trained, so they are what learn the task-specific information. This approach has been shown to be very memory-efficient and to use less compute, while producing results comparable to those of a fully fine-tuned model.
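The freeze-and-add idea can be sketched in plain Python. This is a minimal illustration that assumes a LoRA-style adapter (a trainable low-rank update `B @ A` added to a frozen weight matrix), which is only one of the methods PEFT supports. The `LoRALinear` class and `matvec` helper are hypothetical names for this sketch and are not part of 🤗 PEFT or 🤗 Transformers.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector (a list of floats)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class LoRALinear:
    """A frozen linear layer plus a small trainable low-rank adapter.

    Hypothetical LoRA-style illustration; not the PEFT library's API.
    """

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # pretrained weights: frozen, never updated
        # Trainable adapter: A projects the input down to `rank` dims,
        # B projects back up. B starts at zero, so the adapter has no
        # effect on the output until it is trained.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def trainable_parameters(self):
        # Only the adapter matrices would be handed to an optimizer.
        return [self.A, self.B]

    def forward(self, x):
        base = matvec(self.weight, x)              # frozen pretrained path
        delta = matvec(self.B, matvec(self.A, x))  # task-specific adapter path
        return [b + self.scale * d for b, d in zip(base, delta)]


layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], rank=1)
print(layer.forward([1.0, 1.0]))  # [3.0, 7.0]
```

Because `B` starts at zero, the adapted layer initially reproduces the pretrained layer exactly; training then updates only `A` and `B` and leaves `weight` untouched.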

+
+Adapters trained with PEFT are also usually much smaller than the full model, often by an order of magnitude or more, which makes them convenient to share, store, and load.
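To see where the size savings come from, the arithmetic below counts parameters for a LoRA-style low-rank adapter, one common PEFT method, on a single weight matrix. The layer size (4096 × 4096), the rank (8), and 16-bit storage are illustrative assumptions, not values from this guide; the savings for a real model depend on the method, the rank, and how many layers are adapted.

```python
def dense_params(d_in, d_out):
    """Parameters in a full d_out x d_in weight matrix."""
    return d_in * d_out


def lora_params(d_in, d_out, rank):
    """Parameters in a LoRA-style adapter: A is rank x d_in, B is d_out x rank."""
    return rank * (d_in + d_out)


def size_bytes(num_params, bytes_per_param=2):
    """Storage size, assuming 16-bit (2-byte) parameters by default."""
    return num_params * bytes_per_param


# Hypothetical example: one 4096 x 4096 projection with a rank-8 adapter.
full = dense_params(4096, 4096)
adapter = lora_params(4096, 4096, rank=8)

print(full, adapter)    # 16777216 65536
print(full // adapter)  # 256
print(size_bytes(full) // 2**20, "MiB vs", size_bytes(adapter) // 2**10, "KiB")
# 32 MiB vs 128 KiB
```

The adapter grows linearly with the matrix dimensions (`rank * (d_in + d_out)`) while the full matrix grows quadratically (`d_in * d_out`), which is why the gap widens for larger models.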

+

+

+

+

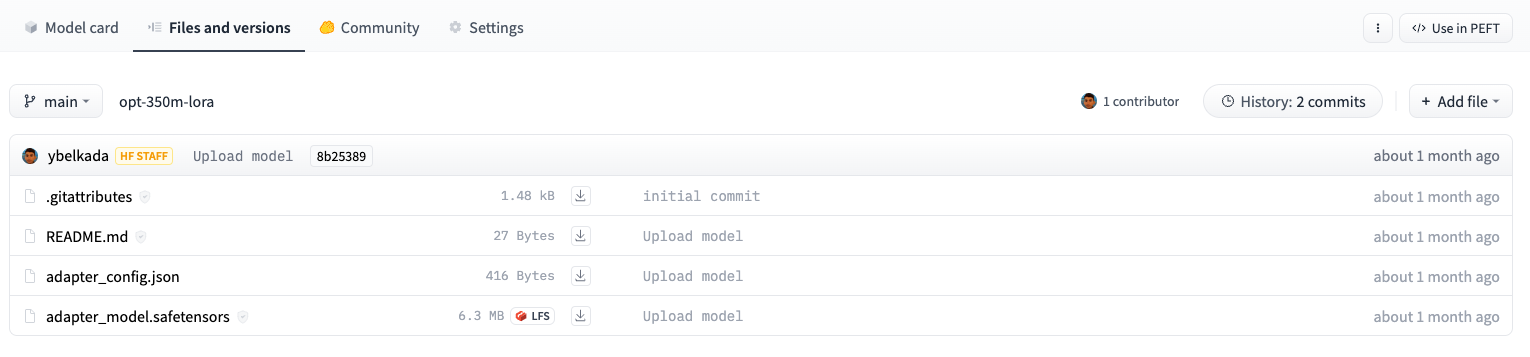

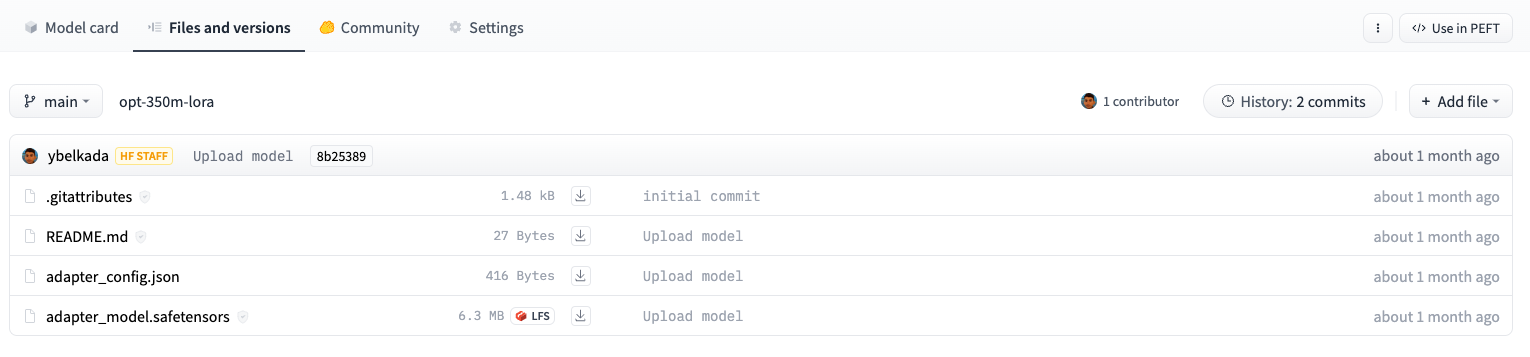

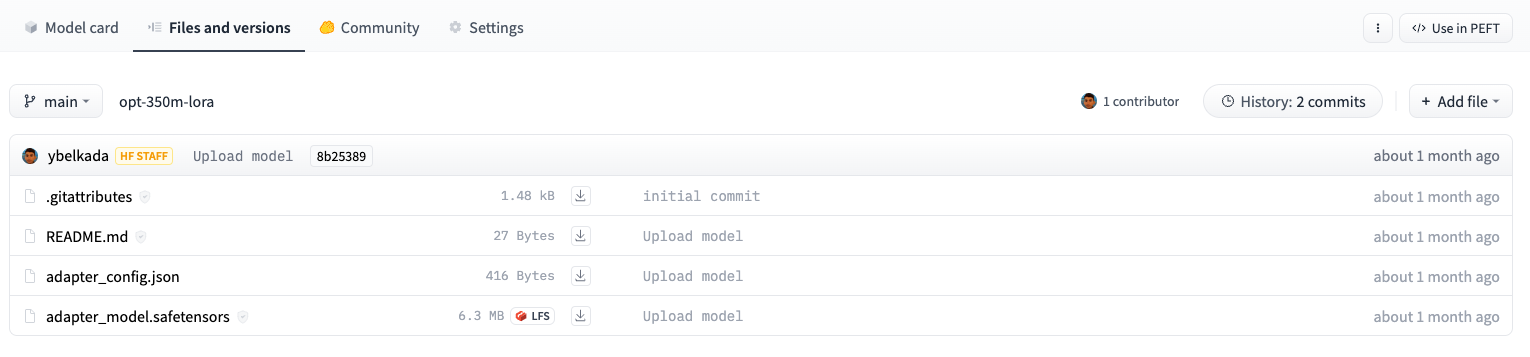

The adapter weights for a OPTForCausalLM model stored on the Hub are only ~6MB compared to the full size of the model weights, which can be ~700MB.

+

+

+  +

+