
Removing requirements for compute_sub_gradient to use sensitivity image #893


Sorry, I thought a Draft PR didn't run Travis. It appears that using …

OK, we'll fix all the …

That'll have to be …

Need to fix the documentation regarding sensitivities in STIR/src/include/stir/recon_buildblock/PoissonLogLikelihoodWithLinearModelForMean.h (lines 69 to 72 at 42240de).


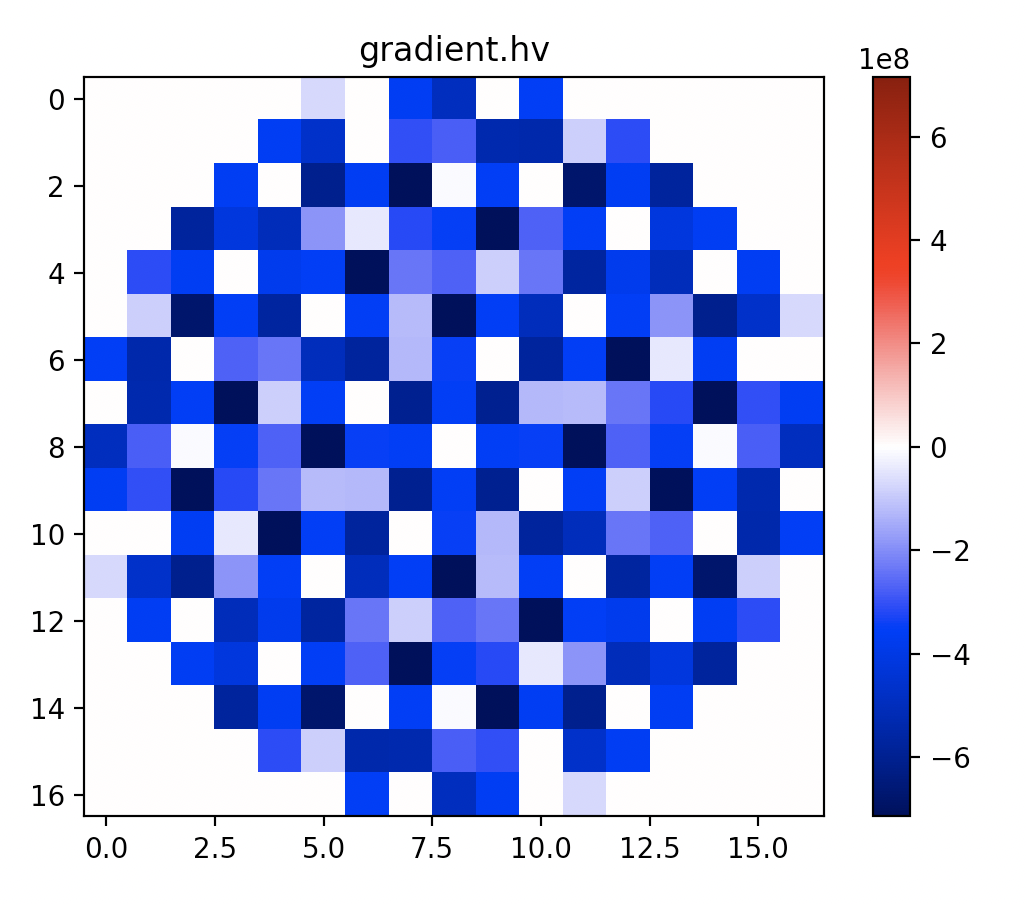

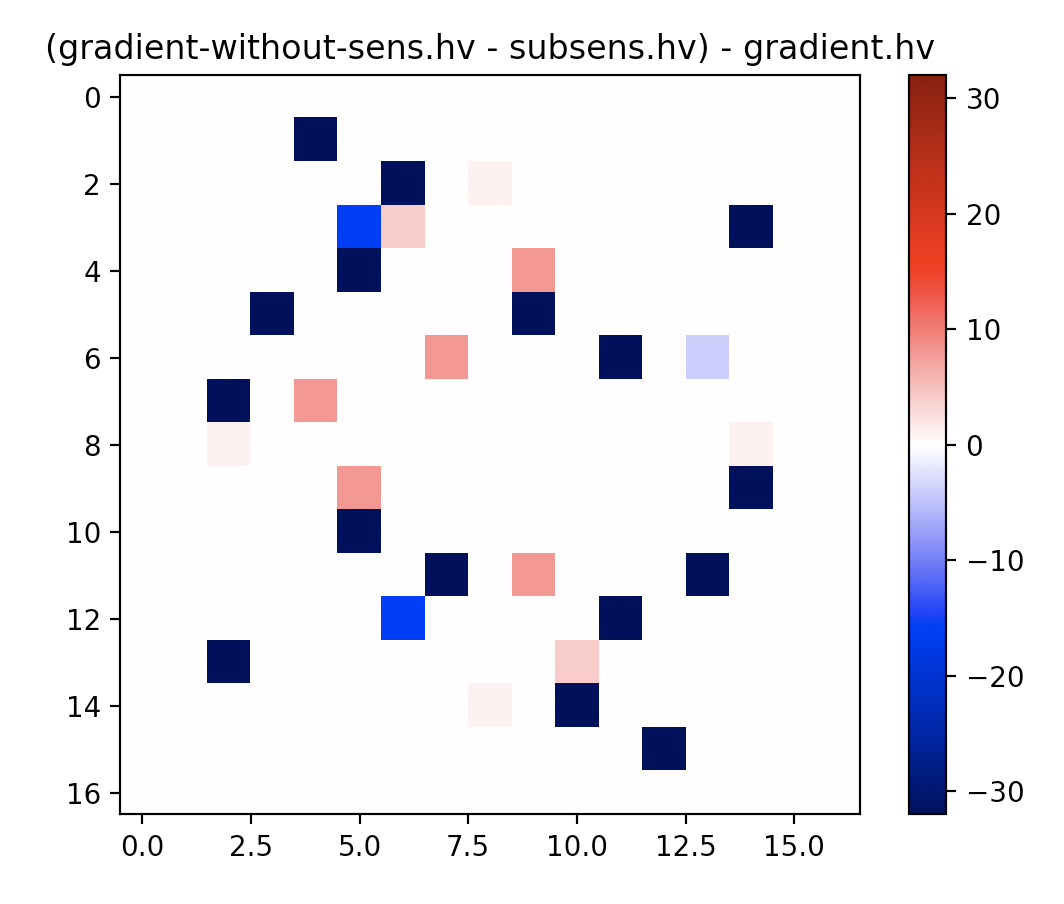

Interestingly, the … I think this is due to a normalisation issue in the new implementation, caused by STIR's reluctance to apply normalisation during the gradient computation and by the previous handling via sensitivity subtraction. Let me try to explain. The gradient computation does not want or need the normalisation (see STIR/src/recon_buildblock/PoissonLogLikelihoodWithLinearModelForMeanAndProjData.cxx, lines 1207 to 1217 at dc6f356).

This is because it uses the following mathematical shortcut:
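For reference, a sketch of that shortcut. It assumes (not checked against the STIR source) that the multiplicative normalisation factors $n_i$ are applied after the additive term $a_i$, so the expected data are $\bar y_i = n_i([Ax]_i + a_i)$ for system matrix $A$, image $x$ and measured data $y$, and that the objective is $L(x) = \sum_i (y_i \log \bar y_i - \bar y_i)$:

$$
\frac{\partial L}{\partial x_j}
= \sum_i A_{ij}\, n_i \left( \frac{y_i}{\bar y_i} - 1 \right)
= \sum_i A_{ij}\, \frac{y_i}{[Ax]_i + a_i} \;-\; s_j ,
\qquad s_j = \sum_i A_{ij}\, n_i .
$$

Under that assumption $n_i$ cancels in the ratio term, so the back projection needs no normalisation, and all of it ends up in the sensitivity image $s$ that is subtracted afterwards.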

If this is the case, this PR's modification might need some tweaking: rather than subtracting ones, it should subtract …


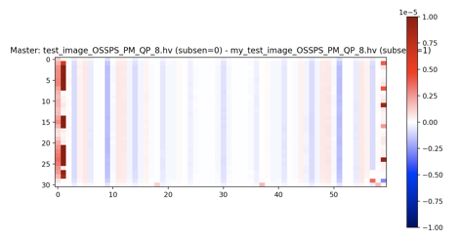

Test passes on my end. I get the same result (ish); difference between …

Edit: This was fixed by fa9e87e.

Test failing on OSSPS in recon_test_pack (STIR/recon_test_pack/run_tests.sh, lines 231 to 246 at 42240de):

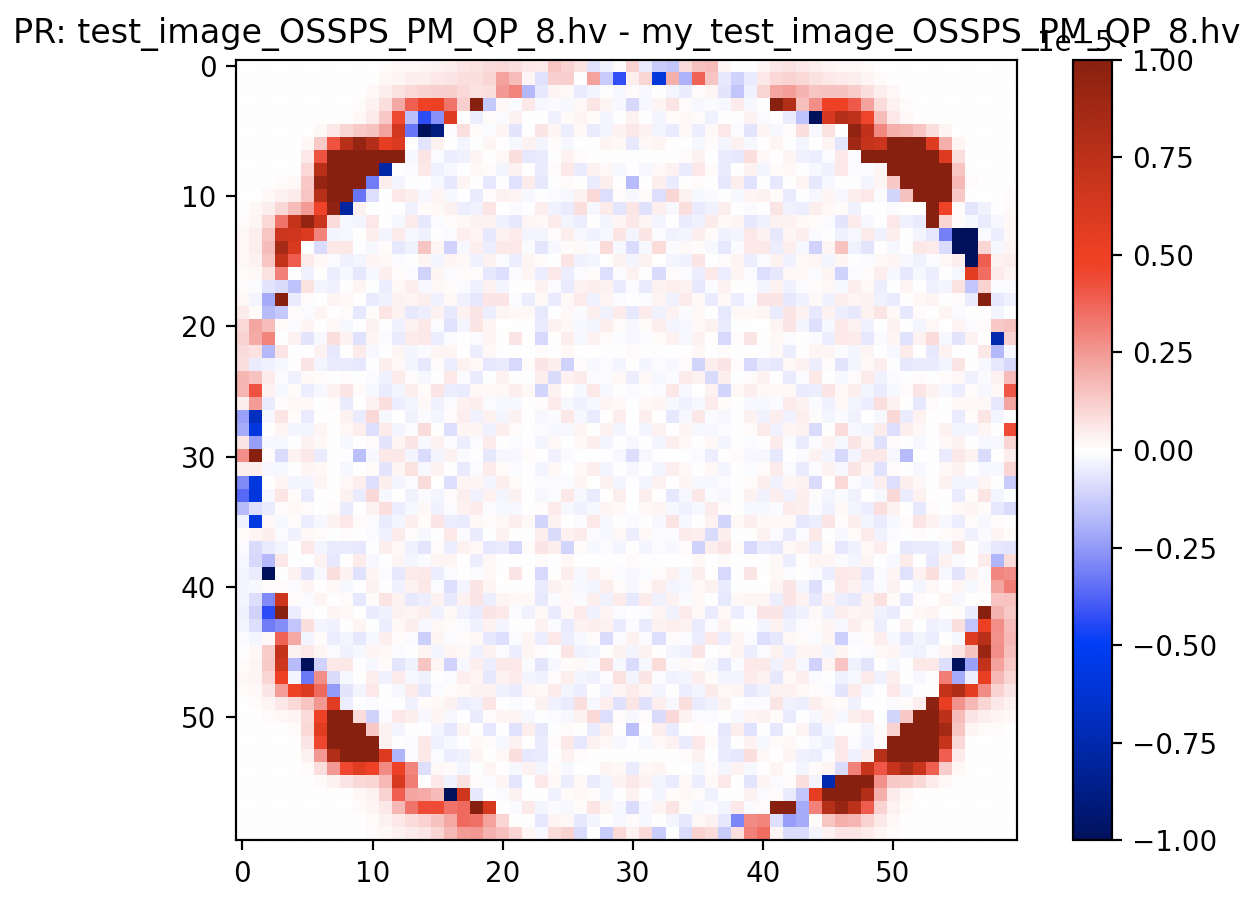

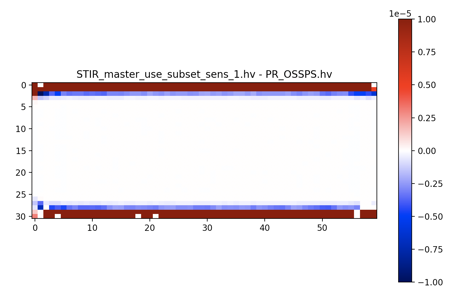

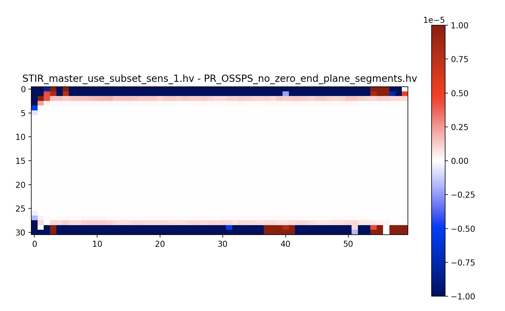

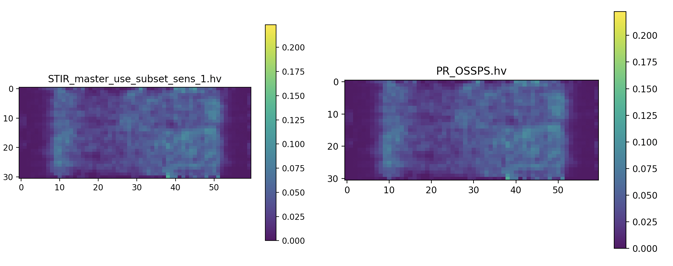

This PR: the error output … The OSSPS reconstruction itself runs fine; the reconstructed image and the reference just differ by ~1%.

STIR master: the differences between the image and the reference in STIR master are of the order of 1e-04 % / 1e-05 %.

Discussion: I think this is because the OSSPS reconstruction parameter file using … The difference between the master image and the reference is virtually 0.

Proposed solution: replace the OSSPS reference image.

…ient_without_penalty

…o_subtraction is true



…o the endplanes can be zeroed

…ld Poisson classes


Major changes since last comments:

Marking as ready for review (pending Travis). There are still a few issues:

Edit: Travis passed on the previous major commit.

Ready for review.

rephrased release notes on the gradient computation changes [ci skip]

This is a fix for #873

Introduces the method …

where the bool `do_subtraction` (name needs to change) indicates to `distributable` and the gradient computation whether to compute the gradient as:

- `do_subtraction = true` (e.g. `compute_sub_gradient_without_penalty`), or
- `do_subtraction = false` (e.g. `compute_sub_gradient_without_penalty_plus_sensitivity`).